OpenAI recently announced GPT-4o, its new flagship model and the latest engine behind ChatGPT, the popular AI chatbot it first released in 2022. The new model aims to take human-computer interaction to a new level by making conversations with ChatGPT faster and much more natural.

In GPT-4o, the 'o' stands for 'omni', since a single model handles text, images, and audio. It does not bring a major leap in intelligence or reasoning over the GPT-4 Turbo model, but it does bring plenty of other updates. It is designed to give faster, more human-sounding responses, and it can even simulate emotions. It is also considerably quicker at understanding visual and audio inputs. In this deep dive, we'll take a look at the features GPT-4o offers and how it could change the way we interact with AI assistants. So, join us, and let's get started!

GPT-4o is Significantly Faster

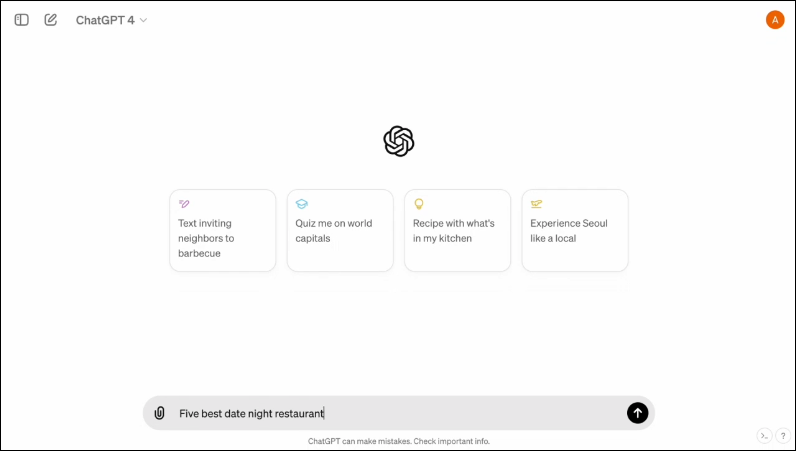

The GPT-4o model allows ChatGPT to accept any combination of text, audio, and images as input, and it can respond in different formats as well. What's most impressive about it, however, is how quickly it responds.

With the new model, ChatGPT can respond to audio input in as little as 232 milliseconds, with an average of about 320 milliseconds, which is close to how long a person takes to reply in a conversation. On English text and code, GPT-4o performs on par with GPT-4 Turbo.

It also does much better with text in non-English languages, and because it is more efficient than its predecessor, it is considerably cheaper in the API: OpenAI prices it at half the cost of GPT-4 Turbo.

ChatGPT is also getting a refreshed interface, designed to make interactions simpler and cleaner.

It is Multimodal

ChatGPT's existing Voice Mode relies on a pipeline of three separate models. The first transcribes the audio input into text, GPT-3.5 or GPT-4 then takes that text and produces a text reply, and the last model converts the reply back into audio. According to OpenAI, this pipeline has average latencies of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4.

However, this design loses information along the way: the main model cannot directly hear tone of voice, multiple speakers, or background noise, and it cannot output laughter, singing, or emotional expression. With GPT-4o, OpenAI has instead trained a single model end to end across text, vision, and audio, so the same network processes every type of input and produces the output directly.

ChatGPT now supports more than 50 languages, and GPT-4o is also available to developers through the OpenAI API.

It Can Simulate Human Emotions

When ChatGPT was first announced, one of its biggest downsides for many people was that it could not respond with emotion. With GPT-4o, ChatGPT can simulate human emotions and give responses that reflect them.

In the demos shared by OpenAI, the chatbot can be seen laughing, singing, and even being sarcastic when asked to. This goes well beyond what other AI chatbots currently offer and should make interactions much more enjoyable. According to OpenAI, the new model will also let ChatGPT pick up on users' moods and respond accordingly.

Combined with the capability to use visual inputs, the ability to provide emotional responses is one of the most impressive features that the new model offers.

The New Model Can Store a Greater Amount of Information

Thanks to GPT-4o, ChatGPT can better understand images such as charts and photos, and it can also remember more information about the user. This also means it can handle larger pieces of text in its responses, which can be handy in several use cases.

For instance, you can now ask the chatbot to translate longer passages of text, and it can help you with live translation. Since it accepts visual and audio inputs alongside text, you can also use it to pull information from screenshots, photos, and other images.

Because it can retain more information without users needing to repeat themselves, GPT-4o supports longer back-and-forth conversations, and with more context to draw on, users can expect those conversations to be more sophisticated.

Safeguards in the New Model

Like earlier GPT models, GPT-4o comes with built-in safeguards. OpenAI filtered its training data and refined the model's behavior after training. It also evaluated the model across several risk categories, such as cybersecurity and persuasion, to avoid unwanted incidents.

Beyond that, OpenAI worked with more than 70 external experts in fields such as misinformation and social psychology to identify and mitigate risks that the new model might introduce or amplify. The company says it will keep monitoring how the chatbot is used for new risks and take action as needed.

For starters, audio output will be limited to a small selection of preset voices. OpenAI will also use feedback from users to keep improving the model's safety.

GPT-4o Availability

Until now, there were two versions of ChatGPT: a free version running on GPT-3.5 and a paid version that ran on GPT-4 and cost $20 per month. The paid version's larger model could handle greater amounts of data.

GPT-4o will be available to both free and paid users and is expected to roll out in the coming weeks. On mobile, it will arrive through an update to the existing app, while desktop users will get a new macOS app in addition to the web version (a Windows version is due later this year).

To distinguish between the two tiers, OpenAI will give paid users up to five times the message limit of free users. Once free users hit their limit, they'll be switched back to GPT-3.5.

OpenAI's announcement of GPT-4o comes just one day before Google's annual I/O developer conference, where Google is expected to make AI announcements of its own. OpenAI has also promised more announcements are on the way, so there's little doubt that the rivalry between the two companies is only starting to heat up.

While we do not know when OpenAI will unveil more changes to its AI model, we do know that GPT-4o is headed to devices running ChatGPT globally. So, keep your fingers crossed and wait for the update to show up on your device. Until next time!
