Google has a lot of faith in Gemini, and it's apparent from the way the company is integrating Gemini-powered AI features across its products. Google Maps is the latest recipient of this integration.

With the latest update to this beloved product, Google has added the ability for users to ask for recommendations and ideas within Google Maps. Maps is no longer just a way to get to places; it's also a way to discover where you want to go.

Say you want to plan an activity with a friend. You can ask Maps for "things to do with friends at night." Gemini will then curate a set of recommendations for places that match your request. In this instance, Gemini puts together a list of places with live music or speakeasies, since you specifically asked for activities at night. You'll still be able to browse regular results below the ones curated by Gemini.

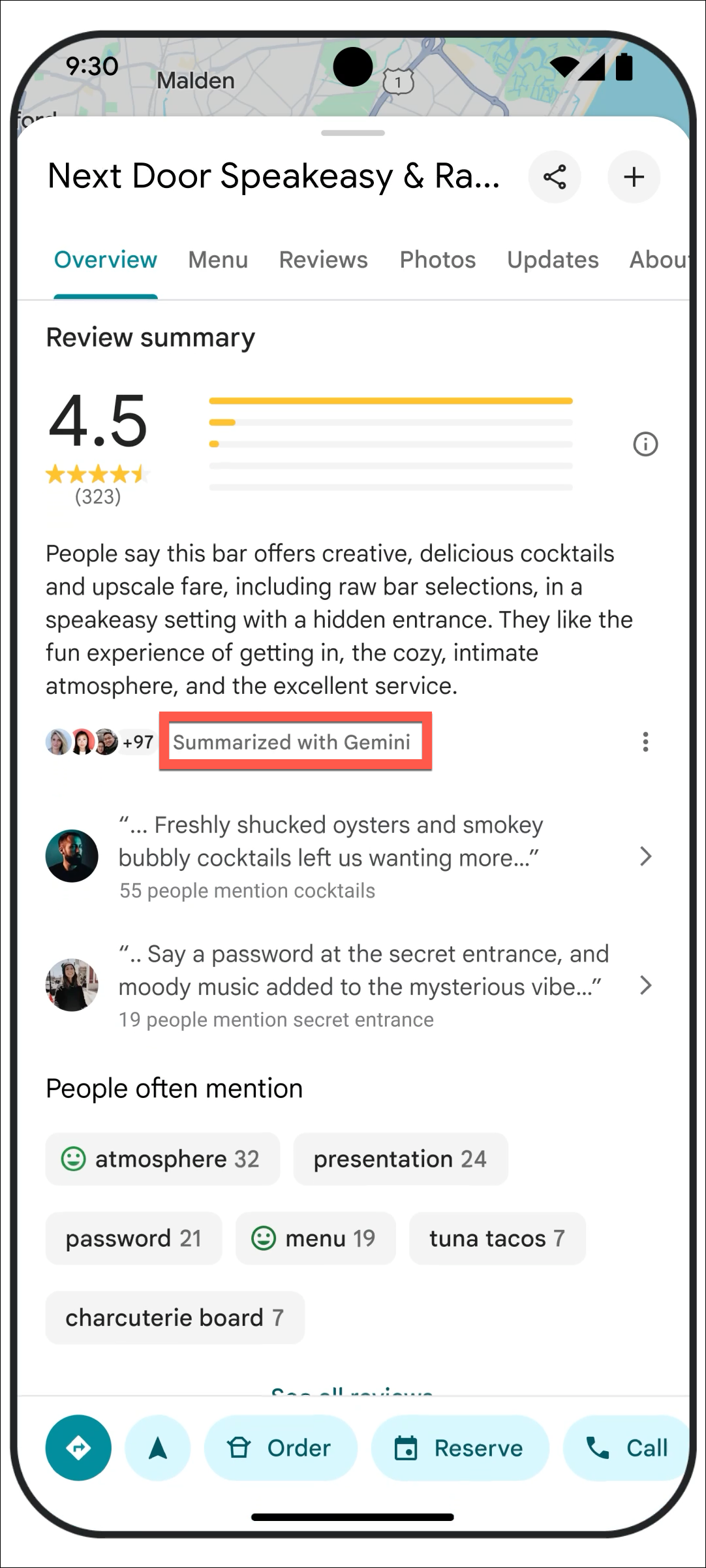

Gemini's help doesn't end there. If you like a recommendation and open it to check it out further, Maps will now also show an AI-generated summary of its reviews. Summarized reviews are fast becoming the norm in many apps, since they give you an overview of user sentiment much more quickly; Amazon, for instance, also offers a quick summary of product reviews.

You can also ask Gemini follow-up questions about a place, such as what the atmosphere is like or whether it has outdoor seating. You can enter your own prompt in the 'Ask Gemini about this place' area or choose from some pre-defined options. Gemini will answer your question and also show the reviews it based its answer on, so you can verify the information.

Ask Maps is rolling out to all iOS and Android users this week, but only in the US for now. There's no telling when the feature will roll out to a wider audience. For the feature to succeed, it's important that Gemini presents real recommendations from the map with up-to-date information. The team at Google probably wants to see how the feature fares, given the tendency of LLMs to hallucinate, before a wider rollout.