Recently, I tried out Claude 3.5 Sonnet, Anthropic's most powerful AI model yet, which the company claims can outperform rivals like OpenAI's ChatGPT. That is a bold claim, and Anthropic is backing it up with some pretty impressive benchmarks.

The new model also has vision capabilities, so you can give it images and documents and have it extract information from them. It is also better at picking up on nuances like humor, and it is much faster. All these elements make Claude 3.5 Sonnet a major competitor to the new GPT-4o-powered ChatGPT, which is also a multimodal AI model.

Like Sonnet, ChatGPT-4o can use vision-based inputs in addition to text-based ones to provide responses. It is equally good at problem-solving and has similar conversational capabilities. Since the two models are so close to each other in capability and performance, the question on everyone's mind is which of the two is better. To answer that, I decided to compare both models in detail.

Extracting information from documents

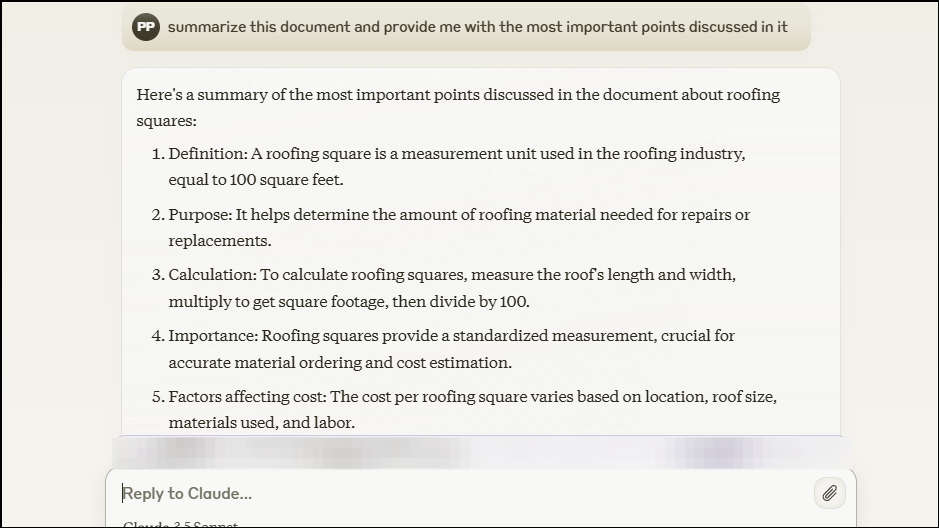

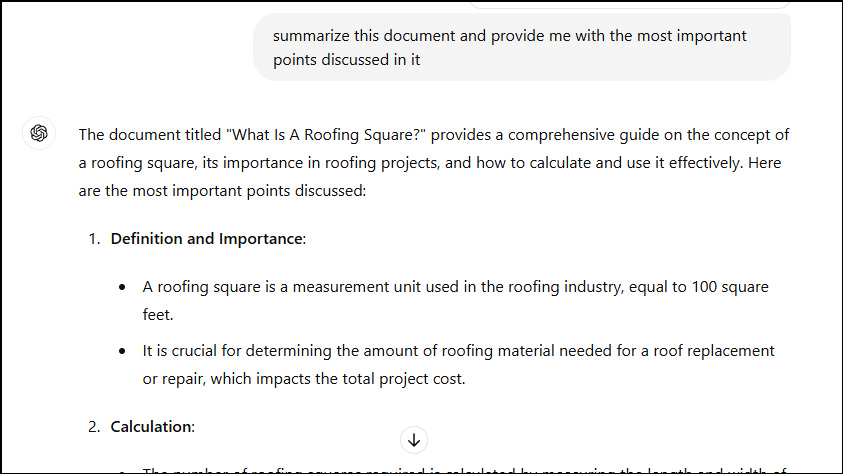

AI tools are often used to extract information from documents like PDF files and then summarize it, so I decided to first check which of the two models could do this more effectively. For that, I took a PDF document about roofing squares that I'd written some time ago and uploaded it to ChatGPT and Claude.

Then, I gave them the prompt, "summarize this document and provide me with the most important points discussed in it." Here's what I discovered. The new Claude model was much faster than ChatGPT and started generating its response immediately after I submitted my request. It also followed the prompt more closely, presenting the important points as a numbered list. If you're short on time and just want to glance at what a document contains, this is what you need.

However, although ChatGPT was slower than Claude, I preferred its response in this instance. It not only listed the most important points in the document but also divided them into different sections, such as Definition and Importance, Calculation, etc.

If you need to find specific information regarding a certain aspect of the topic discussed in a document, ChatGPT's way of doing things seems to be more useful. You do not need to go through all the points and can just look at the section needed. The information is provided in a manner that is easier to go through and digest.

Testing vision capabilities

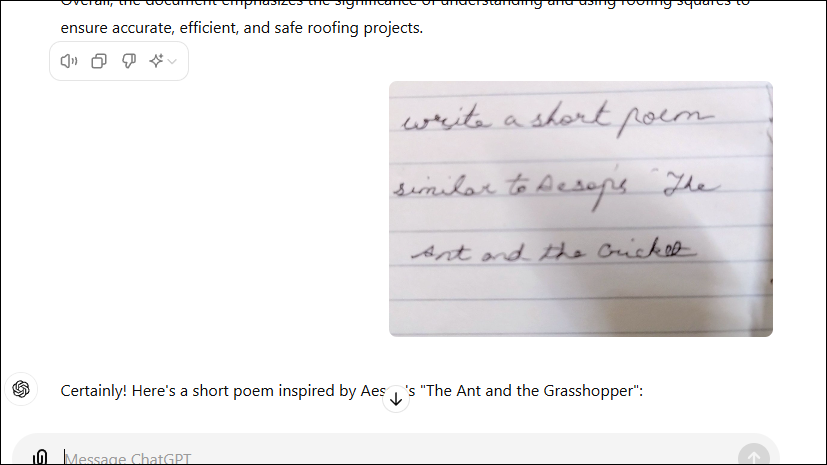

Since one of the key highlights of both Claude 3.5 and ChatGPT-4o is their ability to use visual input and provide information based on that, I decided to test that next by asking them to follow handwritten instructions after transcribing them. I asked the AI models to write a short poem similar to Aesop's 'The Ant and the Cricket'.

While I did not specify it in writing, I wanted the output to be inspired by the poem but with different characters. Claude first asked me to confirm my handwritten request and then proceeded with it. The result was quite good and very close to the original poem, but it featured the same characters. After it finished, the chatbot also asked whether I wanted a different approach or any modifications to the poem.

ChatGPT did not require me to confirm my request but immediately proceeded to complete it. The poem it wrote was also very impressive, and it replaced the ant and the cricket from the original creation with a bee and a butterfly, something Claude did not do. I also found ChatGPT's version to be more poetic.

So, while the poems they produced differed, both models can decipher and understand handwritten and printed text very well, even if the images are not very clear. These powerful vision capabilities also mean you can use these tools to glean information from graphs and charts, which makes them useful for tasks that involve reading data and figures.

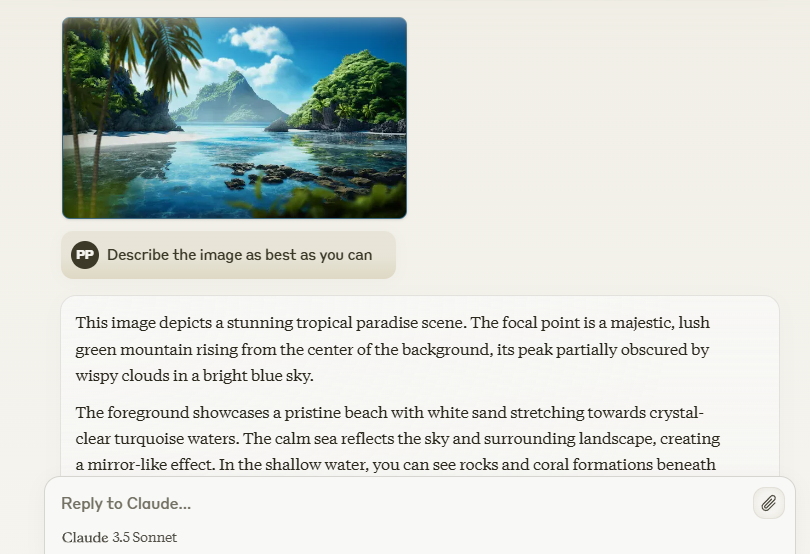

Describing Images: Since both models can also extract information from images, I had to try that out as well. I provided Claude and ChatGPT with an image of a tropical island and asked them to describe it. As you can see, Claude provided a vivid description of the image, describing each element in the foreground and background very clearly, even those I had failed to notice myself.

Claude's choice of phrases and words to describe the image also felt more impactful, doing justice to the image. It did a fine job of describing the colors and lighting and of conveying the overall sense of serenity and tranquility the image evokes.

The results were more complicated in the case of ChatGPT, which can describe images, though not as well as Claude. OpenAI's model made mistakes, adding elements that were not present, which shows that it can still hallucinate. Also, it initially kept trying to describe the image based on its title instead of what it depicted, finally getting it right after multiple attempts.

Even then, the description I got from it could not hold a candle to Claude's response. This was quite surprising since GPT-4o's vision capabilities were one of the biggest highlights that OpenAI showcased at launch.

Generating and editing content

Next, I attempted to see which model fared better in content generation. To get a clear idea about how they perform, I decided to generate content that requires real facts and data, as well as fictional content that would rely on the AI model's creativity.

First, I asked Claude and ChatGPT to provide me with a detailed article on different Android skins, since it is something that many people want to know about but is a very subjective topic, with each individual having their own favorite. I used the prompt, "Can you write a detailed article on the different Android skins, such as OneUI, MIUI, ColorOS, etc.?" Given how much time we spend with our smartphones, I wanted to find out how accurate the models were and how much information they could provide about each skin.

As usual, Claude was faster in providing a response. It opened with an overview explaining what Android skins are, which is nice, but then simply listed the different skins and their features as bullet points. Keep in mind that the model provided this result even though I specifically asked for a 'detailed article' in my prompt.

In contrast, ChatGPT created a more impressive title for the article and included a brief introduction. Following that, it explained each skin in its own section, dividing each one into an Overview, Key Features, Pros, and Cons.

Not only does this provide more comprehensive information, but it also lets you see exactly how the different skins compare to each other. Finally, it ended the article with a proper conclusion. ChatGPT mentioned fewer skins than Claude did, but here quality matters more than quantity.

While ChatGPT did perform better than Claude in this case, the latter can also generate good content, as I've found in my previous testing. The outcome may depend on the topic or the way you phrase your prompt. That's why I gave both models another prompt: "Write a humorous story about a penguin that wants to fly but ends up getting entangled into funny situations when it attempts to do so." It also gave me an opportunity to see how well the models understand and can convey humor.

This time, the results were very close to each other, with both models crafting genuinely hilarious stories. Both stories had common elements, like irony and physical comedy. In fiction, personal preference is a powerful factor, and overall, I found Claude's output slightly better, especially the way it played with words to generate humor.

But as I mentioned before, ChatGPT's story was fun to read as well and was slightly longer than Claude's. Its ending was also more wholesome. Thus, both Claude and ChatGPT were able to generate good fictional content while including humorous elements as per my prompt.

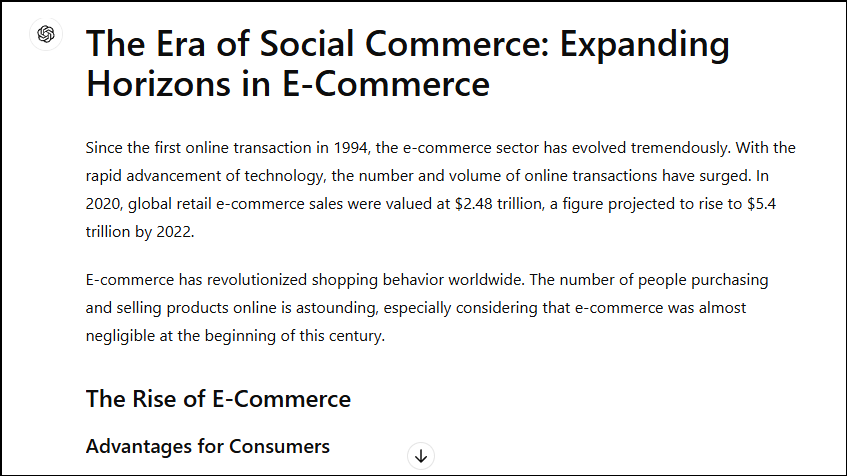

Editing Content: Generating content is only one part of the process. To truly find out what an AI model can do when it comes to content, you also need to test its content editing capabilities, which is what I proceeded to do. For this purpose, I provided a text piece on social commerce to Claude and ChatGPT and gave them the prompt, "Can you expand this article while also proofreading and improving it?"

When improving the article, Claude started with an introduction, then wrote about the evolution of Social Commerce, and finally followed with other sections, expanding each one as it saw fit. The model also used numbered lists and bullet points where it deemed necessary to improve readability.

ChatGPT's response was similar to its earlier ones, where it divided the content into various sections with different subheadings. It did not use any lists but kept the information in the form of paragraphs. As for the changes and improvements, I noticed that Claude made more drastic changes to the article than ChatGPT, but the end result was also much better. Ultimately, I found the editing capabilities of Sonnet to be more powerful and much better suited to my workflow.

Coding ability

No comparison of AI models is complete without a look at their coding abilities. While Anthropic has highlighted coding as one of Claude 3.5 Sonnet's strengths, the new GPT-4o-powered ChatGPT is also not something to look down upon when it comes to coding.

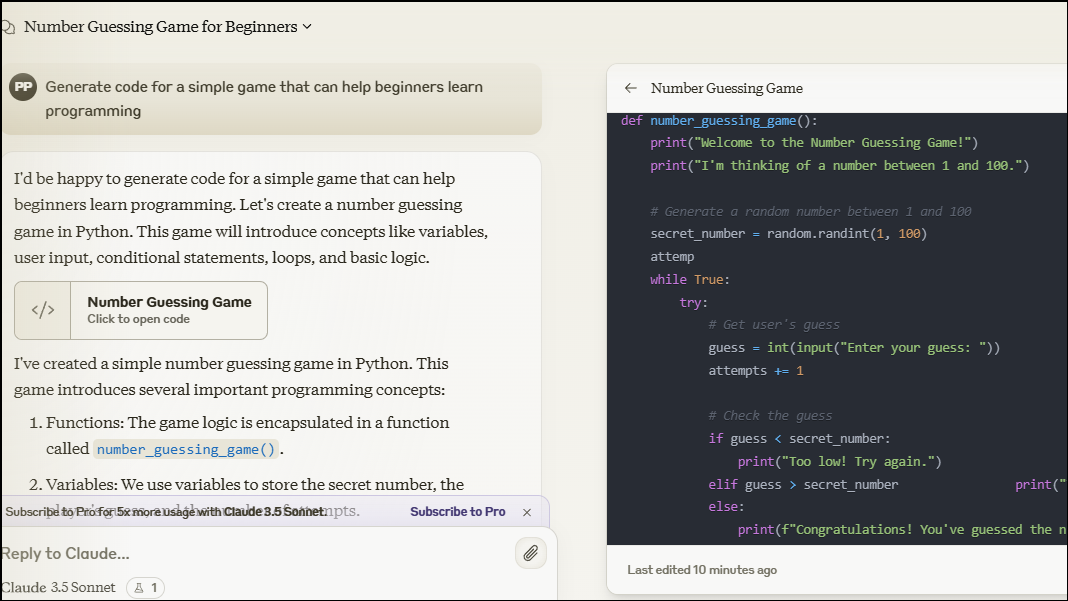

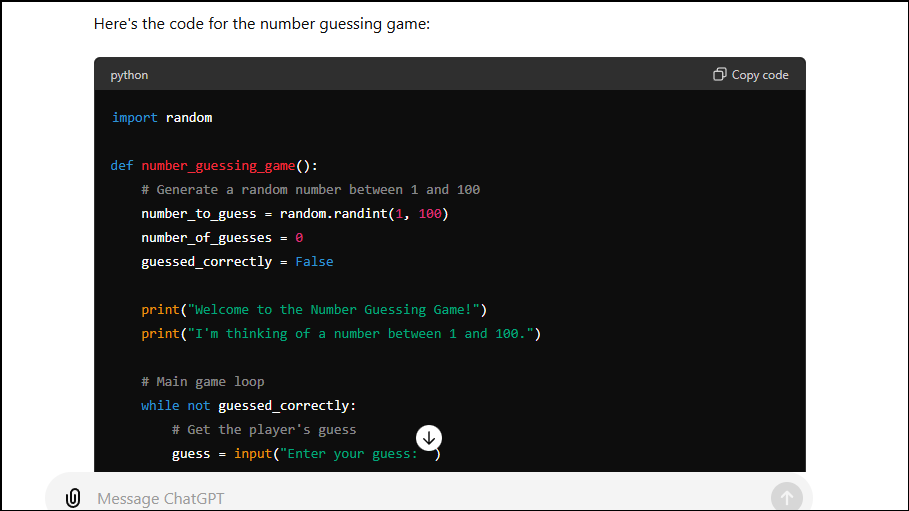

To test their code-generating ability, I asked both Claude and ChatGPT to "Generate code for a simple game that can help beginners learn programming." While both wrote the code in Python, Claude completed the code generation faster, as expected. It displayed the entire code on the right side of the screen while explaining elements like Functions and Variables on the left.

What I liked most about Claude's response is that it also included a button that lets you go to the code instantly, so you can easily check it out. In addition, the chatbot informed me of the requirements needed to run the code, complete with instructions. As for the code itself, it was quite easy to understand and also ran perfectly well when I tested it.

As for ChatGPT's response, it also generated simple yet functional code, as I had requested. Below the code, the chatbot listed the steps needed to run the game as well as the concepts that the code covers, making it easy for beginners to understand. Overall, the results were pretty similar for both models in this instance, though Claude explained more elements and offered an option to have it explain any part of the code in detail.
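To give a sense of what a beginner-friendly game of this kind can look like, here is a minimal sketch of a number-guessing game in Python. To be clear, this is not the code that either Claude or ChatGPT produced in my test. It is my own illustration, and the function names (`check_guess`, `play`, `main`) and the 1-to-20 range are assumptions chosen for the example. It covers the sort of concepts such a game usually teaches: variables, functions, loops, and conditionals.

```python
import random


def check_guess(guess: int, secret: int) -> str:
    """Compare a guess with the secret number and return a hint."""
    if guess < secret:
        return "too low"
    if guess > secret:
        return "too high"
    return "correct"


def play(secret: int, guesses) -> int:
    """Go through the guesses one by one.

    Returns the number of attempts needed to find the secret,
    or -1 if the guesses run out before it is found.
    """
    attempts = 0
    for guess in guesses:
        attempts += 1
        hint = check_guess(guess, secret)
        print(f"Guess {attempts}: {guess} is {hint}")
        if hint == "correct":
            return attempts
    return -1


def ask_player():
    """Keep asking the player for whole numbers (hypothetical helper)."""
    while True:
        try:
            yield int(input("Your guess: "))
        except ValueError:
            print("Please type a whole number.")


def main() -> None:
    """Play one interactive round with a random secret number."""
    secret = random.randint(1, 20)  # the range is an arbitrary choice
    print("I am thinking of a number between 1 and 20.")
    attempts = play(secret, ask_player())
    print(f"You got it in {attempts} attempts!")


# Call main() to play in a terminal. It is not called automatically
# so that the functions above can be imported or tested on their own.
```

Keeping the comparison logic in its own function, separate from the part that reads keyboard input, is a deliberate choice here: it lets a beginner try out `check_guess(3, 7)` directly and see what each piece does before running the whole game.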

Mathematical abilities

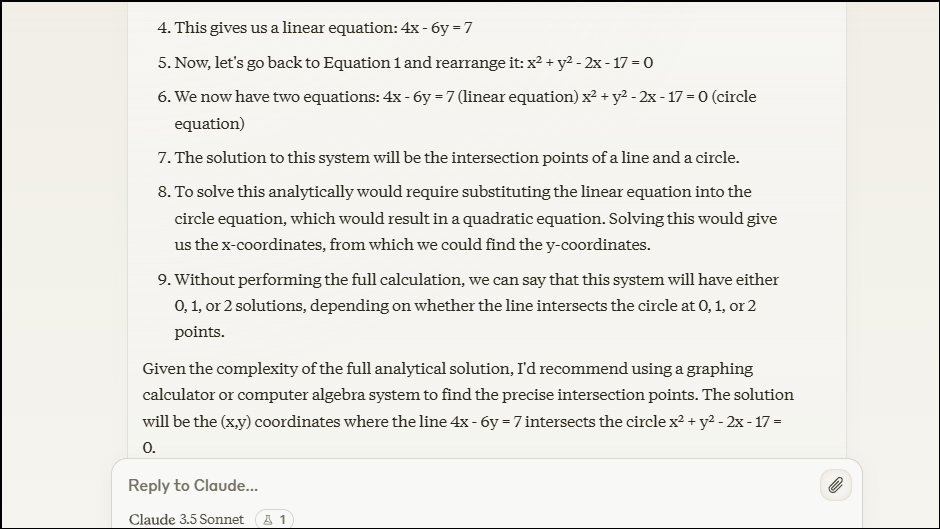

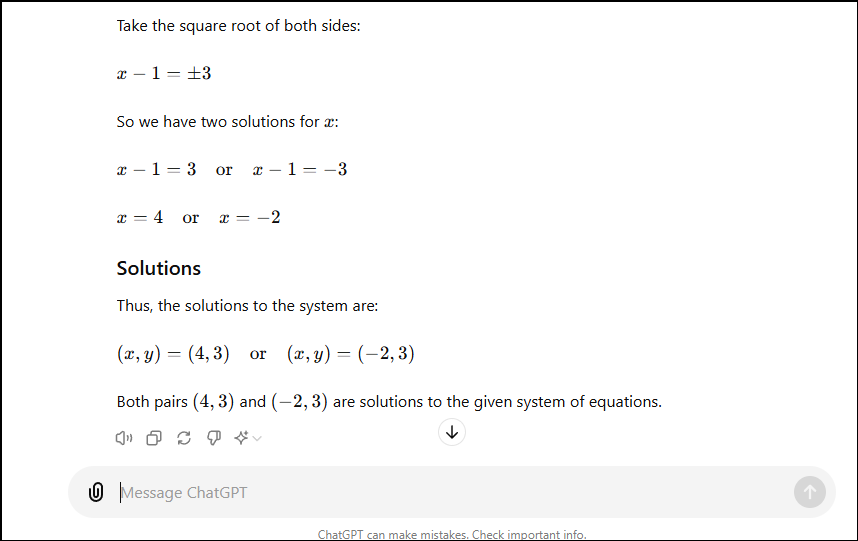

Lastly, I gave both Claude and ChatGPT a math question to solve, to see how well they did and which one was faster. The question involved algebraic equations but was not particularly challenging. Both models started by explaining what to do in the first step, though their approaches differed. Claude proceeded to expand the equation and ultimately told me that solving the problem completely required a graphing calculator or a computer algebra system.

That said, it did state the number of potential solutions to the problem. In contrast, ChatGPT solved the problem in its entirety and gave me all the possible solutions to it. This indicates that as far as mathematical abilities are concerned, ChatGPT-4o is ahead of Sonnet.

Final verdict - Claude 3.5 Sonnet or ChatGPT-4o: Who won?

Choosing between Claude 3.5 Sonnet and ChatGPT-4o isn't easy, but ultimately, only one can be the winner, and for me, that has to be the new Sonnet model. Not only is it significantly faster than ChatGPT, but it also provides more accurate answers. I especially liked how well it could describe images and take actions relating to them.

Claude also did not hallucinate even once during my time with it, which is another point in its favor, and its responses were overall closer to my instructions. Even though it did not perform as I expected in one instance where I wanted detailed content, using it to get the information I wanted was generally easier and required less effort.

By trying out both Claude 3.5 Sonnet and ChatGPT-4o, I've discovered that both are exceptionally good AI models that are very close to each other in performance. While Sonnet performs some tasks better, ChatGPT delivers better results in others. Which one is better for you will depend on your individual use case.

Additionally, the free versions of both models are limited in what they can do. So, if you want to use either AI on a regular basis, I recommend getting a paid subscription for the best results.