With Google recently renaming its AI chatbot from Bard to Gemini and announcing multiple models, things have become a bit confusing. And now there's a new model in the mix: Google has released Gemini 1.5 Pro. The obvious question is what sets Gemini 1.5 Pro apart from the older Gemini 1.0 Pro.

Here, we'll look at the differences between the two and at what you can do with the upgraded model.

What Is Gemini 1.5 Pro?

Gemini 1.5 is the next-generation model in Google's Gemini family of large language models, and it delivers significant improvements over the existing 1.0 model.

If you haven't used Gemini yet, its free version works much like other AI chatbots. It runs on the Gemini 1.0 Pro model: you type a prompt into the input box and ask the AI to look up information, generate content, or create images.

Who can access it? Gemini 1.0 is currently available for free in many regions and languages through the web app, but Gemini 1.5 Pro is not yet available to the general public. For now, only developers and enterprise customers can try it, through Google AI Studio and Vertex AI.

The preview version available for testing is free and supports a context window of up to one million tokens, though you should expect longer latency while it is in preview. Once the model is released more widely, it will no longer be free.

Google plans to launch Gemini 1.5 Pro for everyone with a standard 128,000-token context window. It has said it will introduce pricing tiers that start at 128,000 tokens and scale up to one million, but it has not yet announced prices.

Gemini 1.0 Vs. Gemini 1.5 Pro

Now let's look at the features that make Gemini 1.5 Pro a significant upgrade over the previous version.

Larger Context Window

An AI model's context window is the amount of input, measured in tokens, that it can process at once. Tokens can represent pieces of text, images, video, audio, or code. A larger context window lets the model take in and reason over more information in a single prompt.

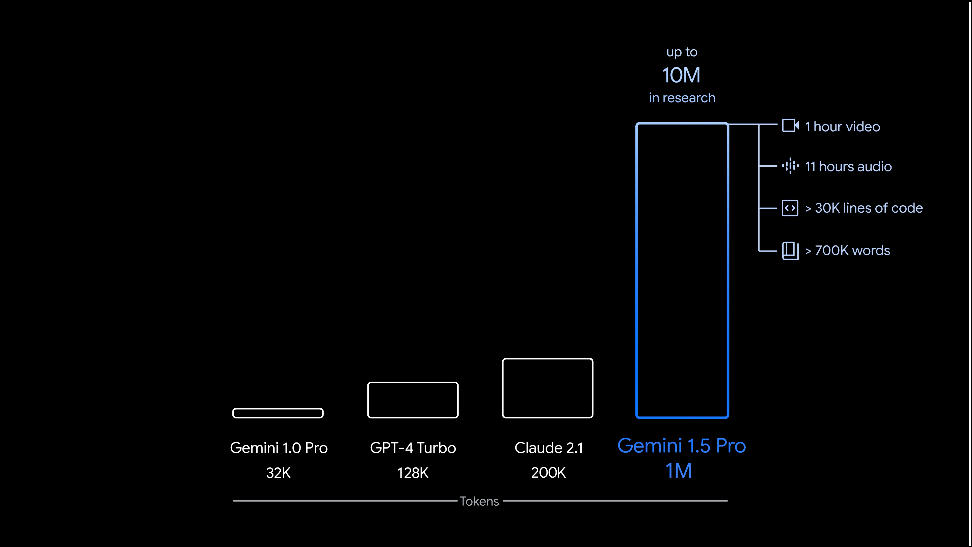

Gemini 1.0 Pro's context window is limited to 32,000 tokens, while Gemini 1.5 Pro's extends to one million tokens. (In its research, Google has even successfully tested context windows of up to 10 million tokens.)

However, the one-million-token window is currently available only to developers and enterprise customers in the private preview. The standard context window of Gemini 1.5 Pro is 128,000 tokens, which is still four times that of Gemini 1.0.
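The practical question a context window answers is whether your input fits. The sketch below checks that against the window sizes mentioned in this article. It assumes a rough rule of thumb of about four characters per token for English text; Gemini's real tokenizer will produce different counts, and images, audio, and video are tokenized differently, so treat the results as estimates. The model labels and helper names are hypothetical.

```python
# Rough context-window budget check.
# ASSUMPTION: ~4 characters per token, a common rule of thumb for English
# text. Gemini's actual tokenizer will give different counts, and non-text
# inputs are tokenized differently, so this is only an estimate.

CHARS_PER_TOKEN = 4  # heuristic, not a Gemini constant

# Context window sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.0 Pro": 32_000,
    "Gemini 1.5 Pro (standard)": 128_000,
    "Gemini 1.5 Pro (preview)": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate a text's token count, rounding up to whole tokens."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_window(texts: list[str], window: int) -> bool:
    """Return True if the combined estimated tokens fit in the window."""
    return sum(estimate_tokens(t) for t in texts) <= window


def models_that_fit(texts: list[str]) -> list[str]:
    """List the models whose context window can hold all the texts."""
    return [name for name, size in CONTEXT_WINDOWS.items() if fits_in_window(texts, size)]


if __name__ == "__main__":
    # A hypothetical 400,000-character document (~100,000 estimated tokens).
    document = "x" * 400_000
    print(estimate_tokens(document))  # 100000
    print(models_that_fit([document]))  # ['Gemini 1.5 Pro (standard)', 'Gemini 1.5 Pro (preview)']
```

A document of that size overflows Gemini 1.0's window but fits comfortably in either Gemini 1.5 Pro window. Swapping the heuristic for a real token counter would make the check accurate.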

With the one-million-token context window, Gemini 1.5 Pro can process about an hour of video, 11 hours of audio, 30,000 lines of code, or 700,000 words in a single prompt. That is nearly eight times the context window of OpenAI's GPT-4 Turbo, which tops out at 128,000 tokens.
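Those capacity figures also hint at how many tokens each kind of input consumes. The back-of-the-envelope calculation below divides one million tokens by each figure, assuming each one fills the whole window on its own. The results are rough averages derived from rounded public numbers, not official per-unit token rates; the names in the code are hypothetical.

```python
# Back-of-the-envelope: implied tokens per unit for each capacity figure,
# ASSUMING each figure alone fills the full one-million-token window.
# These are rough averages from rounded numbers, not official rates.

WINDOW_TOKENS = 1_000_000

# Approximate capacity of a one-million-token window, per the article.
CAPACITY = {
    "words": 700_000,
    "lines of code": 30_000,
    "seconds of audio": 11 * 60 * 60,  # 11 hours
    "seconds of video": 1 * 60 * 60,   # 1 hour
}


def implied_tokens_per_unit(window: int, capacity: dict[str, int]) -> dict[str, float]:
    """Divide the window size by each capacity figure, rounded to 2 decimals."""
    return {unit: round(window / count, 2) for unit, count in capacity.items()}


if __name__ == "__main__":
    for unit, rate in implied_tokens_per_unit(WINDOW_TOKENS, CAPACITY).items():
        print(f"{unit}: ~{rate} tokens each")
    # words: ~1.43 tokens each
    # lines of code: ~33.33 tokens each
    # seconds of audio: ~25.25 tokens each
    # seconds of video: ~277.78 tokens each
```

By this estimate, a second of video costs about eleven times as many tokens as a second of audio, which is why an hour of video fills the window while 11 hours of audio fit in the same space.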

Faster Response Time

Gemini 1.5 Pro is built on a Mixture-of-Experts (MoE) architecture layered on Transformer research, which makes it more efficient to train and serve. While a traditional Transformer functions as one large neural network, an MoE model is divided into smaller "expert" neural networks.

For each input, an MoE model activates only the most relevant expert pathways instead of the entire network, which avoids wasting compute. Because only part of the model does work on any given input, it can respond faster without sacrificing output quality.

As a result, Gemini 1.5 Pro can find answers and generate text-based content more quickly, leading to greater efficiency and productivity.
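To make the routing idea concrete, here is a toy Mixture-of-Experts layer in Python with top-k gating. It is a generic sketch of the MoE technique, not Gemini's actual architecture: the four experts, the linear router weights, and `k=2` are all hypothetical, and each expert is a simple scalar function standing in for a neural network.

```python
import math
from typing import Callable

# Toy Mixture-of-Experts (MoE) layer with top-k gating.
# Generic illustration only, NOT Gemini's architecture: the experts,
# router weights, and k used below are hypothetical.

Expert = Callable[[float], float]


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float, experts: list[Expert], router_weights: list[float], k: int = 2
) -> tuple[float, list[int]]:
    """Route x to the k best-scoring experts and blend their outputs.

    Returns the combined output and the indices of the experts that ran.
    """
    # Hypothetical linear router: one score per expert, computed from the input.
    scores = [w * x for w in router_weights]
    # Keep only the k experts with the highest scores.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    gates = softmax([scores[i] for i in top])
    # Only the selected experts are evaluated; the others do no work.
    output = sum(g * experts[i](x) for g, i in zip(gates, top))
    return output, top


if __name__ == "__main__":
    # Four hypothetical "experts", each a stand-in for a small network.
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
    router = [0.1, 0.5, 0.3, -0.2]
    out, used = moe_forward(3.0, experts, router, k=2)
    print(used)  # [1, 2]
    print(round(out, 3))
```

In this run only experts 1 and 2 are evaluated; experts 0 and 3 are skipped entirely. Typical MoE Transformers apply the same idea to each token, with vector inputs and a learned router, which is where the compute savings come from.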

Superior Coding Abilities

If you use Gemini for coding, Gemini 1.5 Pro is the better choice. Its larger context window lets it take in far more code at once, which helps it write reliable code quickly.

Gemini 1.5 Pro can also reason over much larger blocks of code than the previous model. Besides helping you write better code, it can explain how different sections of a program work and suggest useful modifications. This makes it an excellent choice for developers.

Enhanced Learning And Reasoning Capabilities

Gemini 1.5 Pro is much better at retaining information and reasoning across text, images, audio, and video. Because it can interpret very large inputs, you can use it to find specific details buried in long videos, audio recordings, and text documents.

It can also learn from information supplied in the prompt, such as reference material for a new language, without needing to be retrained, and it handles multiple languages more easily. And because it can locate and recall details from very large inputs, it performs well on reasoning tasks that depend on them.

The enhanced reasoning and recall capabilities make Gemini 1.5 Pro suitable for a wide variety of purposes, such as academic research, content creation, and code analysis.

Improved Handling Of Audio And Visual Tasks

As explained above, Gemini 1.5 Pro interprets information from images and videos better than the older model. It can combine images with text while understanding the context of the different elements in an image.

This makes it a good choice for generating text from visual data with minimal effort. With its improved image analysis, the model can recognize and categorize objects, understand how they relate to each other, and extract information from still images.

Similarly, the newer model's video analysis is much more advanced: it can recognize patterns in a video, predict outcomes, and track changes. Gemini 1.5 Pro can comprehend events, actions, and, to a certain extent, even emotions. As a result, it can analyze video more accurately than Gemini 1.0.

As for audio, Gemini 1.5 Pro can understand and transcribe speech with fewer errors than earlier models. Accuracy stays high even on long recordings, and it can translate from one language to another while preserving context and meaning.

What Can You Do With Gemini 1.5 Pro?

Gemini 1.5 Pro lets you do many things that aren't possible with the older model. Here are a few examples; developers and businesses with preview access can experiment with them right away:

- Instead of only short articles, Gemini 1.5 Pro can read entire books and other long-form content. Since it handles large, complex documents easily, you can ask it to analyze different sections and answer questions about them.

- Have it watch an entire film (up to about an hour with the one-million-token window) and get a detailed analysis of each scene. With Gemini 1.0, this was only possible for short clips. For instance, you can ask about a character's motivations or the symbolism in a scene.

- Have it listen to long audio recordings and pull information from them. Gemini 1.0 could only produce concise notes from short audio clips, whereas the updated model can work through long lectures, summarize complicated ideas, and even produce detailed transcripts.

- With a better recall capability, you can ask Gemini to answer questions about topics that were discussed earlier in the conversation. This ability can come in quite handy when looking up information on multiple topics.

- Using the information obtained from different sources, the AI model can even be used to generate creative content like scripts or poems. Creative fields can benefit a lot from the enhanced capabilities of Gemini 1.5 Pro.

- Gemini 1.5 Pro can help you write better code because it can take in an entire program instead of just a few lines. You can also ask it for suggestions, use it to identify bugs, and generate code snippets.

Gemini 1.5 Pro comes with several improvements over the previous version that make it a fantastic tool for almost everyone. Now that Google's AI can compete directly with GPT-4-powered ChatGPT, it is likely to become more popular in everyday use once Google releases it more widely.