Google’s Gemini AI now brings a suite of “key actions” directly to Android and iOS devices, changing how users interact with their phones and tablets. These shortcuts—ranging from home screen widgets to screen-aware conversations and custom Gems—are designed to reduce friction, automate repetitive steps, and speed up everything from sharing files to brainstorming new ideas.

Using Gemini’s Home Screen and Lock Screen Widgets

Home screen widgets offer the fastest way to trigger Gemini’s most popular features. On Android, pressing and holding an empty area of the home screen and tapping “Widgets” reveals the Gemini options. These include direct shortcuts for opening the camera, sharing a file or image, and starting a new chat. These widgets can be resized or removed as needed, giving quick access to each action without opening the full app.

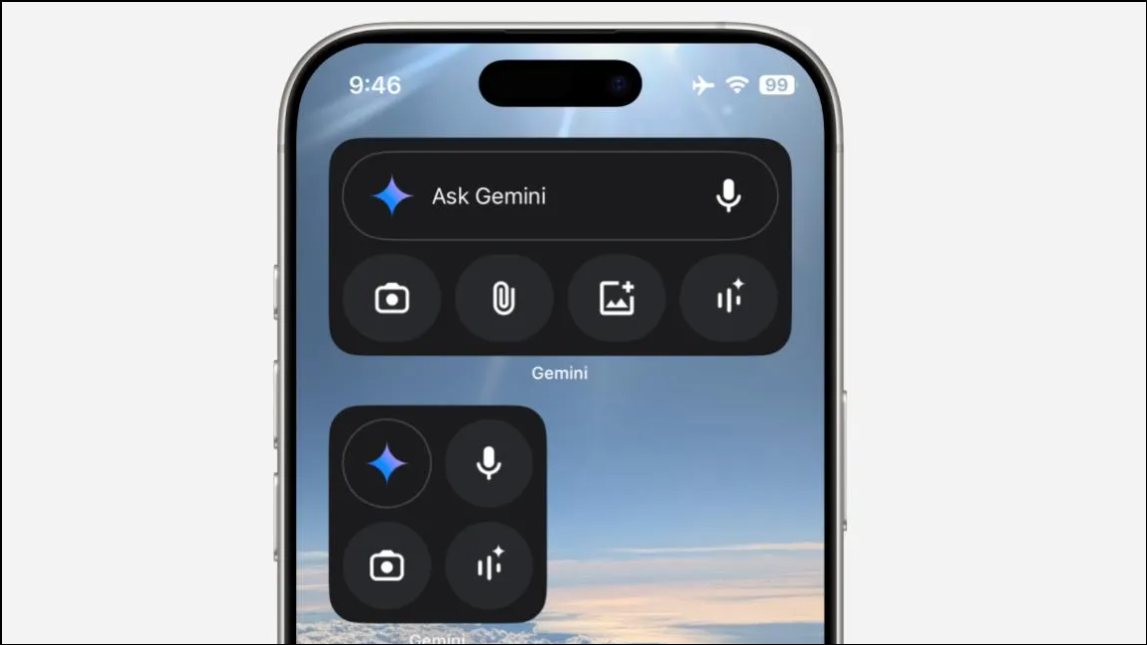

iOS users can add Gemini widgets by long-pressing the home screen and tapping “Edit,” then “Add Widget.” Each widget can be customized by assigning it a specific action, such as launching a new chat, sharing an image, or starting a live conversation. Recent updates also brought Gemini widgets to the lock screen, giving quick access to tools such as Type Prompt, Talk Live, Open Mic, Use Camera, Share Image, and Share File even when the device is locked. This streamlines common tasks, such as sending a quick message or uploading a document, without navigating through menus.

Screen Actions: Context-Aware Shortcuts for Smarter Conversations

Gemini’s “screen actions” use the content currently displayed on your device to suggest relevant shortcuts. When Gemini is activated over an app, webpage, or file (like a PDF or YouTube video), it displays suggestion chips above the chat box. Tapping these chips uploads the visible content—such as a webpage’s URL or a file’s contents—to Gemini, enabling context-rich queries like “summarize this article” or “explain this chart.”

For example, activating Gemini over a research paper lets you immediately ask for a summary or key points, while opening it over a foreign-language document lets you request a translation. The “Ask about…” chip prompts Gemini to analyze the visible screen or file, while “Talk Live” starts a real-time conversation that uses the displayed content as context. This removes the need for manual uploads or copy-pasting and speeds up research, troubleshooting, and creative brainstorming.

Long-pressing a suggestion chip lets you toggle auto-submit on or off. This gives you control over what is shared with Gemini, which matters for privacy and security.

Custom Gems: Personalized AI Assistants for Repetitive and Specialized Tasks

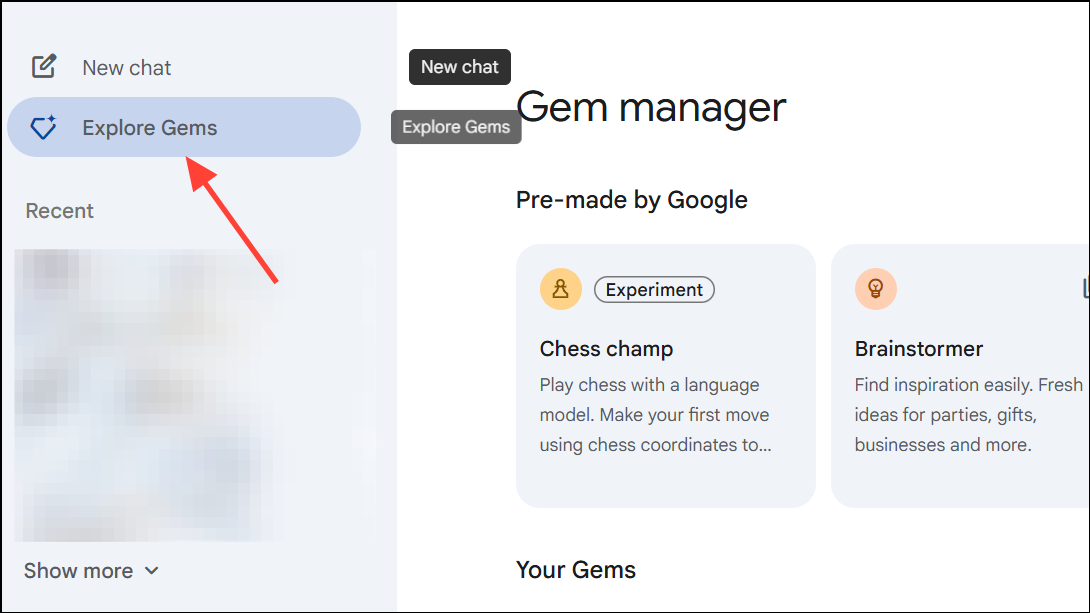

Gemini’s “Gems” feature lets users create custom AI assistants tailored to their workflows. Unlike a general-purpose chatbot, a Gem follows instructions you set for tone, response length, and even workflow steps. Google offers templates like Brainstormer, Career Guide, Coding Partner, Learning Coach, and Writing Editor, but users can also build their own Gems for tasks such as automated report generation, recurring data analysis, or creative writing prompts.

To access Gems, Android users open the Gem manager from the account menu, while web users find it in the side panel at gemini.google.com. Customization, such as uploading reference files or writing specialized instructions, is currently limited to the web, but once created, a Gem can be used across platforms. This makes it possible to automate repetitive steps such as reviewing meeting notes, extracting key insights from documents, or generating consistent email drafts with a single tap or command.

Gemini Live: Real-Time Analysis and Visual Collaboration

On devices such as the Galaxy S25 series, Gemini Live brings voice-driven, real-time interaction to the forefront. Pressing and holding the side button launches Gemini Live, which can analyze images, documents, and even whiteboard snapshots during the conversation. For instance, if you upload a photo of a complex chart and ask Gemini to break down the data, it immediately explains what the chart shows. Creative professionals can upload design mockups for feedback or suggestions, while students can use it to translate and understand textbook pages on the fly.

Gemini Live can also help organize visual research by categorizing and summarizing uploaded images. This makes it easier to manage inspiration boards or project references without sorting them by hand. This kind of real-time, context-aware interaction is useful for both work and personal tasks.

Everyday Productivity: Writing, Summarizing, and Multitasking

Gemini’s AI capabilities extend to everyday productivity. Users can ask for quick summaries of emails, articles, or PDFs, generate images on demand, and get help with grammar, translation, or brainstorming. On Android, saying “Hey Google” while Gemini is active enables voice-activated actions tied to what’s on the screen, such as summarizing a webpage or extracting data from an image. The app’s integration with Google Maps and Google Flights also streamlines trip planning and logistics, while screen-aware actions take the repetition out of research and reporting tasks.

For content creators, Gemini can analyze videos, suggest thumbnail designs, and recommend trending topics by scanning YouTube search results. Technical users can troubleshoot device issues or get step-by-step software guidance by sharing their screen with Gemini and describing the problem.

Google Gemini’s key actions (widgets, screen actions, custom Gems, and Gemini Live) change how users interact with their devices by cutting out steps, automating context-aware tasks, and making multitasking easier. As new features roll out, expect further productivity gains and creative possibilities from your phone’s AI assistant.