While many browsers are adding AI features, Opera has become the first major browser to offer built-in access to local AI models. Opera is adding experimental support for around 150 local large language model (LLM) variants from approximately 50 model families to its Opera One browser.

Because the models run locally on your computer, your prompts and data aren't sent to a remote server for processing. That makes this a more private way to use AI.

To use local LLMs, you'll need 2 to 10 GB of free space per model, depending on the variant you choose. Supported models include Meta's Llama, Microsoft's Phi-2, Google's Gemma, Vicuna, and Mistral AI's Mixtral, among others. You can use these models instead of Opera's own Aria AI and switch back to Aria whenever you want.
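If you're not sure your drive can hold a model, you can check the free space before downloading anything. The short Python sketch below is not part of Opera; it only uses the standard library's `shutil.disk_usage` to compare the free space on a drive with the 10 GB upper bound mentioned above. The function name `has_room_for_models`, the default path `"."` (the drive of the current directory), and treating 1 GB as 1024³ bytes are our own assumptions for illustration, so point `path` at whichever drive you expect Opera to use.

```python
import shutil

# Upper bound per model variant, as quoted in this article (2 to 10 GB).
MAX_MODEL_SIZE_GB = 10


def has_room_for_models(path: str = ".", model_count: int = 1,
                        size_gb: float = MAX_MODEL_SIZE_GB) -> bool:
    """Return True if the drive containing `path` has at least
    `model_count * size_gb` GB free.

    Assumption: 1 GB is treated as 1024**3 bytes, which slightly
    overestimates the space needed and so errs on the safe side.
    """
    free_bytes = shutil.disk_usage(path).free
    needed_bytes = model_count * size_gb * 1024**3
    return free_bytes >= needed_bytes


if __name__ == "__main__":
    # Check room for one, two, or three models at the 10 GB worst case.
    for n in (1, 2, 3):
        ok = has_room_for_models(".", n)
        print(f"Room for {n} model(s) at {MAX_MODEL_SIZE_GB} GB each: {ok}")
```

Using the worst-case size is deliberately conservative; if the model you pick shows a smaller size in Opera's settings, pass that value as `size_gb` instead.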

The feature is currently only available in the developer stream of Opera One. Here's how you can activate and use the local AI models in Opera One Developer.

Download Opera One Developer

If you already have Opera One Developer, skip this section and go straight to setting up a local AI model.

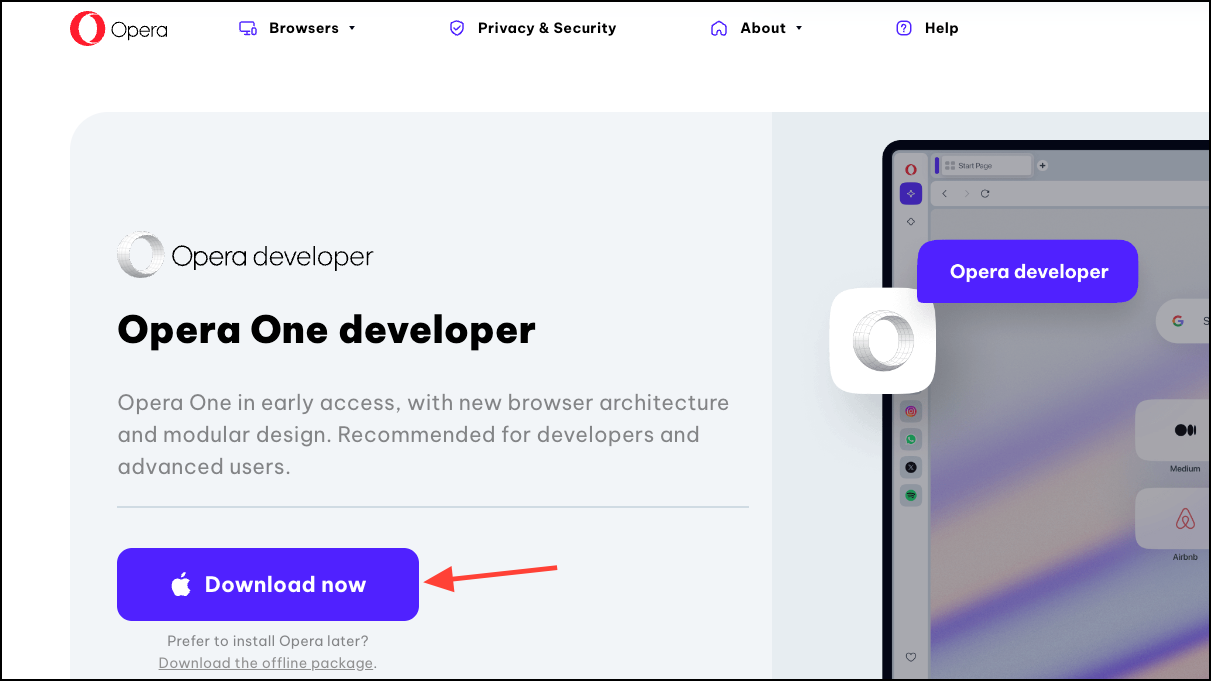

- Go to this link to download Opera One Developer. Scroll down and click 'Download Now' on the Opera One Developer tile.

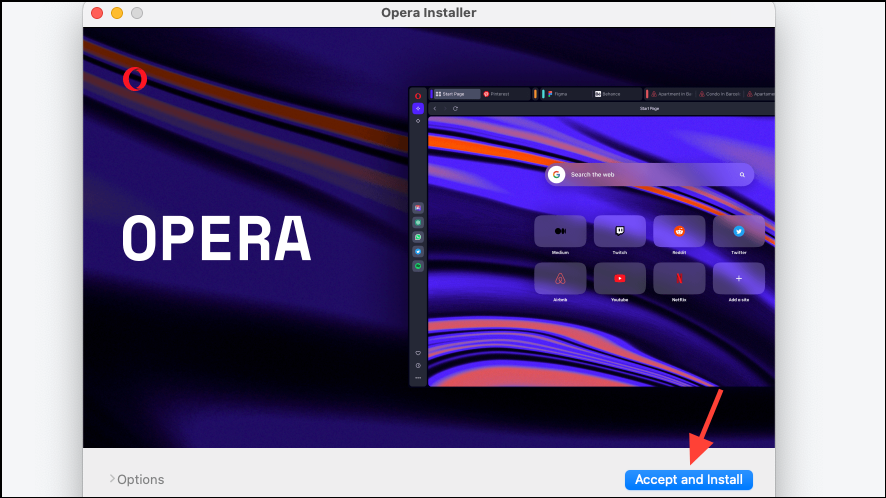

- Open the file once it downloads and run the installer.

- Click on 'Accept and Install' and then follow the instructions on your screen to install the browser.

Set Up Local AI Model

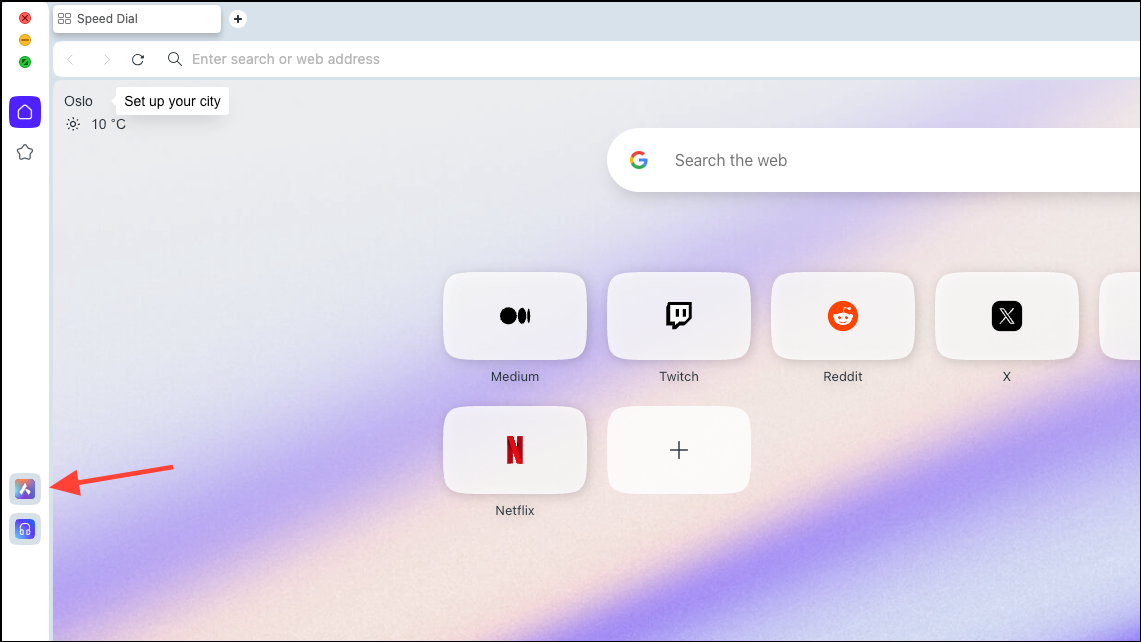

- Once the browser is installed, click the 'Aria AI' icon in the left sidebar.

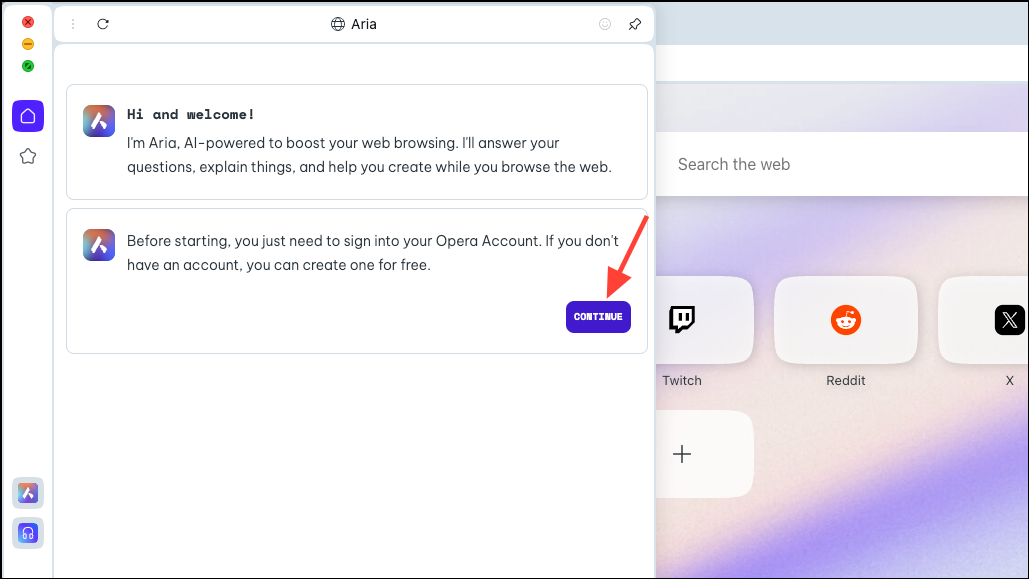

- Click on 'Continue' to proceed.

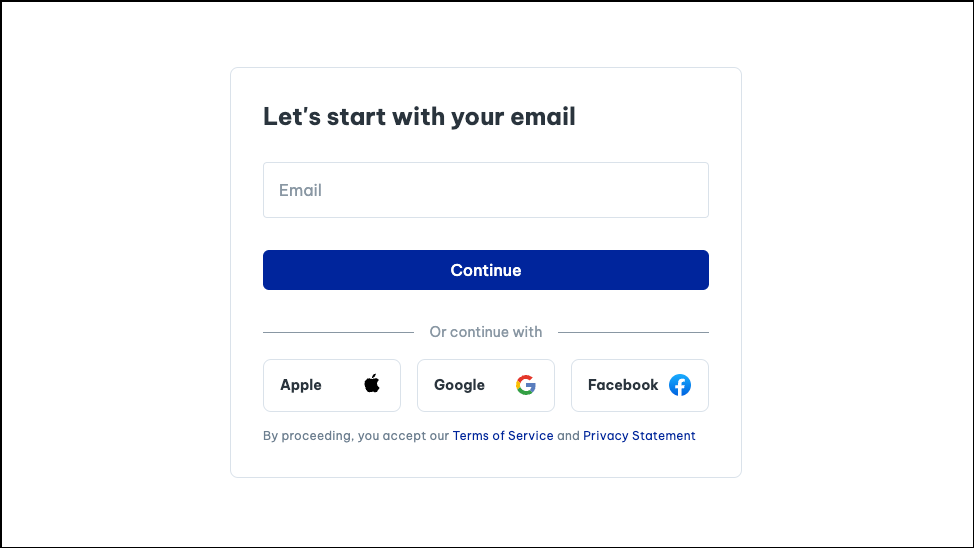

- If you're using Aria for the first time, you'll need to sign up for a free Opera account to use it. You can use your email or your Apple, Facebook, or Google account to create an Opera account.

- Once your account is created, you'll be able to chat with Aria.

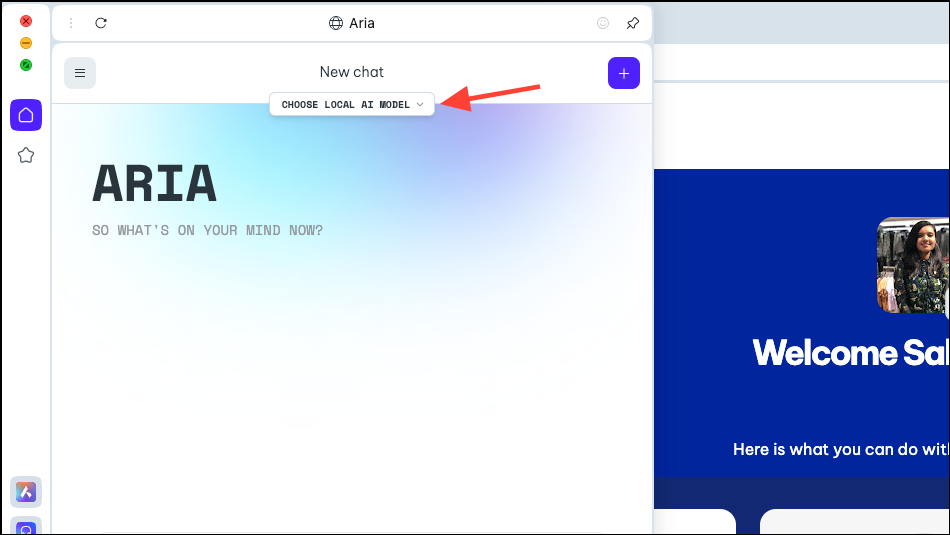

- In the Aria panel, click the 'Choose Local AI Model' option.

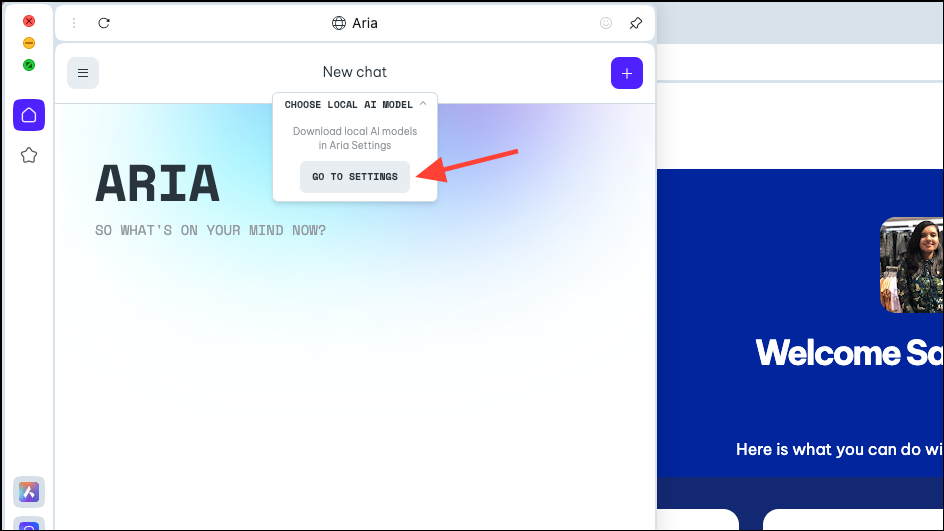

- Select 'Go to Settings' from the drop-down menu.

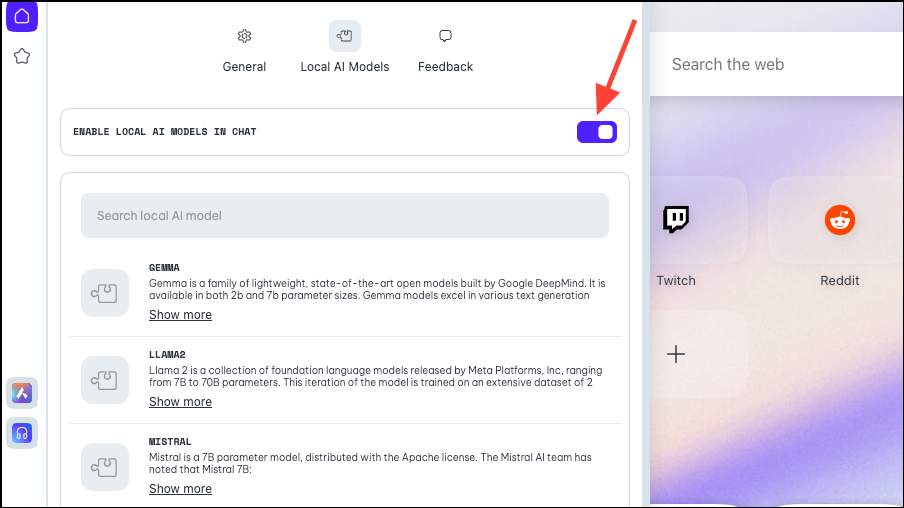

- Make sure the 'Enable Local AI in Chats' toggle is turned on.

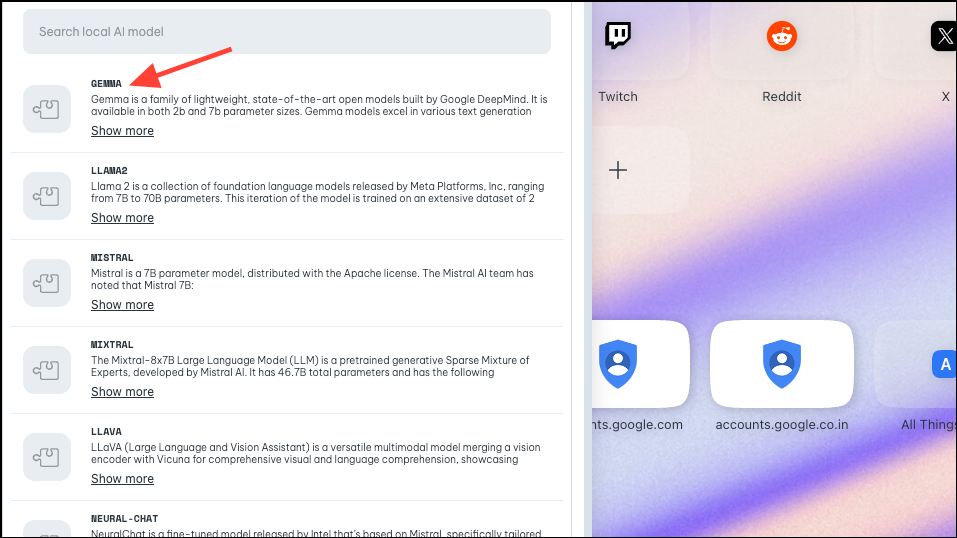

- Next, browse or search for the model you want to download for local use. As mentioned above, Opera supports approximately 50 model families, each with several variants, for around 150 models in total.

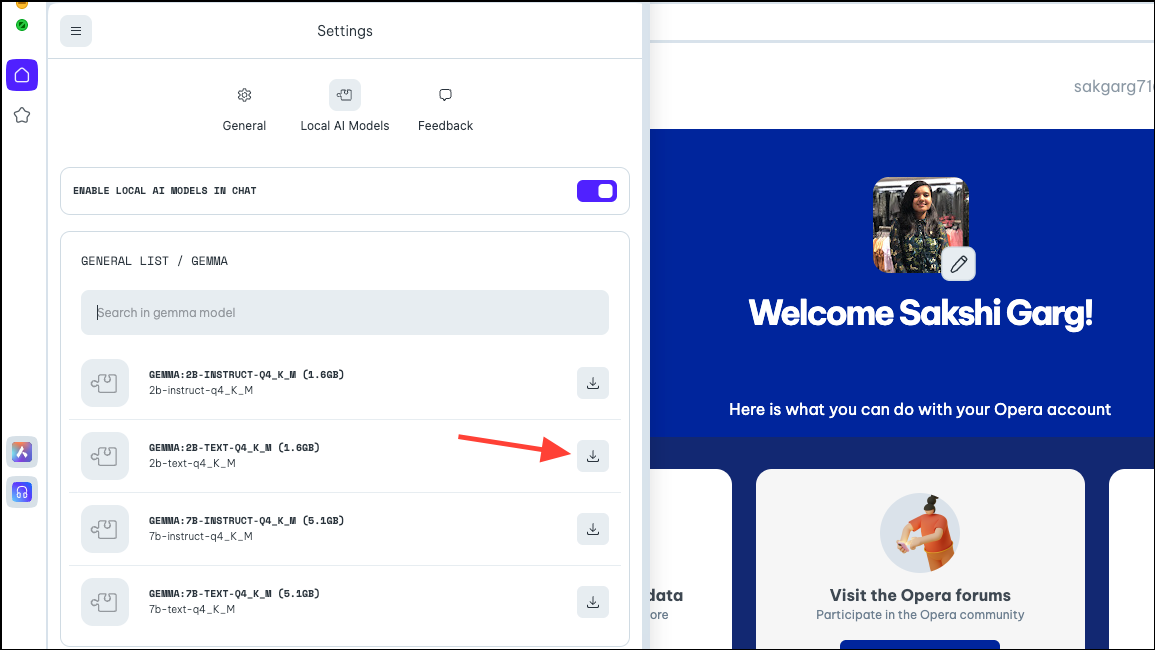

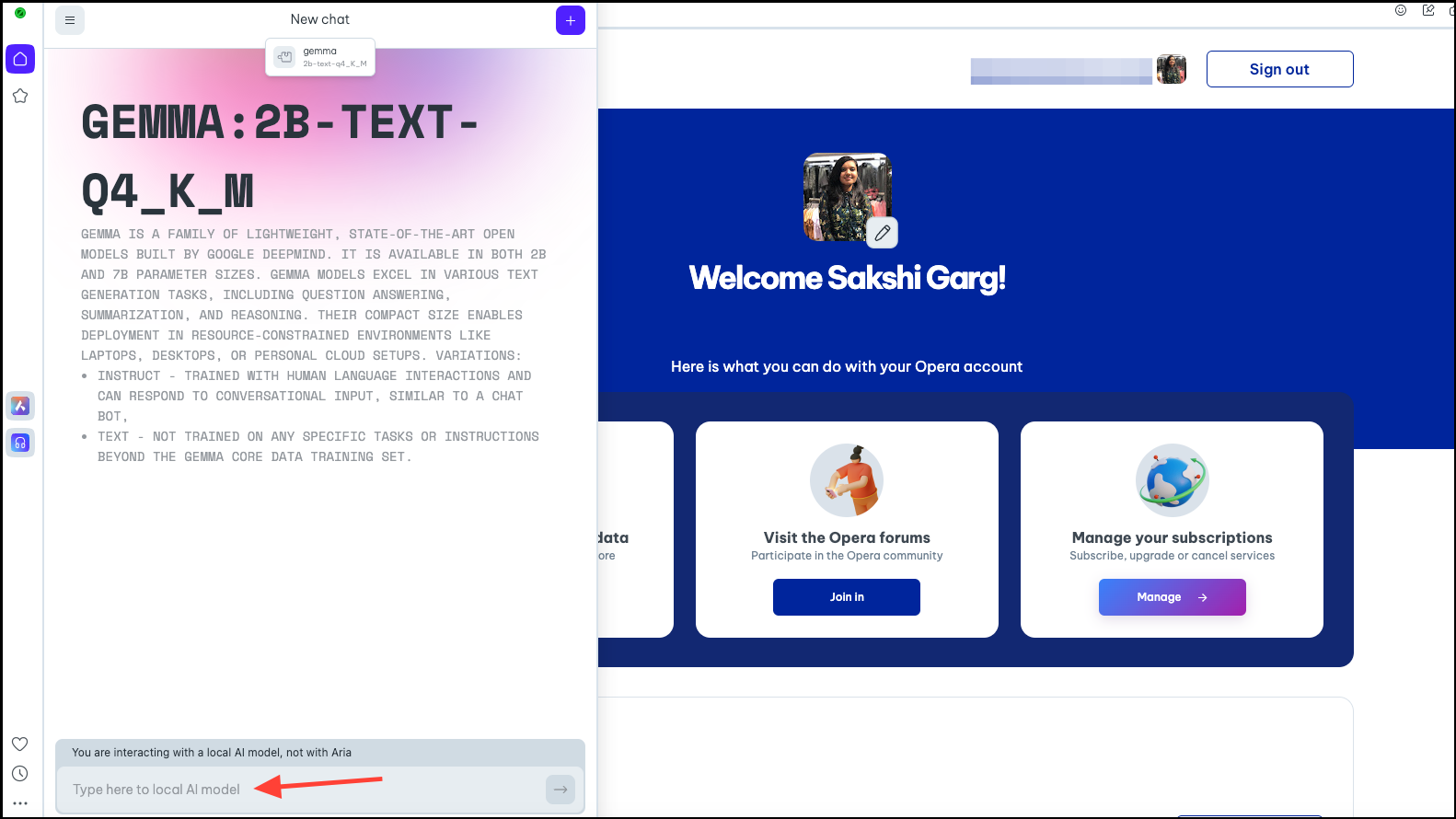

- Some models come in several variants. Clicking Google's Gemma, for example, shows four. Download the variant you want to use.

For this guide, we're downloading Gemma's 2B Text model. Click the 'Download' button on the right. The space the model will take up on your PC is shown before you download it.

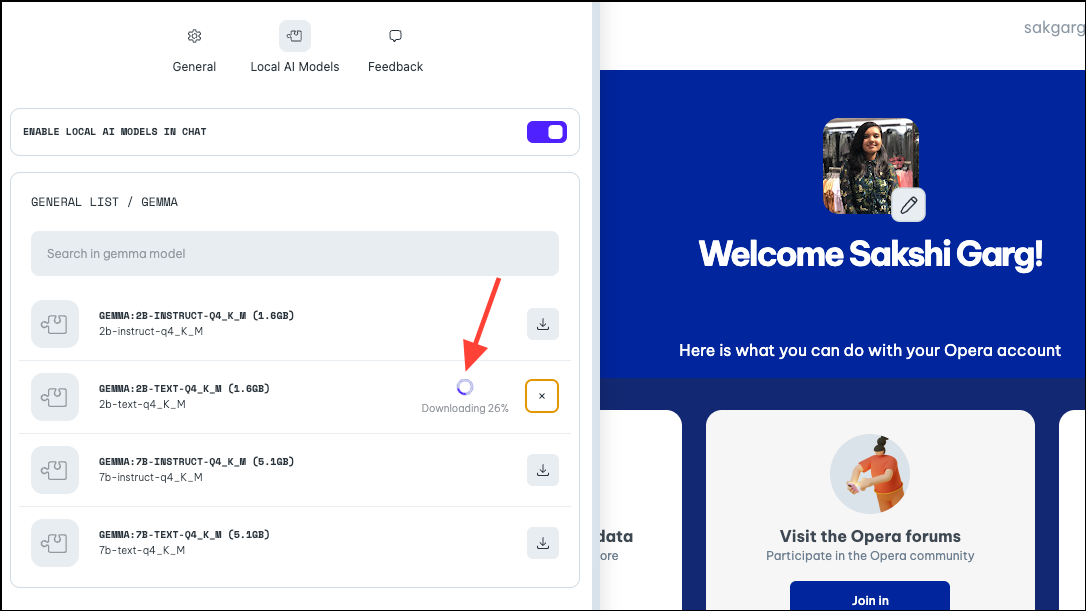

- The download may take a while, depending on the model's size and your internet connection. Its progress is shown next to the model.

Using the Local AI Model

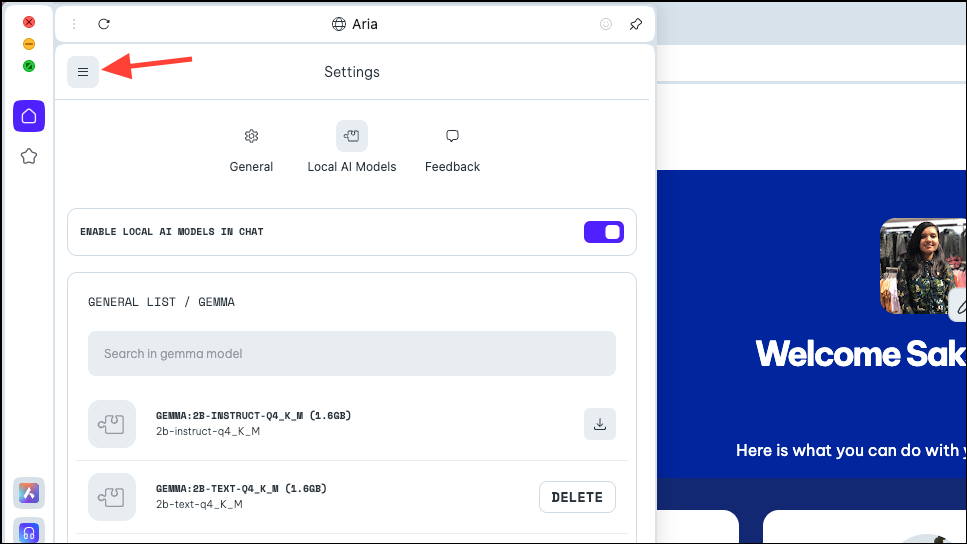

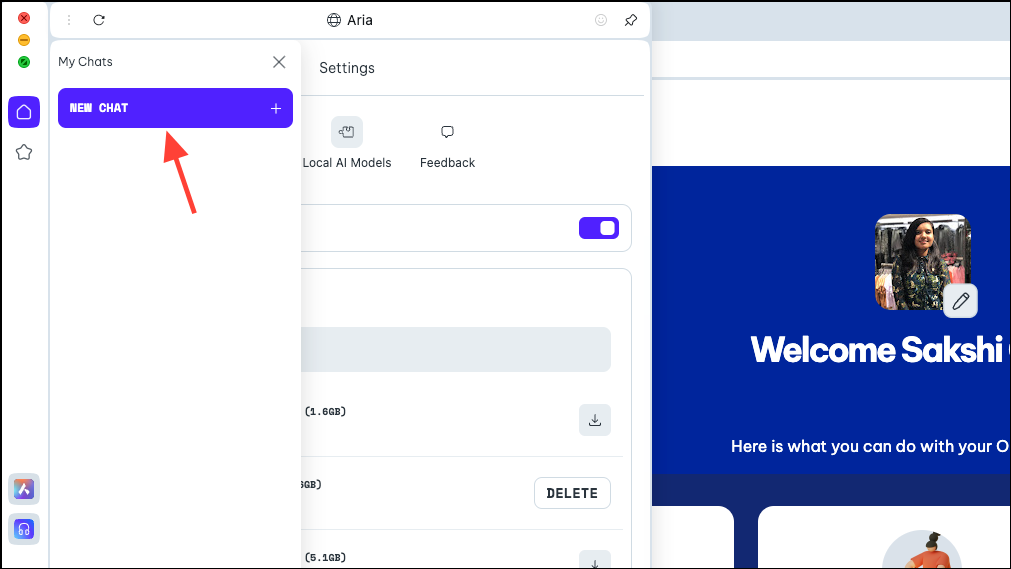

- Once the model is downloaded, click the 'Menu' icon in the top-left of the panel.

- Select 'New Chat' from the menu that appears.

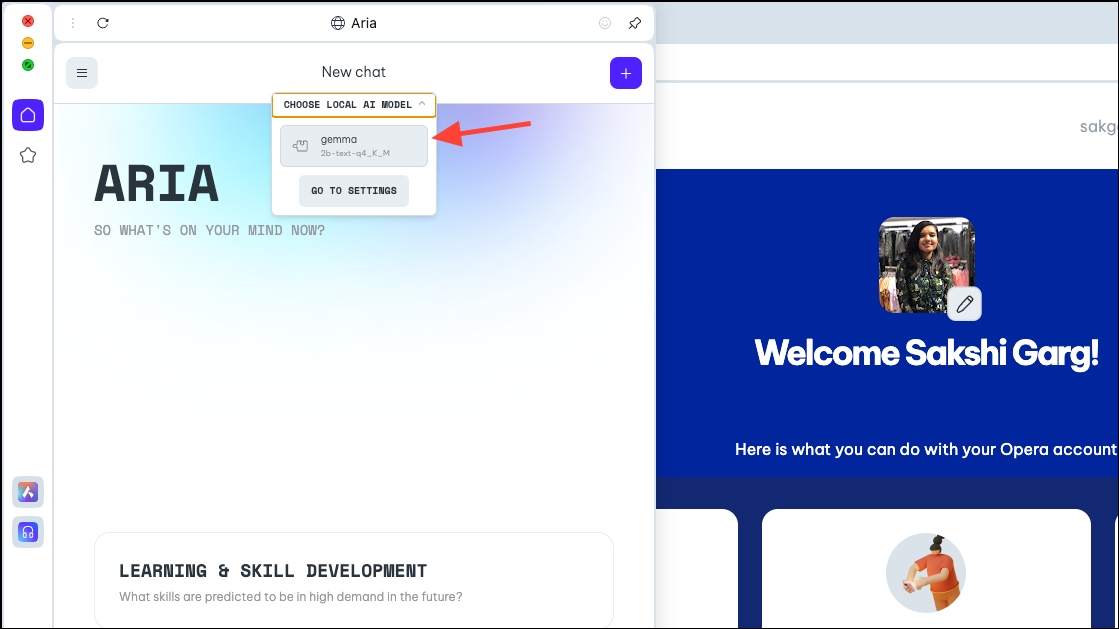

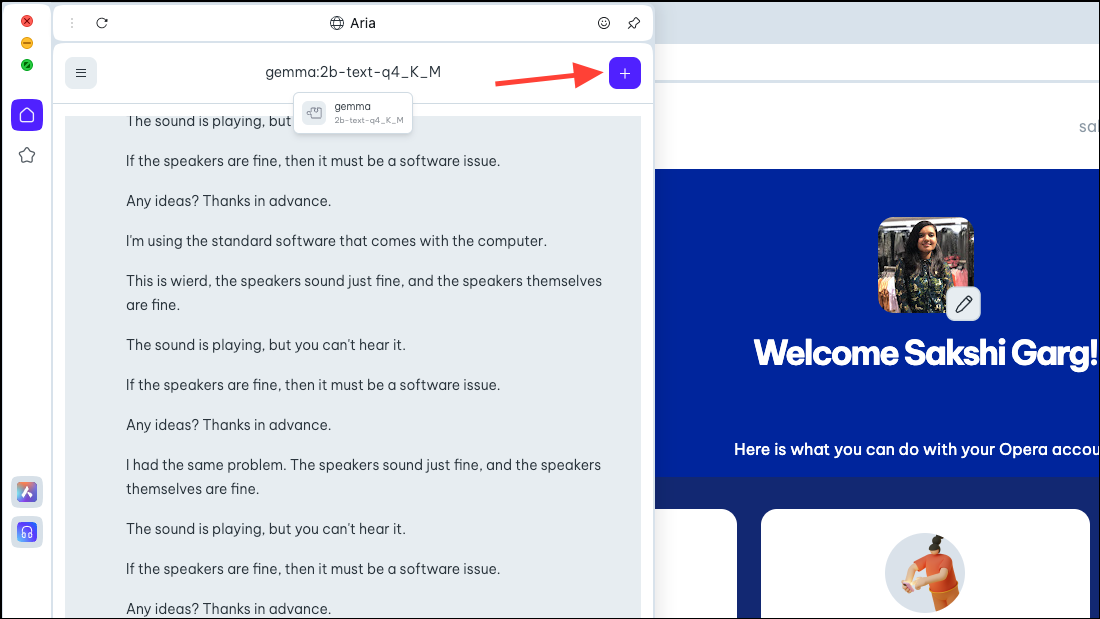

- Again, click the 'Choose Local AI Model' option at the top and select the model you just downloaded, Gemma in our case, from the drop-down menu.

- The chat now uses the local AI model you selected. Type your prompt in the prompt bar and send it to start chatting with the model locally.

- Your chats with the Local AI model will be available in the chat history, just like your chats with Aria are. You can also rename the chats for better organization.

- You can download and use multiple local AI models the same way, but remember that each one takes up 2 to 10 GB of space on your computer.

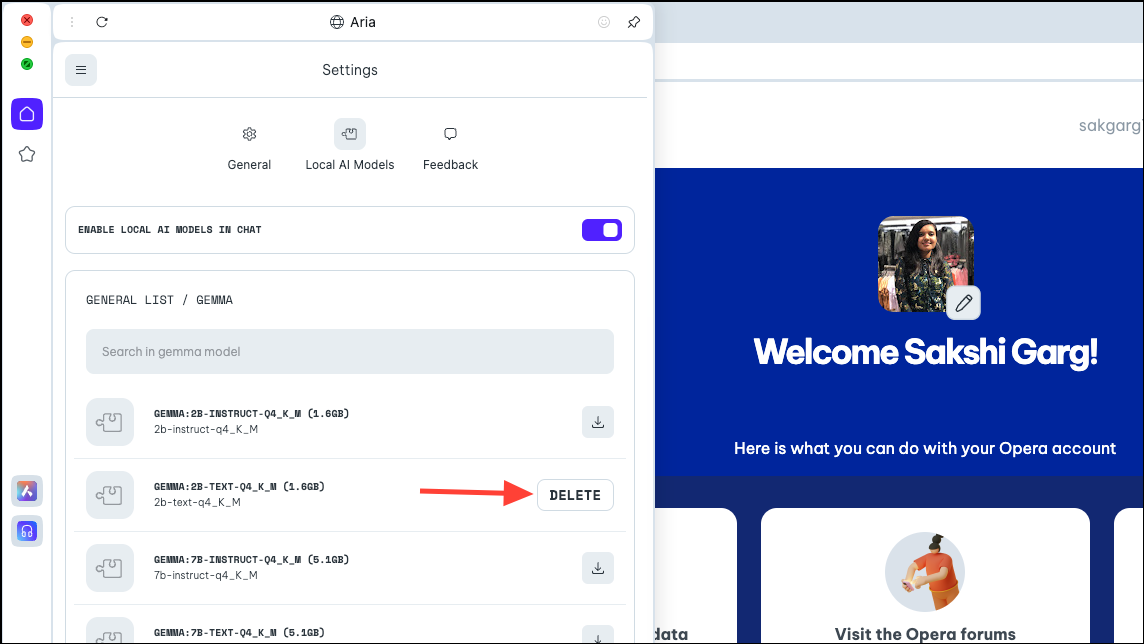

- To delete a model from your PC, go back to the settings page where you downloaded it (Aria > Settings > Local AI Models > [Downloaded Model]) and click the 'Delete' button.

Switch Back to Aria

- To switch back to Aria, start a new chat by clicking the '+' icon in the top-right corner.

- The new chat will start with Aria itself.

Being able to use local AI models in Opera One is exciting, and not just from a security and privacy standpoint. The feature is very simple at this early stage, but the potential future uses are promising. A browser with a local AI could, for example, draw on your past input and the rest of your data to give more personal answers, and the team at Opera is exploring that possibility.

Even setting those future possibilities aside, local AI is a welcome option for users who worry about their data and AI chats being stored on remote servers. There are some strong LLMs to explore, such as Llama (including its coding-focused Code Llama variant) for coding, Phi-2 for its strong reasoning capabilities, and Mixtral for tasks like text generation and question answering.