If you want to run large language models (LLMs) on your computer, one of the easiest ways to do so is through Ollama. Ollama is a powerful open-source platform that offers a customizable and easily accessible AI experience. It makes it easy to download, install, and interact with various LLMs without relying on cloud-based platforms or needing much technical expertise.

In addition to the above advantages, Ollama is quite lightweight and is regularly updated, which makes it quite suitable for building and managing LLMs on local machines. Thus, you do not need any external servers or complicated configurations. Ollama also supports multiple operating systems, including Windows, Linux, and macOS, as well as various Docker environments. Read on to learn how to use Ollama to run LLMs on your Windows machine.

Downloading and installing Ollama

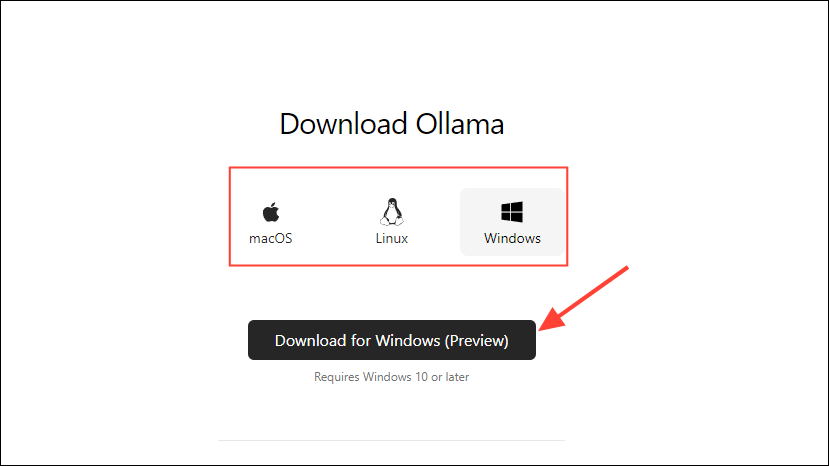

- First, visit the Ollama download page and select your OS before clicking on the 'Download' button. Alternatively, you can download Ollama from its GitHub page.

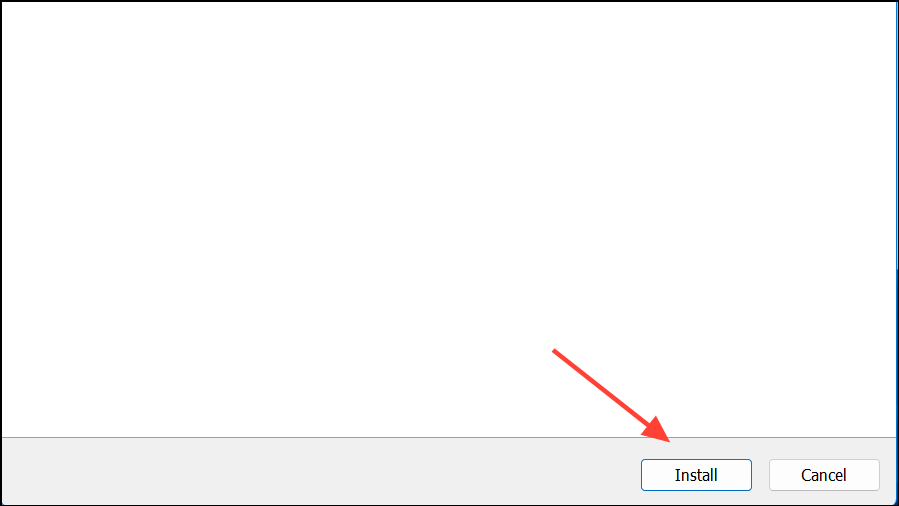

- Once the download is complete, open it and install it on your machine. The installer will close automatically after the installation is complete.

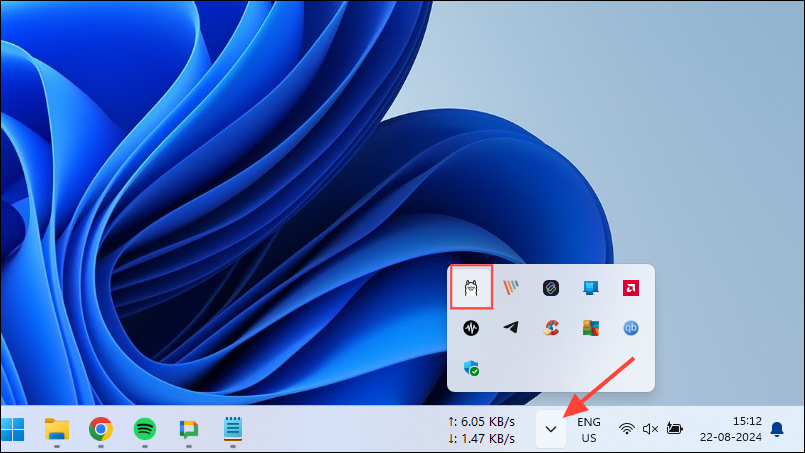

- On Windows, you can check whether Ollama is running or not by clicking on the taskbar overflow button to view hidden icons.
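If you prefer a scripted check over looking for the tray icon, the following is a minimal sketch. It assumes Ollama's local server is listening on its default address, `127.0.0.1:11434`, which this article does not otherwise cover; the function name `is_ollama_running` is made up for illustration.

```python
import urllib.request


def is_ollama_running(host: str = "127.0.0.1", port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at host:port.

    Assumption: Ollama's local server uses port 11434 by default.
    """
    url = f"http://{host}:{port}/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Connection refused, timeout, or HTTP error: treat all as "not running".
        return False


if __name__ == "__main__":
    print("Ollama reachable:", is_ollama_running())
```

A `False` result only means nothing answered at that address; it does not say why, so the tray icon remains the simpler check on Windows.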

Customizing and using Ollama

Once Ollama is installed on your computer, the first thing you should do is change where it stores its data. By default, the storage location is C:\Users\%username%\.ollama\models, but since AI models can be quite large, your C drive can fill up quickly. To change the location:

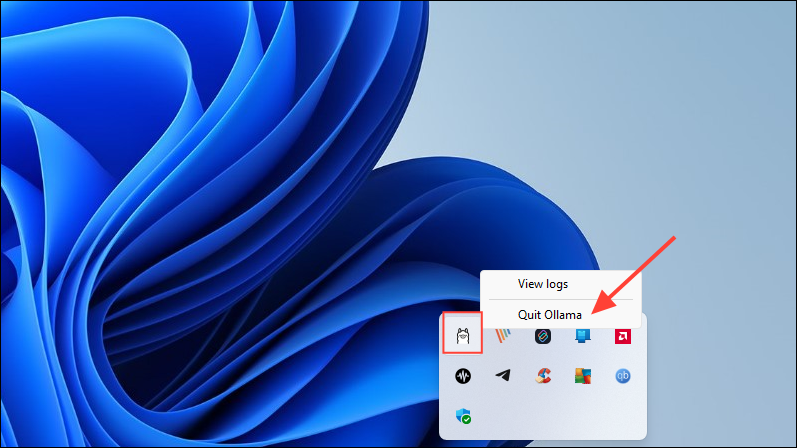

- First, click on the Ollama icon in the taskbar and click on 'Quit Ollama'.

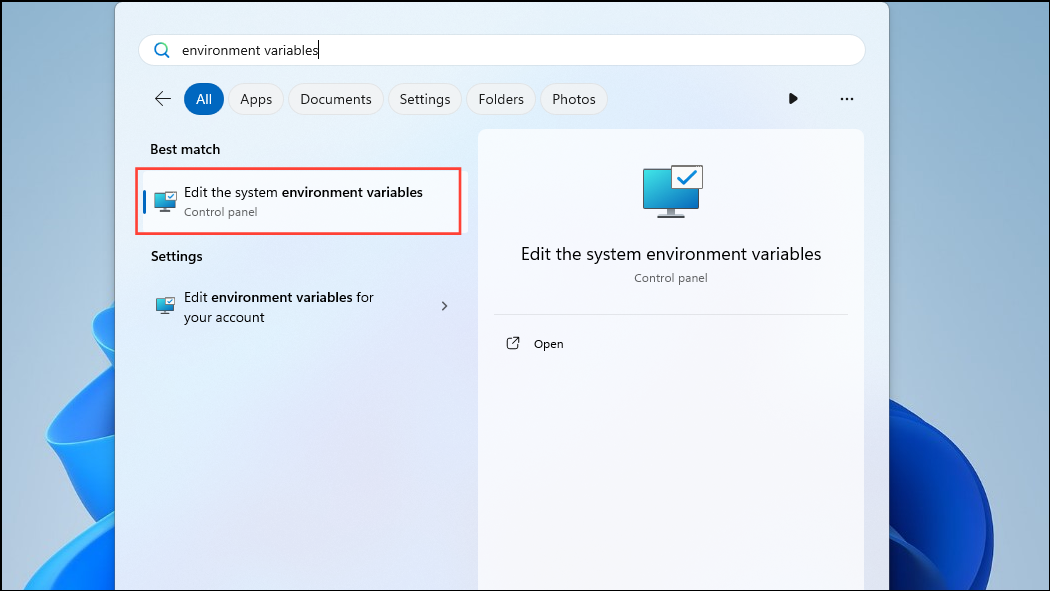

- Once Ollama has exited, open the Start menu, type `environment variables`, and click on 'Edit the system environment variables'.

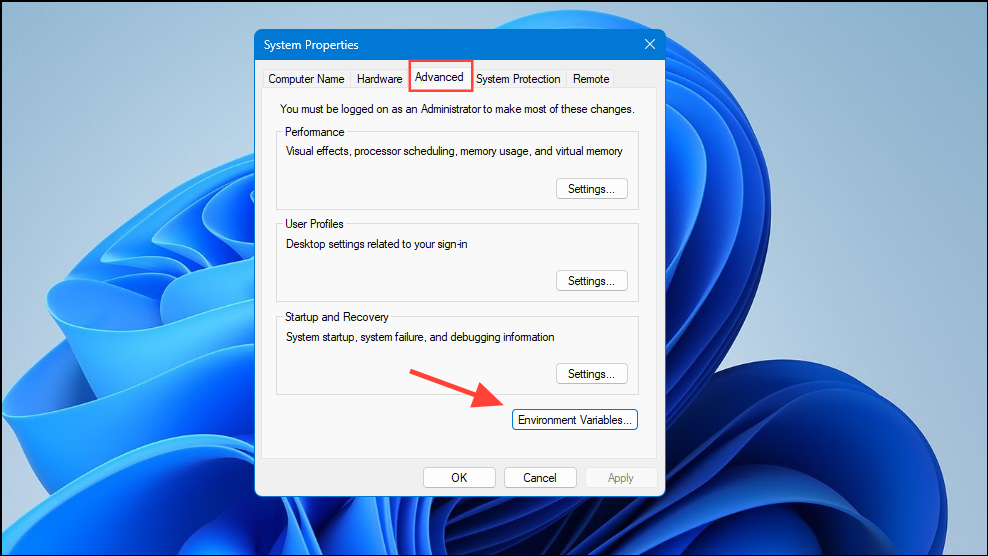

- When the System Properties dialog box opens, click on the 'Environment Variables' button on the 'Advanced' tab.

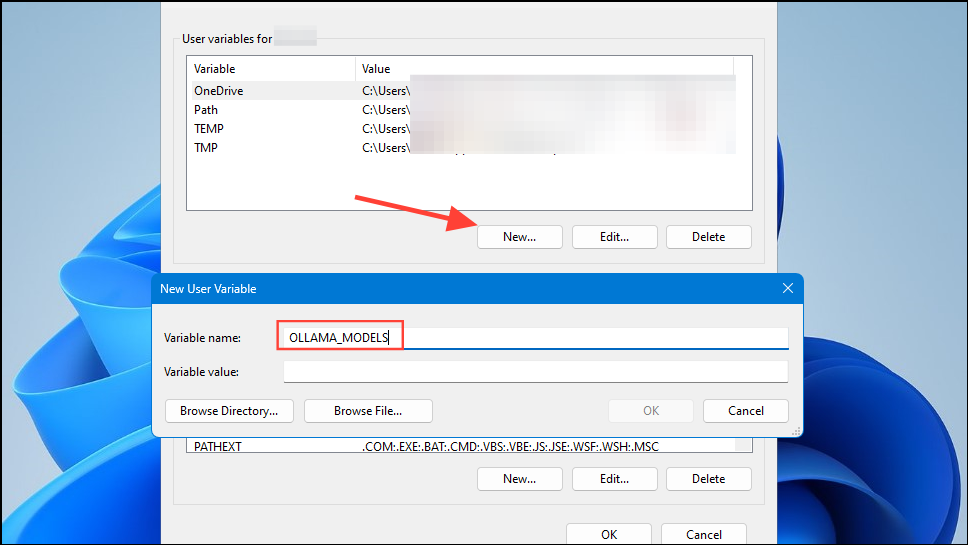

- Click on the 'New' button for your user account and create a variable named `OLLAMA_MODELS` in the 'Variable name' field.

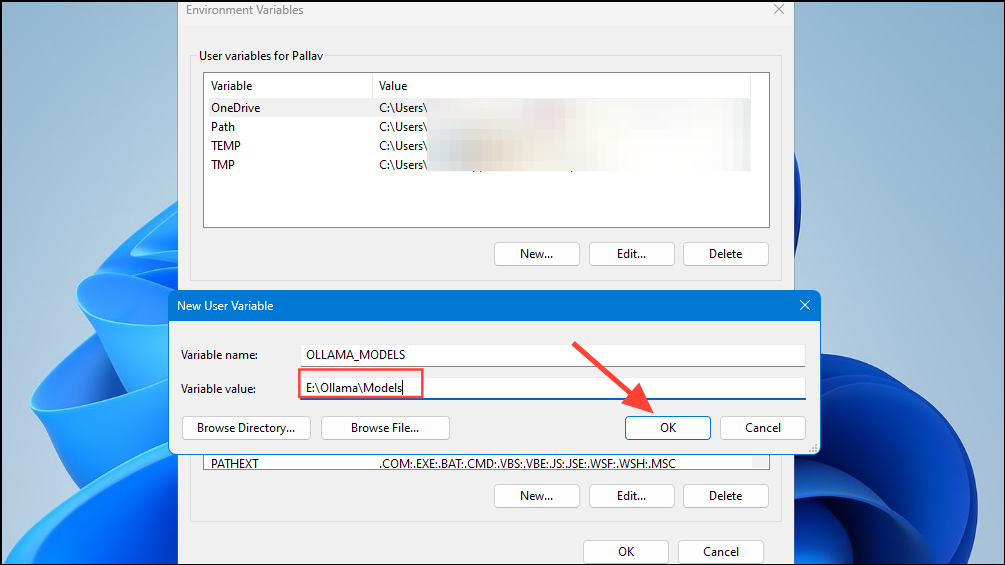

- Then type the location of the directory where you want Ollama to store its models in the 'Variable value' field and click on the 'OK' button. Finally, launch Ollama from the Start menu.

- Now you're ready to start using Ollama, and you can do this with Meta's Llama 3 8B, the latest open-source AI model from the company. To run the model, launch a Command Prompt, PowerShell, or Windows Terminal window from the Start menu.
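Before downloading anything, it can help to confirm where models will be stored. The sketch below mirrors the rule described in this section: use `OLLAMA_MODELS` if it is set, otherwise fall back to the `.ollama\models` folder in your user profile. It is an illustration of that rule, not Ollama's own code, and `resolve_models_dir` is a hypothetical helper name.

```python
import os
from pathlib import Path


def resolve_models_dir(env=None) -> Path:
    """Return the folder that models are expected to be stored in.

    Rule taken from the article: OLLAMA_MODELS wins when set and non-empty;
    otherwise the default is <user profile>/.ollama/models.
    """
    env = os.environ if env is None else env
    custom = env.get("OLLAMA_MODELS", "").strip()
    if custom:
        return Path(custom)
    return Path.home() / ".ollama" / "models"


if __name__ == "__main__":
    print("Models would be stored in:", resolve_models_dir())
```

If the printed path still points at your C drive after you set the variable, open a new terminal window so it picks up the updated environment.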

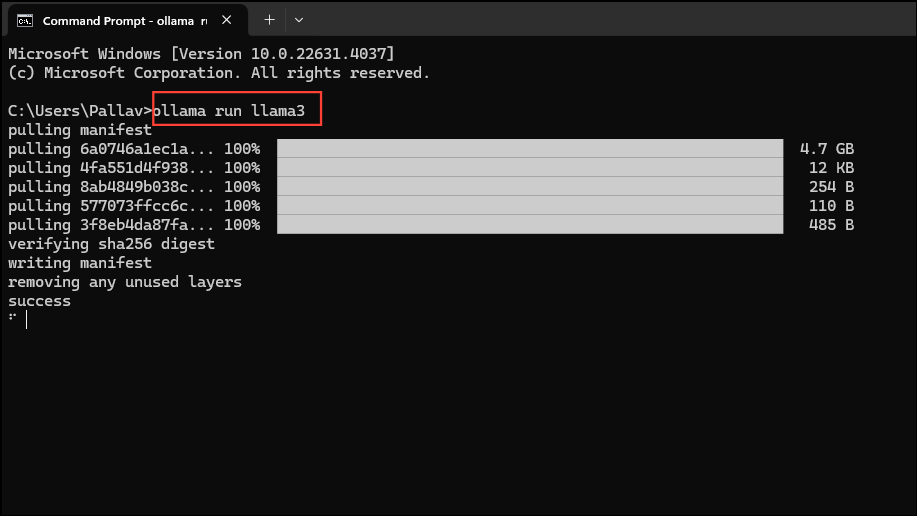

- Once the command prompt window opens, type `ollama run llama3` and press Enter. The model is close to 5 GB, so downloading it will take time.

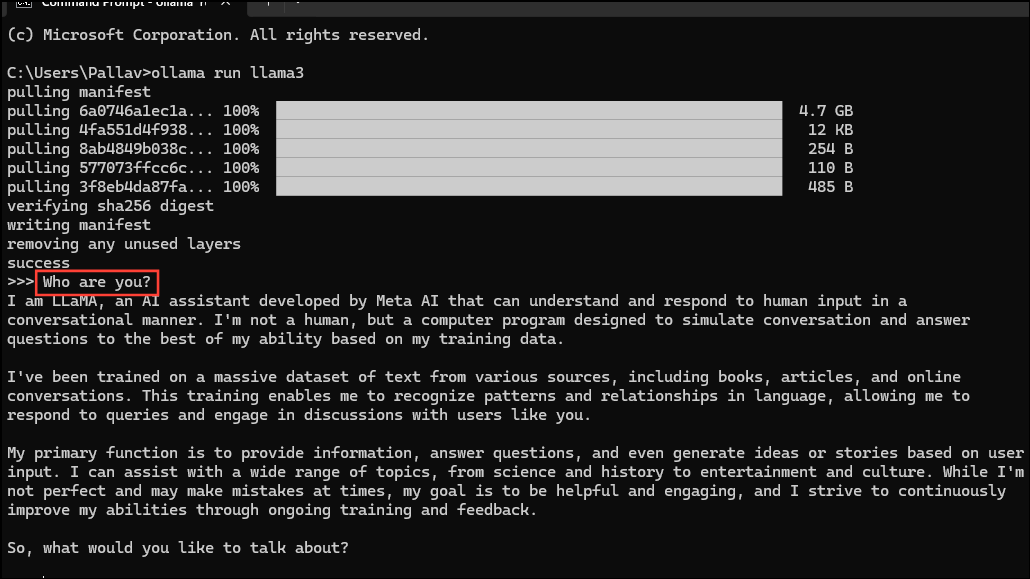

- Once the download completes, you can start using Llama 3 8B and converse with it directly in the command line window. For instance, you can ask the model `Who are you?` and press Enter to get a reply.

- Now, you can continue the conversation and ask the model questions on various topics. Just keep in mind that Llama 3 can make mistakes and hallucinate, so you should be careful when using it.

- You can also try out other models by visiting the Ollama model library page. Additionally, there are various commands that you can run to try out the different functionalities that Ollama offers.

- You can also perform various operations while running a model, such as setting session variables, showing model information, saving a session, and more.

- Ollama also lets you take advantage of multimodal AI models to recognize images. For instance, the LLaVA model can recognize images generated by DALL-E 3 and describe them in detail.
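The conversation steps above are interactive, but the same `ollama run` command can also be given a prompt as an extra argument for a one-off question. The following is a minimal sketch of calling it from a script; the helper names `build_run_command` and `ask` are hypothetical, and the sketch assumes `ollama` is on your PATH and the model has already been downloaded.

```python
import shutil
import subprocess


def build_run_command(model: str, prompt: str) -> list[str]:
    """Build the argument list for a one-shot `ollama run MODEL PROMPT` call."""
    return ["ollama", "run", model, prompt]


def ask(model: str, prompt: str) -> str:
    """Run the command and return whatever the model printed, as text."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("ollama was not found on PATH")
    result = subprocess.run(
        build_run_command(model, prompt),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()


if __name__ == "__main__":
    if shutil.which("ollama"):
        print(ask("llama3", "Who are you?"))
    else:
        print("Ollama is not installed or not on PATH.")
```

Passing the arguments as a list avoids shell quoting problems when the prompt contains spaces or quotation marks.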

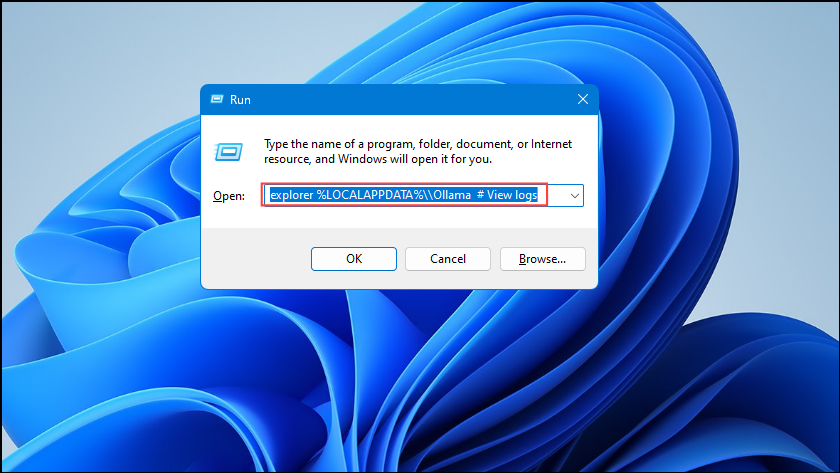

- If you're running into errors while running Ollama, you can check the logs to find out what the issue is. Use the `Win + R` shortcut to open the Run dialog, type `explorer %LOCALAPPDATA%\Ollama` inside it, and press Enter to view the logs.

- You can also use other commands like `explorer %LOCALAPPDATA%\Programs\Ollama` and `explorer %HOMEPATH%\.ollama` to check the binaries, model, and configuration storage location.
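The three folders mentioned above can also be listed from a script. This is a minimal sketch that expands the same `%LOCALAPPDATA%` and `%HOMEPATH%` variables used in the `explorer` commands; the function name and dictionary keys are made up for illustration, and the paths are only meaningful on Windows.

```python
import ntpath
import os


def ollama_locations(env=None) -> dict[str, str]:
    """Expand the folders named in the article using Windows path rules."""
    env = os.environ if env is None else env
    local_app_data = env.get("LOCALAPPDATA", "")
    home_path = env.get("HOMEPATH", "")
    return {
        "logs": ntpath.join(local_app_data, "Ollama"),
        "binaries": ntpath.join(local_app_data, "Programs", "Ollama"),
        "models_and_config": ntpath.join(home_path, ".ollama"),
    }


if __name__ == "__main__":
    for name, path in ollama_locations().items():
        print(f"{name}: {path}")
```

On other operating systems these variables are normally unset, so the output will contain only the trailing folder names.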

Things to know

- Ollama automatically detects your GPU to run AI models, but on machines with multiple GPUs, it can select the wrong one. To avoid this, open the Nvidia Control Panel and set the Display Mode to 'Nvidia GPU Only'.

- The Display Mode option may not be available on every machine, and it is also absent when you connect your computer to external displays.

- On Windows, you can check whether Ollama is using the correct GPU using the Task Manager, which will show GPU usage and let you know which one is being used.

- While installing Ollama on macOS and Linux is a bit different from Windows, the process of running LLMs through it is quite similar.