At its annual I/O conference in California, Google made several AI announcements, including new models and upgrades to existing ones. One of the most interesting was Project Astra, a multimodal assistant that works in real time and combines the abilities of Google Lens and Gemini to give you information about your surroundings.

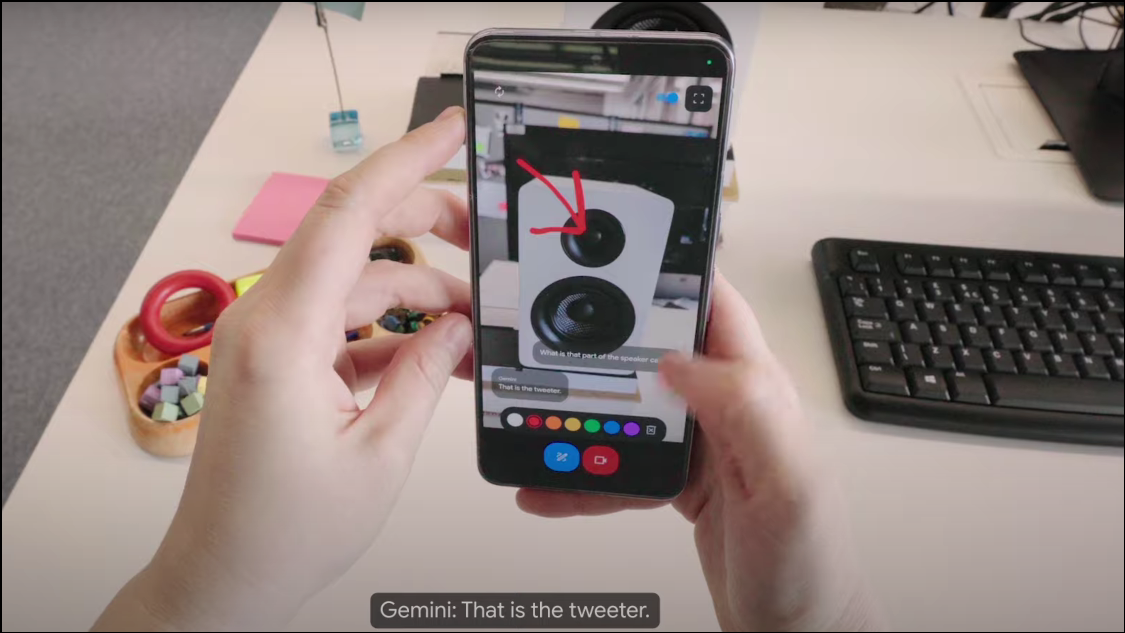

How Does Project Astra Work?

At the conference, Google showed off an early version of Project Astra, which functions as a camera-based chatbot that gathers information from your surroundings through your device's camera. As a multimodal AI assistant, it accepts audio, video, and images as input and bases its responses on them. It runs on the Gemini 1.5 Pro model.

The chatbot analyzes objects and surroundings in real time and answers queries very quickly, which makes it feel similar to a human assistant, or Tony Stark's beloved JARVIS. Project Astra also works with wearables like smart glasses, so there is a lot of potential for it to be integrated into different devices.

Project Astra can better understand the context it is being used in, process information faster, and retain it for later recall. Its speech capabilities are also much improved compared to those of earlier Gemini models, so it sounds more natural and human-like.

What Can Project Astra Do?

Google's short demo suggests that Project Astra can help with a wide range of tasks. It can observe and comprehend objects and locations through the camera and microphone and give you information about them. For instance, you can aim your phone at a piece of code and discuss it with the chatbot, or ask it to identify an object and explain its use.

Similarly, thanks to its location awareness, Project Astra can provide information about your locality just by looking at your surroundings. It can also retain information shown to it, which is handy if you want to locate misplaced items, as shown in the demo when it helped the user find their glasses.

You can also ask the AI assistant for creative ideas, just like with Gemini on your phone. For example, you can ask it to come up with lyrics for a song, a name for a musical band, or anything else. Basically, Project Astra aims to be a universal AI assistant that can provide you with information in real time in a very conversational manner.

When Will Project Astra Be Available?

As of now, Google has not made any announcements regarding the availability of Project Astra. The version shown in the demo is an early prototype, but Google hinted that these capabilities might be integrated into the existing Gemini app at a later stage.

With Project Astra, Google is striving to lead the evolution of AI assistants so they become more useful and easier to use. However, Google is not the only one pursuing this goal. OpenAI also recently announced GPT-4o, which makes ChatGPT multimodal and enhances its capabilities and efficiency. ChatGPT's new Voice Mode (to be released soon) can also use video input from the device's camera while interacting with users.

Right now, the difference between the two is that GPT-4o will soon be available on all devices running ChatGPT, while Project Astra is expected to arrive as Gemini Live at some point in the future, with no release date announced so far. With the GPT-4o-powered ChatGPT arriving earlier, it remains to be seen whether Google's Project Astra will be good enough to rival OpenAI's more popular chatbot.