Meta’s new standalone AI app introduces a more personal, conversational assistant that works across mobile devices, the web, and Meta’s smart glasses. Unlike the previous AI integrations within Facebook, Instagram, WhatsApp, and Messenger, this app provides a dedicated environment where users can interact with Meta AI using both text and voice, manage preferences, and explore a social feed of AI-generated content. The launch is Meta’s direct response to competitors like ChatGPT and Gemini, signaling a new phase for consumer AI assistants.

How the Standalone Meta AI App Works

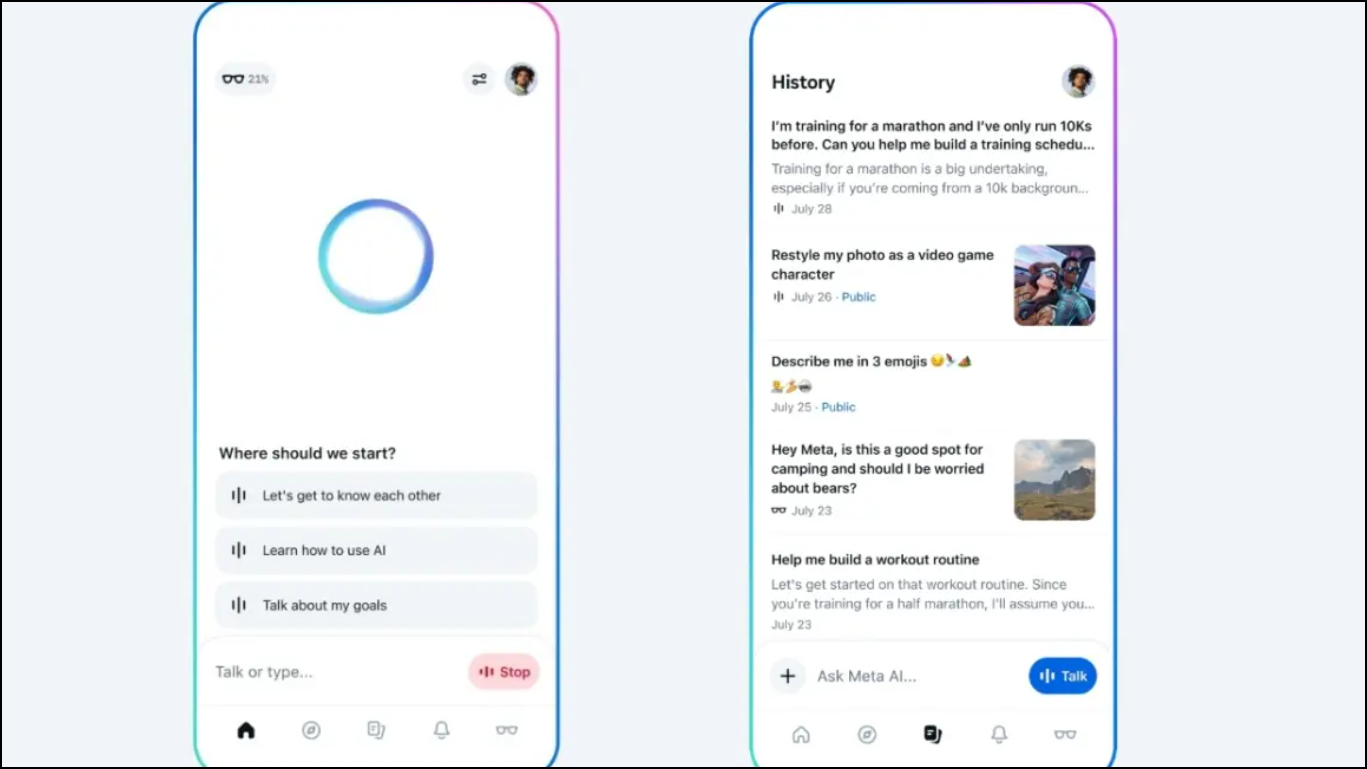

The dedicated Meta AI app is built on Meta’s Llama 4 language model, allowing users to hold natural conversations, generate images, and edit visuals within the same interface. After signing in with a Meta account or a linked Facebook/Instagram profile, users land on a home screen that prioritizes voice interactions and context-aware assistance. The app’s voice feature supports real-time, full-duplex conversations, letting users speak and listen simultaneously for a more fluid exchange, something not available in the AI features embedded within Meta’s social apps.

By introducing a standalone app, Meta positions its assistant as a daily companion rather than just an optional add-on. The app’s “Ready to Talk” mode keeps it listening for commands, while a visible microphone indicator maintains transparency about when the assistant is active. For those who prefer privacy, voice activation can be toggled off in the settings.

Social Discovery and Community Features

One of the app’s standout additions is the Discover feed, a curated stream where users can browse, remix, and share AI-generated prompts and images. This feature transforms the AI experience from a one-on-one chat into a social activity, allowing people to see creative uses, comment, and interact with content from others in the Meta ecosystem. Unlike the more limited AI integrations in Facebook or Instagram, where AI is typically used for search, recommendations, or chat support, the standalone app turns AI into a platform for creative expression and community engagement.

Users can share their own AI creations in the Discover feed, but nothing is posted publicly unless the user opts in. This social layer is unique to the standalone app and is not mirrored in Meta’s other apps, where AI usage remains largely private and utility-driven.

Personalization and Memory Across Devices

The standalone Meta AI app is designed to remember user preferences and context, tailoring responses based on information users choose to share from their Meta profiles. By connecting Facebook and Instagram accounts through the Meta Accounts Center, the assistant can draw from a wider range of user data, such as interests, liked content, or activity history, to deliver more relevant answers. This memory feature is opt-in and managed through the app’s settings, where users can instruct the AI to “remember this” and review saved information at any time.

In contrast, the AI features within WhatsApp, Messenger, Facebook, and Instagram offer only limited personalization, typically drawing on the context of a single conversation or recent activity. The standalone app’s memory and cross-device continuity, especially when paired with Meta smart glasses, allow users to start a conversation on one device and pick it up later on another, a capability not available in the embedded AI experiences.

Seamless Integration with Meta Devices and Web

Meta’s new app also serves as the companion for Ray-Ban Meta smart glasses, replacing the previous Meta View app. This integration means users can initiate conversations or capture moments with their glasses, then manage content, settings, and conversation history directly from the app. The web version of Meta AI has also been upgraded to mirror the app’s features, including voice interaction and the Discover feed, while supporting document editing and analysis in select countries. This unified experience simplifies device management and ensures continuity across desktop, mobile, and wearable devices.

Key Differences: Standalone App vs. Meta AI in Other Apps

- Dedicated Environment: The standalone app offers a focused, distraction-free space for AI interactions, while embedded AI in social apps is often secondary to messaging or browsing.

- Advanced Voice Features: Full-duplex, real-time voice conversations are exclusive to the app, with more limited voice support in Messenger or WhatsApp.

- Social Discovery: The Discover feed for sharing and remixing AI content is unique to the standalone app.

- Personalization and Memory: The app remembers preferences and context across devices, whereas AI in other Meta apps has limited or session-based memory.

- Device Integration: Pairing and management of Meta smart glasses are built into the standalone app and are not available in the other apps.

- Image and Document Tools: The app supports image generation and editing in a single interface, and the web version adds document editing and analysis in select countries, a combination not found in the embedded AI assistants.

Access and Availability

The Meta AI app is currently available for iOS and Android in select markets, with web access for desktop users. Voice features and advanced personalization are initially limited to the US, Canada, Australia, and New Zealand. Existing Meta View users will find their paired Ray-Ban Meta glasses and settings automatically transferred to the new app. As Meta continues to collect user feedback, more features and a broader rollout are expected in the coming months.

Meta’s standalone AI app delivers a more conversational, personalized, and social assistant than what’s possible within Facebook, WhatsApp, or Instagram, setting a new standard for everyday AI interaction across devices.