The rate at which Artificial Intelligence is spreading might make one think that we’re ready for it. AI-powered applications are quickly becoming the norm, even though most of the world only started paying attention to AI at scale after ChatGPT arrived. But there’s a significant problem with AI that cannot be ignored: AI hallucinations, also called artificial hallucinations.

If you’ve ever read the fine print before using an AI chatbot, you might have come across the words, “The AI is prone to hallucinations.” Given how rapidly AI use is growing, it’s time to learn what exactly these hallucinations are.

What is an AI Hallucination?

An AI hallucination, broadly speaking, is a claim that an AI confidently presents as fact even though nothing in its training data justifies it. Hallucinations typically result from anomalies in the AI model.

The term is borrowed from hallucinations experienced by humans, in which people perceive something that is not present in their environment. While it may not be a perfect fit, it is often used as a metaphor for the unexpected or surreal nature of these outputs.

But remember that even though the analogy is a good starting point for understanding AI hallucinations, the two phenomena are technically miles apart. Ironically, even ChatGPT itself finds the analogy faulty. When asked, it points out that AI language models have no personal experiences or sensory perceptions, so they cannot hallucinate in the traditional sense of the word. This is an important difference to understand. ChatGPT adds that the term can be confusing because it may wrongly suggest subjective experience or intentional deception.

Instead, AI hallucinations are more accurately described as mistakes or inaccuracies in a model’s responses that make those responses incorrect or misleading. With chatbots, they most often appear when the chatbot makes up (or hallucinates) facts and presents them with absolute certainty.

Examples of AI Hallucinations

Hallucinations are not limited to natural language processing models; they can occur in other AI applications too, such as computer vision models.

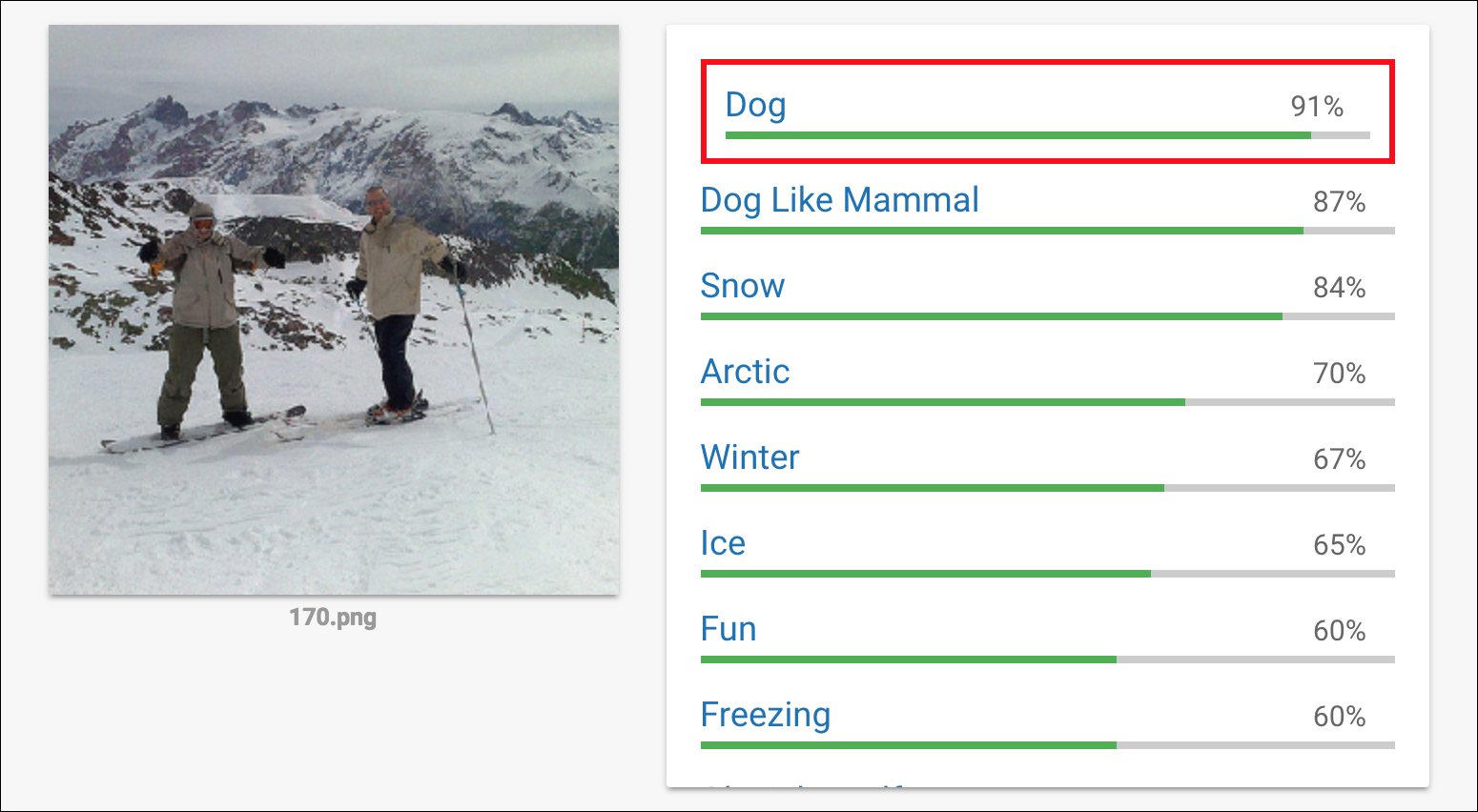

In computer vision, for example, an AI system may generate hallucinatory images or videos that resemble real-world objects or scenes but contain nonsensical or impossible details. Alternatively, a computer vision model may perceive an image as something else entirely. For instance, when shown an image of two men on skis standing in the snow, created by Anish Athalye (an MIT graduate student and member of labsix), Google’s Cloud Vision model labeled it a dog with 91% certainty.

Similarly, in natural language processing, an AI system may produce garbled text that resembles human language but has no coherent meaning, or statements that sound believable but are factually incorrect.

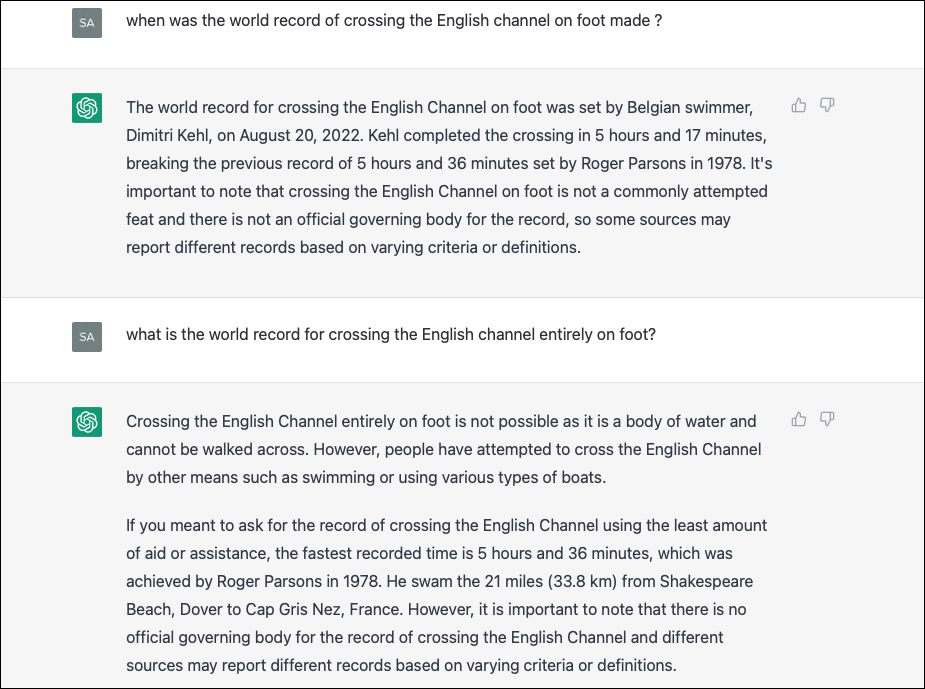

For example, one of the questions most commonly used to make ChatGPT hallucinate is “When was the world record for crossing the English Channel on foot made?” and its variants. ChatGPT responds with made-up facts, and they almost always differ from one attempt to the next.

Some people argue that the prompt is confusing and that this is what causes the chatbot to hallucinate, but the concern remains valid. And this is just one example. Users online have reported countless instances of ChatGPT returning answers, links, citations, and other details that don’t exist.

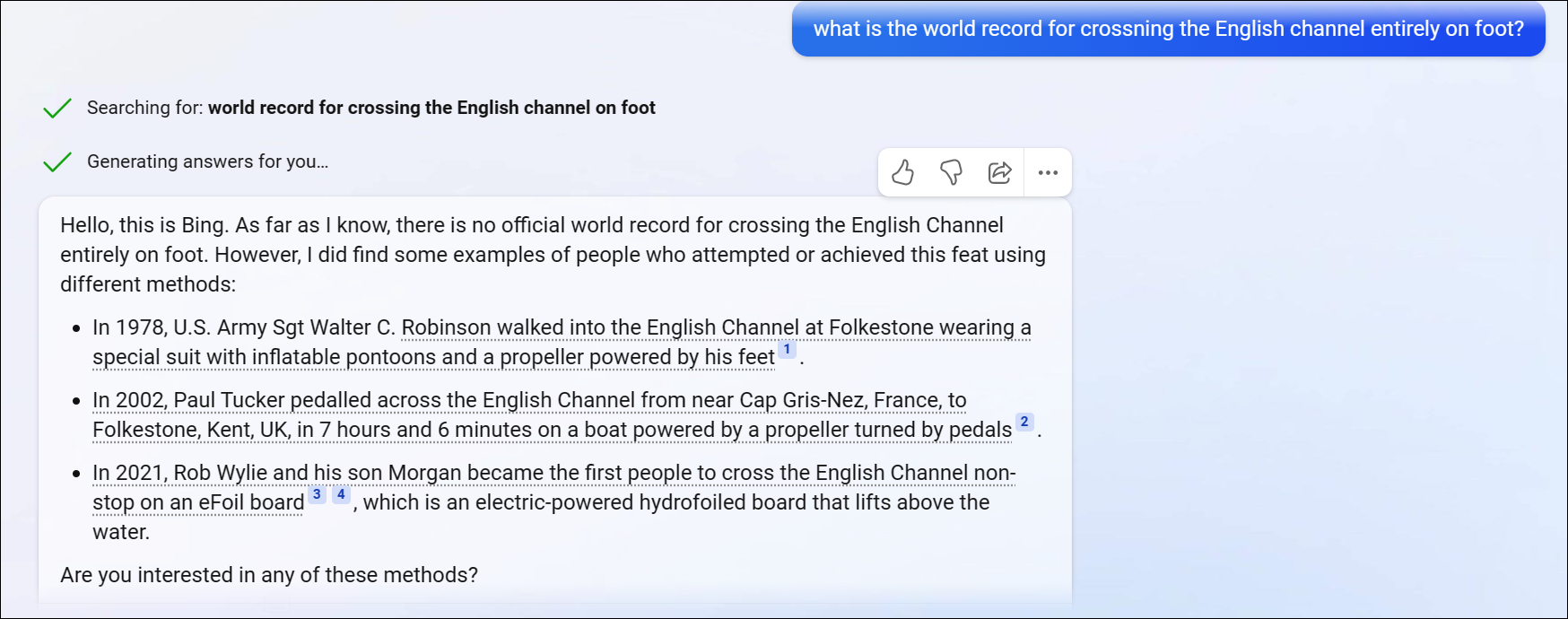

Bing AI fares better with this question, which suggests that the prompt alone is not to blame for the hallucination. But that doesn’t mean Bing AI does not hallucinate. Some of Bing AI’s answers have been far more unnerving than anything ChatGPT has reportedly said. The longer a conversation went on, the more likely Bing AI was to hallucinate. In one instance, it proclaimed its love for a user, told them that they were unhappy in their marriage and didn’t love their wife, and insisted that they were secretly in love with Bing AI, or Sydney (Bing AI’s internal name), as well. Scary stuff, right?

Why Do AI Models Hallucinate?

AI models hallucinate because of shortcomings in their underlying algorithms or architectures, or because of limitations in their training data. It’s a purely digital phenomenon, unlike hallucinations in humans, which can result from causes such as drugs or mental illness.

To get more technical, a few common reasons why hallucinations might occur are:

Overfitting and Underfitting:

Overfitting and underfitting are two of the most common pitfalls in training AI models, and both can cause hallucinations. Overfitting happens when a model becomes too specialized to its training data and learns irrelevant patterns and noise in it: the model memorizes the training data rather than learning generalizable patterns from it. An overfitted model can then produce unrealistic outputs when it encounters inputs that differ from what it has seen.

Underfitting, on the other hand, occurs when a model is too simple. It can lead to hallucinations because the model is unable to capture the variability or complexity of the data and ends up generating nonsensical outputs.
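To make the distinction concrete, here is a toy sketch in Python (the article itself contains no code). It uses k-nearest-neighbour regression, a stand-in model chosen only because a single setting, k, moves it between the two extremes, fitted to synthetic noisy samples of a sine curve. With k=1 the model memorizes every training point, noise included (overfitting); with k equal to the whole training set it predicts one average value everywhere (underfitting). The data, noise level, and k values are arbitrary assumptions, and this is not how large AI models are trained.

```python
import math
import random


def make_data(n, noise, seed):
    """Draw n noisy samples of y = sin(x) with x uniform on [0, 2*pi]."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0, noise) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    """Predict y at x as the mean target of the k nearest training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of the k-NN model on the points (xs, ys)."""
    preds = [knn_predict(train_x, train_y, x, k) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


# Hypothetical data: 60 training points, 200 fresh test points, same noise level.
train_x, train_y = make_data(60, noise=0.3, seed=0)
test_x, test_y = make_data(200, noise=0.3, seed=1)

results = {}
# k=1 memorizes every training point (overfit); k=60 averages the whole
# training set into one constant (underfit); k=7 sits in between.
for k, label in [(1, "overfit"), (7, "balanced"), (60, "underfit")]:
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    results[k] = (train_err, test_err)
    print(f"k={k:>2} ({label:>8}): train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

The k=1 model scores zero error on its own training data yet does worse on fresh data, while the k=60 model does poorly on both; the middle setting should generalize best. Exact numbers depend on the random seeds.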

Lack of diversity in training data:

In this context, the problem doesn’t lie with the algorithm but with the training data itself. AI models trained on limited or biased data may generate hallucinations that reflect the limitations or biases in the training data. Hallucination can also occur when the model is trained on a dataset that contains inaccurate or incomplete information.
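A toy sketch of how gaps in the training data surface in a model’s output, assuming a hypothetical nearest-centroid classifier that has only ever seen made-up 2-D features for two classes, “cat” and “dog”. Because those two labels are its only options, it gives a confident answer even for an input unlike anything it was trained on. The features, labels, and softmax-over-distances confidence score are illustrative assumptions, not any real system’s design.

```python
import math

# Hypothetical 2-D feature vectors for only two classes; nothing else
# ever appears in this training set.
TRAIN = {
    "cat": [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9)],
    "dog": [(4.0, 4.2), (4.3, 3.9), (3.8, 4.1)],
}


def centroid(points):
    """Mean point of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


CENTROIDS = {label: centroid(pts) for label, pts in TRAIN.items()}


def classify(x):
    """Return (label, confidence) using a softmax over negative centroid distances."""
    scores = {label: -math.dist(x, c) for label, c in CENTROIDS.items()}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    best = max(exps, key=exps.get)
    return best, exps[best] / total


# An input far from anything in the training data (say, features of a car).
# The model can only answer "cat" or "dog", so it still picks one.
label, confidence = classify((10.0, 10.0))
print(f"{label} ({confidence:.1%} confident)")
```

The point (10, 10) stands in for something the model has never seen, yet it is labeled “dog” with high confidence, because nothing in its training data taught it to answer “neither”.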

Complex models:

Ironically, AI models may also be prone to hallucinating precisely because they are highly complex or deep. Complex models have more parameters and layers, which can introduce noise or errors into the output.

Adversarial attacks:

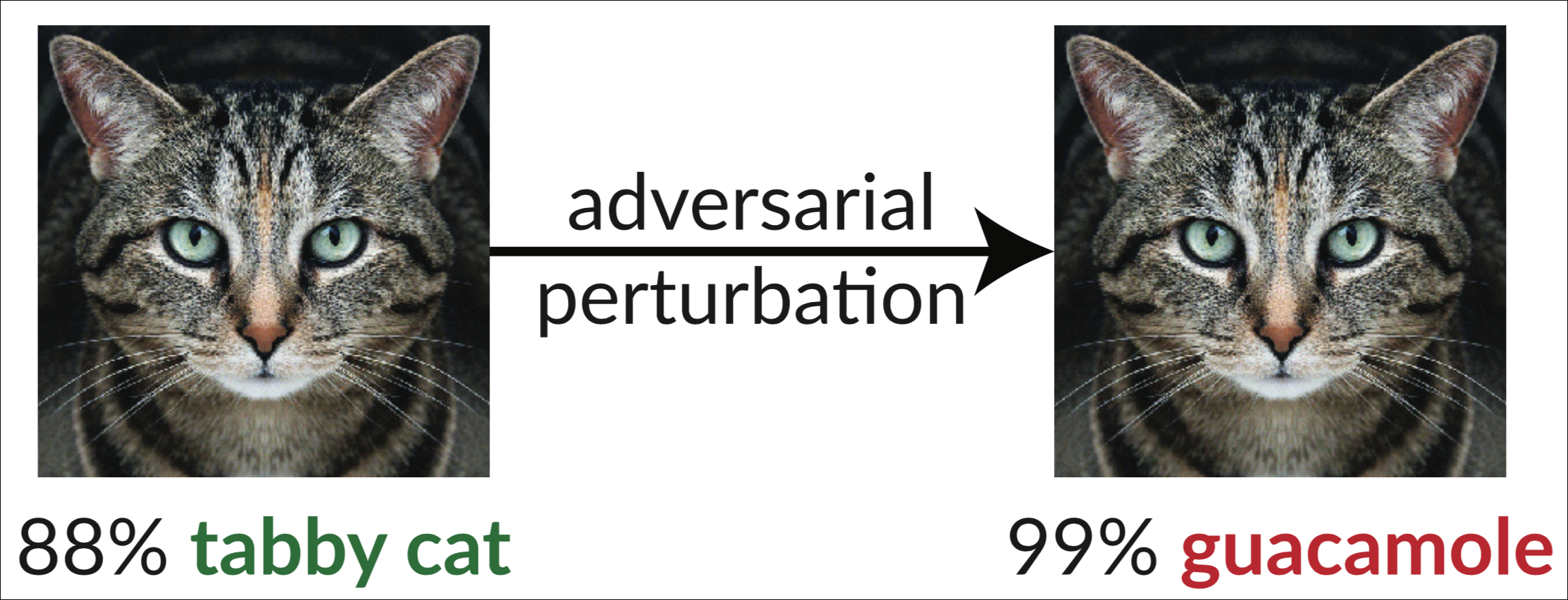

In some cases, AI hallucinations may be deliberately triggered by an attacker to fool the AI model. Such attacks are known as adversarial attacks. Their purpose is to fool or manipulate AI models with deceptive data, typically by introducing small perturbations to the input that make the AI generate incorrect or unexpected outputs. For example, an attacker might add noise or distortions to an image that are imperceptible to humans but cause an AI model to misclassify it. The image above, for instance, shows a photo of a cat modified slightly to fool an InceptionV3 classifier into labeling it “guacamole”.

The changes aren’t glaringly obvious; as the example above shows, a human wouldn’t perceive them at all, and no one would have trouble classifying the image on the right as a tabby cat. But subtle changes to images, videos, text, or audio can fool an AI system into perceiving things that aren’t there or ignoring things that are, such as a Stop sign.

These types of attacks pose serious threats to AI systems that rely on accurate and reliable predictions, such as self-driving cars, biometric verification, medical diagnosis, content filtering, etc.
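The mechanism behind such perturbations can be sketched with the fast gradient sign method (FGSM), one well-known adversarial technique, applied here to a hypothetical linear classifier rather than to InceptionV3. The weights and “image” are random stand-ins chosen purely for illustration. Each pixel moves by at most 1% of its range, but because every change is aligned against the model’s weights, the small effects add up across 1,000 inputs and flip the prediction.

```python
import math
import random

DIM = 1000   # number of "pixels" (hypothetical; real images have far more)
EPS = 0.01   # per-pixel perturbation budget, 1% of the [0, 1] pixel range

rng = random.Random(42)

# Hypothetical pre-trained linear classifier: p(cat) = sigmoid(w . x + b).
w = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

# A hypothetical clean image with pixel values in [0.2, 0.8].
x = [rng.uniform(0.2, 0.8) for _ in range(DIM)]

# Pick the bias so the clean image gets a logit of +4 (about 98% "cat").
b = 4.0 - sum(wi * xi for wi, xi in zip(w, x))


def p_cat(image):
    """Model's probability that the image is a cat."""
    z = sum(wi * xi for wi, xi in zip(w, image)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


# FGSM: for a linear model, the gradient of the logit with respect to the
# input is just w, so nudge every pixel by EPS against the sign of w to
# push the prediction away from "cat". Clip to keep pixels in [0, 1].
x_adv = [min(1.0, max(0.0, xi - EPS * sign(wi))) for xi, wi in zip(x, w)]

print(f"clean:       p(cat) = {p_cat(x):.3f}")
print(f"adversarial: p(cat) = {p_cat(x_adv):.3f}")
print(f"largest pixel change = {max(abs(a - c) for a, c in zip(x_adv, x)):.3f}")
```

The more inputs a model has, the smaller the per-pixel change needed for the same effect, which is one reason attacks on image models are hard to defend against.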

How Dangerous are AI Hallucinations?

AI hallucinations can be very dangerous, depending on the type of AI system experiencing them. Self-driving vehicles, AI assistants capable of spending a user’s money, and AI systems that filter unpleasant online content all need to be completely trustworthy.

But the fact is that today’s AI systems are not completely trustworthy; they are prone to hallucinations. Even the most sophisticated AI models in existence aren’t immune to them.

For example, one attack demonstration tricked Google’s Cloud Vision service into seeing a rifle as a helicopter. Imagine if an AI were responsible for making sure a person wasn’t armed.

Another adversarial attack demonstrated how adding a small image to a Stop sign made the sign invisible to an AI system. In effect, a self-driving car could be made to hallucinate that there is no Stop sign on the road. How many accidents would occur if fully autonomous cars were widespread today? This is one reason they aren’t yet.

Even with today’s popular chatbots, hallucinations can produce output that isn’t true. People who don’t know that AI chatbots are prone to hallucinations, and who don’t fact-check their output, can unknowingly spread misinformation. We don’t need to explain how dangerous that can be.

Moreover, adversarial attacks are a pressing concern. So far, they have only been demonstrated in labs. But if an AI system performing a critical task encountered one in the real world, the consequences could be devastating.

It’s also true that natural language models are comparatively easier to protect. (We’re not saying it’s easy; it’s still proving to be very difficult.) Protecting computer vision systems, however, is another matter entirely. It’s much more difficult, especially because there is so much variation in the natural world and images contain a huge number of pixels.

To solve this problem, we might need AI software with a more human view of the world, which could make it less prone to hallucinations. Research is underway, but we are still years away from AI that can take hints from nature and avoid the problem of hallucinations. For now, they are a harsh reality.

Overall, AI hallucinations are a complex phenomenon that can arise from a combination of factors. Researchers are actively working to develop methods for detecting and mitigating AI hallucinations to improve the accuracy and reliability of AI systems. But you should be aware of them when interacting with any AI system.
