Google recently announced a slew of updates to its Gemini AI, including upgraded and entirely new models. One that drew a lot of attention was Gemini Live, a multimodal AI model with voice and video capabilities.

Since Bard was renamed Gemini in February, the AI model has been serving as a replacement for Google Assistant on Android devices. However, it is still quite limited in what it can do. With Gemini Live, Google aims to change this by offering a more powerful and versatile AI model.

What is Gemini Live?

To give users an improved AI experience and to take on OpenAI's GPT-4o-enhanced ChatGPT, Google announced Gemini Live at its recent I/O developer conference. Gemini Live will let users hold natural, personalized conversations with it in real time through voice and, later on, video.

The new AI model is a part of Google's Project Astra, which is the search giant's attempt to build a universal AI assistant that can use different types of inputs from everyday life to provide assistance. For instance, Gemini Live can use text, visuals from your smartphone camera, and your voice to answer questions.

According to Google, the new natural language model will not only help users solve problems and perform various actions but also make interactions feel completely natural. Users will be able to launch Gemini Live by tapping the voice icon on their phone, which opens the AI in full screen with an audio waveform effect.

You can then converse with the AI just as you would with a real personal assistant. Interview preparation is a good example of how the upgraded model can help: ask Gemini Live for help getting ready, and it will suggest skills you can highlight, offer public speaking tips, and more.

Features

Gemini Live comes with a few features that could make it a far more capable AI assistant than Google Assistant, Apple's Siri, or Amazon's Alexa.

Two-Way Voice Conversations

Gemini Live lets you talk to it and replies with human-like speech, making conversations engaging and intuitive. For instance, you can ask it about the weather, and it will give you an accurate, concise update.

Smart Assistant Capabilities

The AI model can serve as a smart assistant and perform tasks like summarizing information from emails and updating your calendar. For example, you can take a photo of a concert flyer, and Gemini will add the event to your calendar.

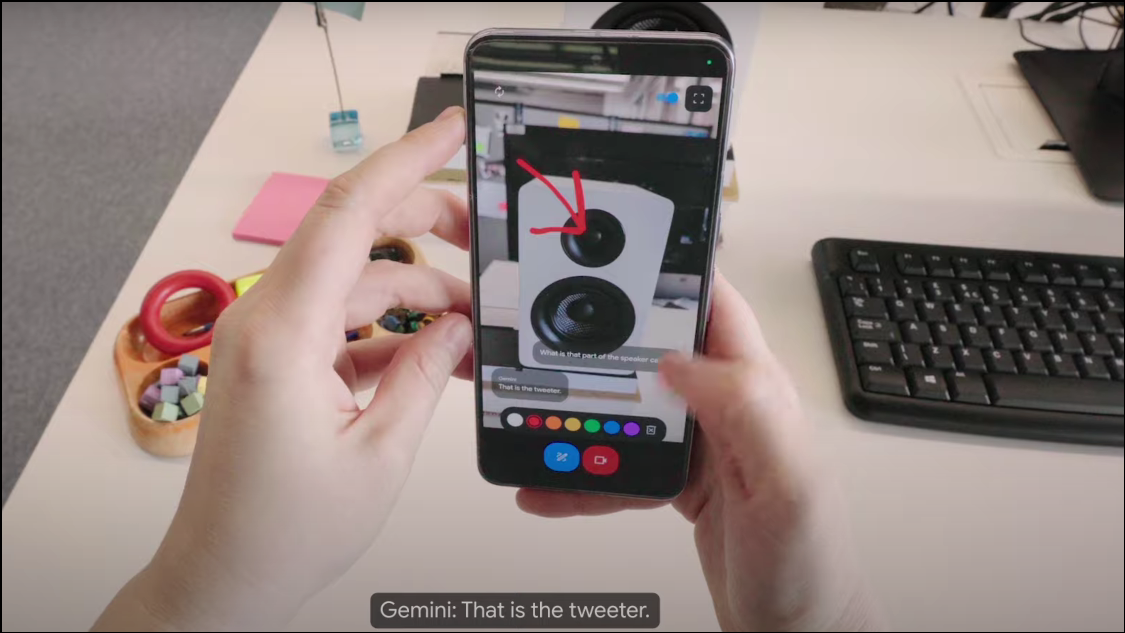

Visual Capabilities

Using your smartphone's camera, Gemini Live can process video in real time. This lets it identify objects and answer questions about them. For example, if you point your camera at a speaker and ask Gemini to identify it, it will tell you what it is and even name its make and model.

How Does Gemini Live Operate?

Project Astra combines speech and visual inputs into a form the AI model can readily understand. The model can then react to that information and provide the required assistance. Like OpenAI's GPT-4o-powered ChatGPT, Gemini Live is a multimodal AI and does not rely solely on text as input.

At launch, Gemini Live will rely on voice input to gather and analyze data. In the coming months, it will be upgraded to process video as well, breaking footage down frame by frame for better comprehension and interaction.

The AI can adapt to the speed at which different users speak, and you can even interrupt it to ask for clarification or provide more information. Because it mimics human dialogue, you can have an engaging back-and-forth conversation with it, just as you would with a human assistant. You will also be able to choose from ten different voices for the AI.

GPT-4o vs. Gemini Live

Both GPT-4o and Gemini Live are multimodal AI models, but it is difficult to say which one performs better in real-world use, especially since neither company has made these new voice and video capabilities publicly available yet.

One difference is that, unlike ChatGPT, Gemini Live relies on other AI models, such as Google Veo and Imagen 3, to produce output in the form of videos and images. Meanwhile, in the demos shown by OpenAI and Google, ChatGPT seemed more natural, and the new GPT-4o model could even detect and simulate human emotions through vocal tone.

GPT-4o can also adapt to the way you want it to answer, which Gemini Live cannot do, at least in its current state.

Gemini Live Availability

Gemini Live will be available to subscribers of Gemini Advanced, the paid version of the AI chatbot. It will roll out in the coming months and is expected to be widely available by the end of the year.

Apps like Google Messages will be able to take full advantage of Gemini Live, allowing users to interact with the AI directly within the messaging app.

Gemini Live might be the next major upgrade to Google's AI chatbot, and just what it needs to take on rivals like OpenAI's ChatGPT. With multimodal functionality and powerful speech capabilities, the upgraded model could help Google deliver a versatile and reliable digital assistant.

For now, Google has only announced that it will bring the new AI model to paid subscribers. This leaves out free users, who make up a huge chunk of Google's user base, so we hope Google changes its stance and expands the availability of Gemini Live.