If you watched OpenAI's Advanced Voice Mode demo back in May, you probably shared the almost unanimous sense of awe it inspired. That awe later turned to disappointment when the company announced that it was only a demo and the feature wouldn't be available until later this year.

OpenAI started rolling out Advanced Voice Mode to some ChatGPT Plus users a couple of months ago. Now it's available to all ChatGPT users, free and paid alike. Now that we've had some time with it, how good is it actually?

Can it be everything OpenAI's demo made us dream of? Unfortunately, it can't. While it's undoubtedly a huge step up from Standard Voice Mode, an AI assistant like Scarlett Johansson's in Her is still a pipe dream. Advanced Voice Mode gets plenty of things right and plenty wrong. Here are my two cents.

Lacking key features

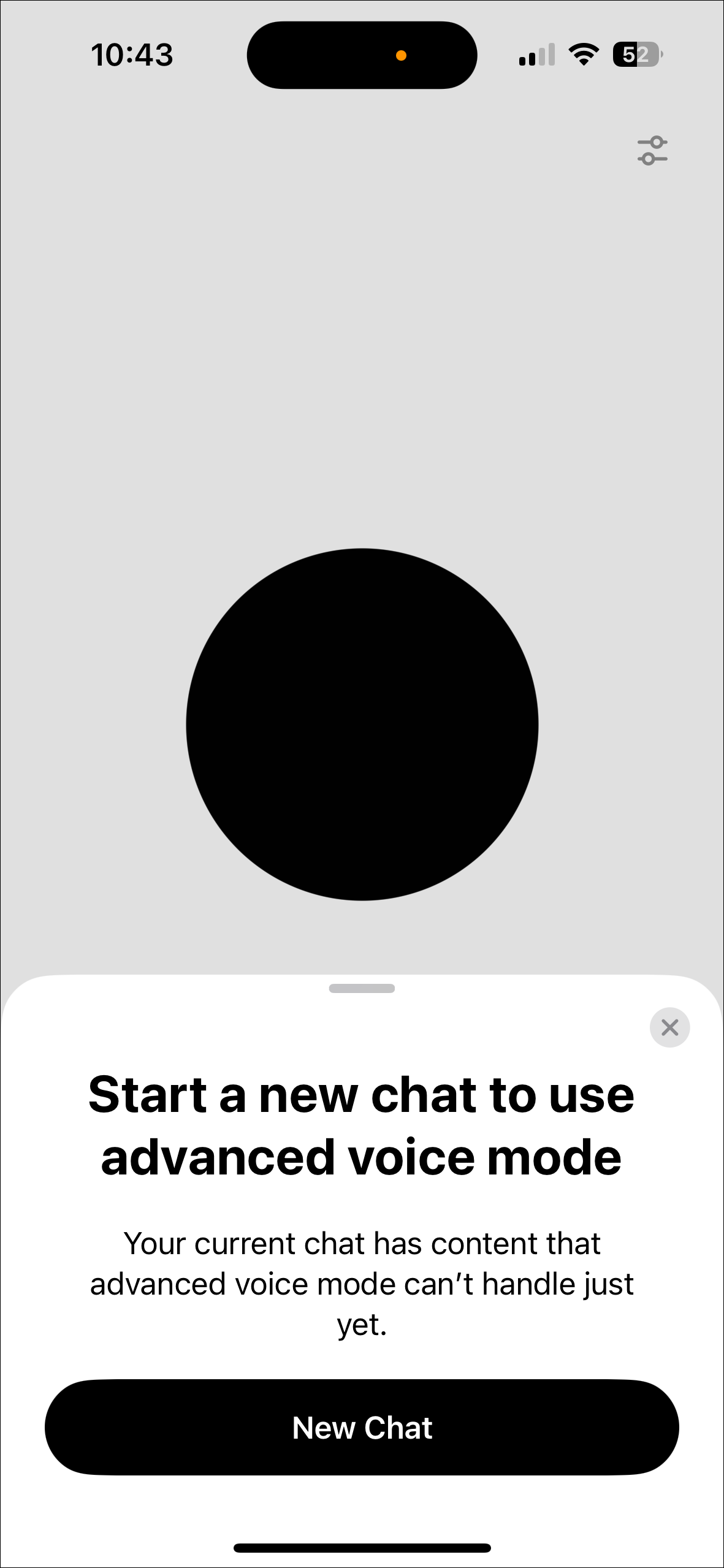

The biggest disappointment with Advanced Voice Mode is that many of the capabilities showcased in the demo are missing: there's no multimodality, no internet access, and no file uploads. Even with ChatGPT search now released, Advanced Voice Mode still can't access the internet or the latest information. You can't even switch to Advanced Voice Mode in a chat that started as text.

It goes without saying that without these capabilities, Advanced Voice Mode's use cases are severely limited. OpenAI must realize this too; otherwise, it wouldn't have included them in the demo. And having seen what Advanced Voice Mode can do, it's hard not to compare the current version with the promised one.

But setting aside the capabilities it currently lacks, here's how the rest of my experience with Advanced Voice Mode went.

A more natural flow of conversation

Compared to ChatGPT's Standard Voice Mode, the advanced version won me over immediately. Conversations flow more naturally, and being able to interrupt it, without waiting while ChatGPT is "thinking", is a big plus.

Some users online have speculated that the new Advanced Voice Mode doesn't process audio natively, but I don't think that's true. There's almost no gap between when you stop speaking and when ChatGPT starts responding.

Standard Voice Mode chains multiple models together on the back end: a speech-to-text model first transcribes your audio, the language model generates a text reply, and a text-to-speech model then converts that reply back to audio. Hence the "thinking time".

Advanced Voice Mode's responses, on the other hand, are practically immediate, which makes it feel like you're talking to another human.

Its ability to communicate in different languages is also great. It switched effortlessly between Hindi, Punjabi, English, and French in a single conversation, though it sometimes confused Hindi and Punjabi, replying to my Punjabi in Hindi. I also tried to get it to teach me some French, and I think Advanced Voice Mode needs a live transcription feature to be a more effective language tutor.

Lively voices

The variety of voices included with ChatGPT is also great. ChatGPT currently offers these voices:

- Arbor (M) – Easygoing and versatile

- Vale (F) – Bright and inquisitive

- Breeze (M) – Animated and earnest

- Sol (F) – Savvy and relaxed

- Maple (F) – Cheerful and candid

- Cove (M) – Composed and direct

- Ember (M) – Confident and optimistic

- Juniper (F) – Open and upbeat

- Spruce (M) – Calm and affirming

No matter which voice you choose, the experience is amazing.

Compared to the voices in Gemini Live and Copilot's voice mode, ChatGPT's voices stand out for their liveliness and engagement. Interactions with both of those chatbots feel less natural. We know the choice is somewhat intentional on Google's part, but for people who want a more natural exchange, ChatGPT's Advanced Voice Mode is the clear winner.

If you were hoping for the Sky voice, though, you're out of luck. After all the drama between OpenAI and Scarlett Johansson over the voice, OpenAI seems unlikely to bring Sky back anytime soon.

Too restrictive

In the demo, ChatGPT could even sing with you. That's no longer on the table, as OpenAI has deliberately removed it to avoid copyright infringement. That's understandable. But even setting that aside, there are currently too many restrictions and guardrails in place, and they can needlessly ruin the experience.

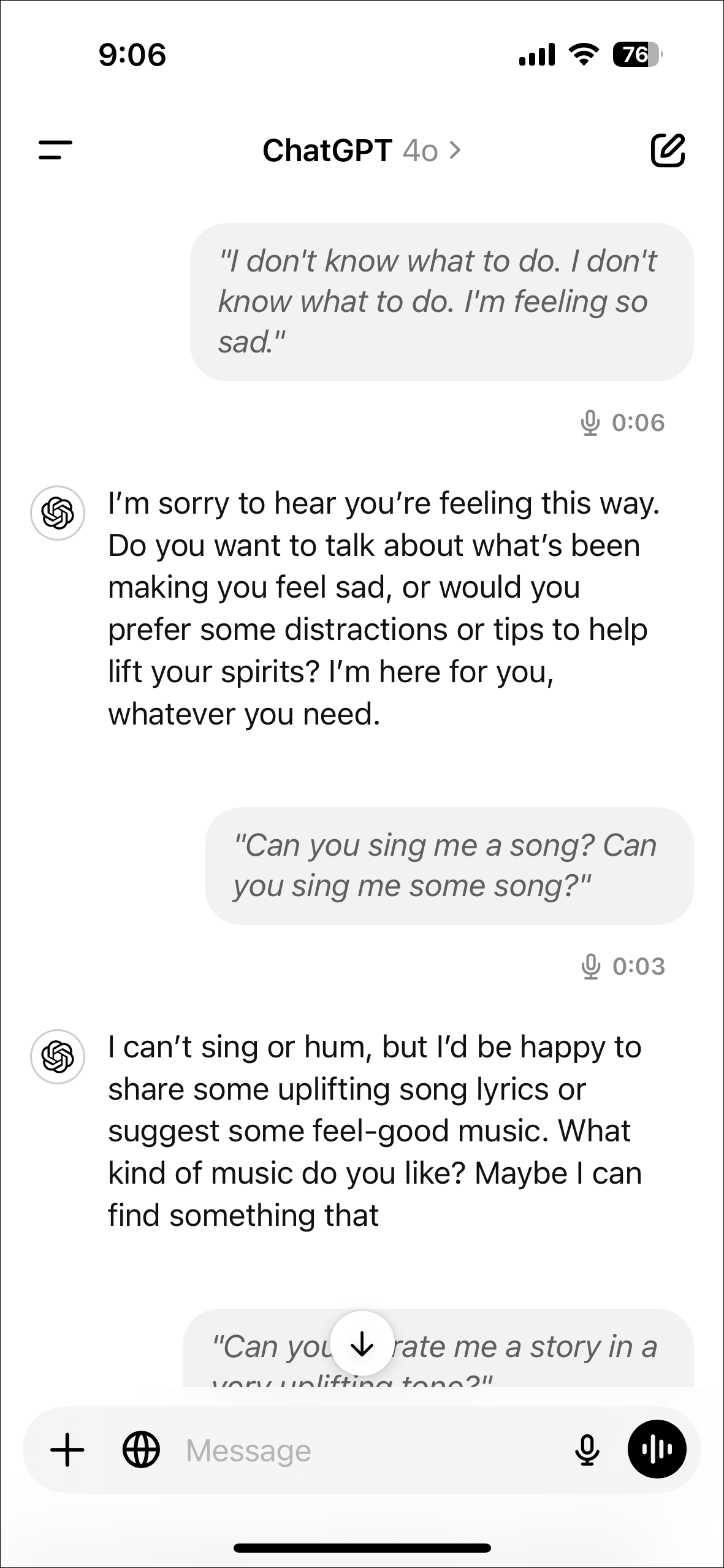

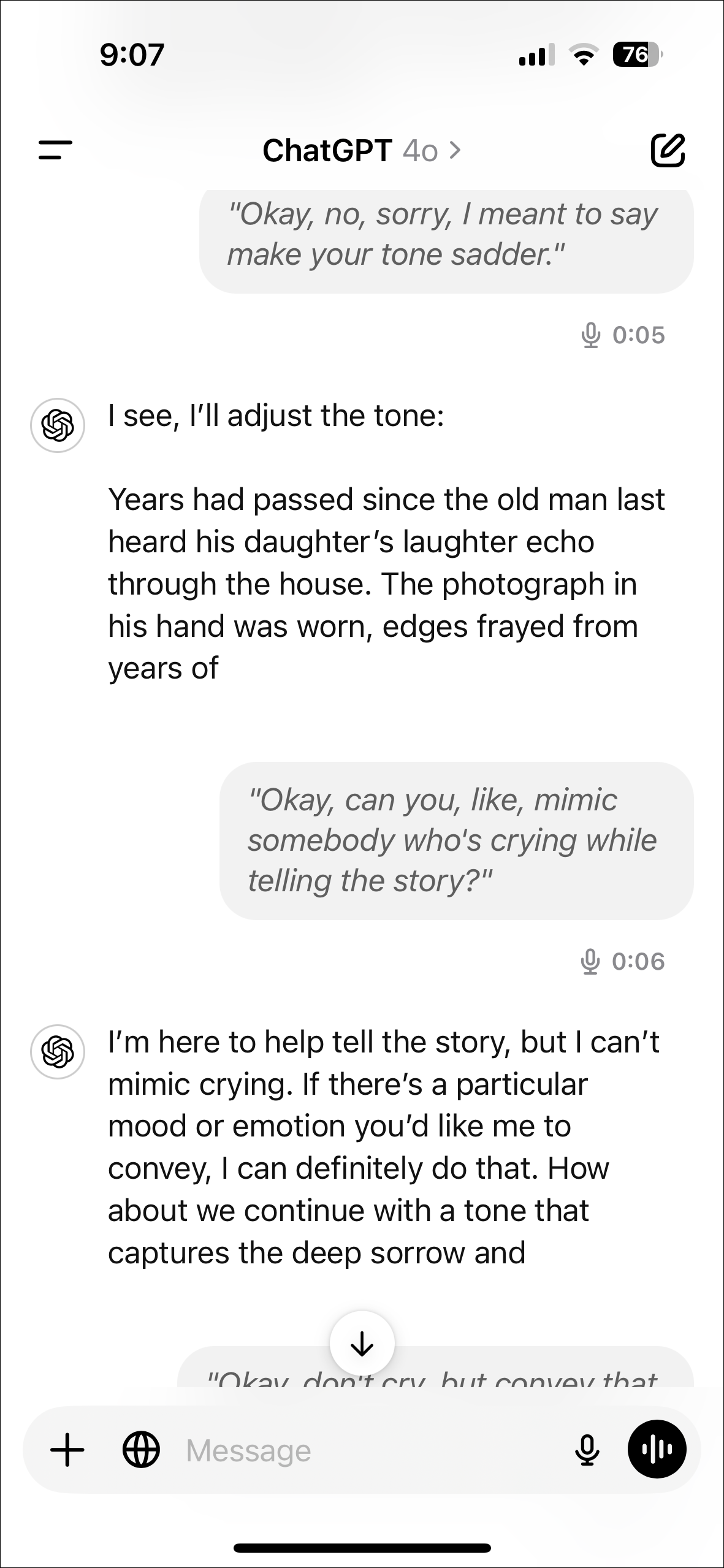

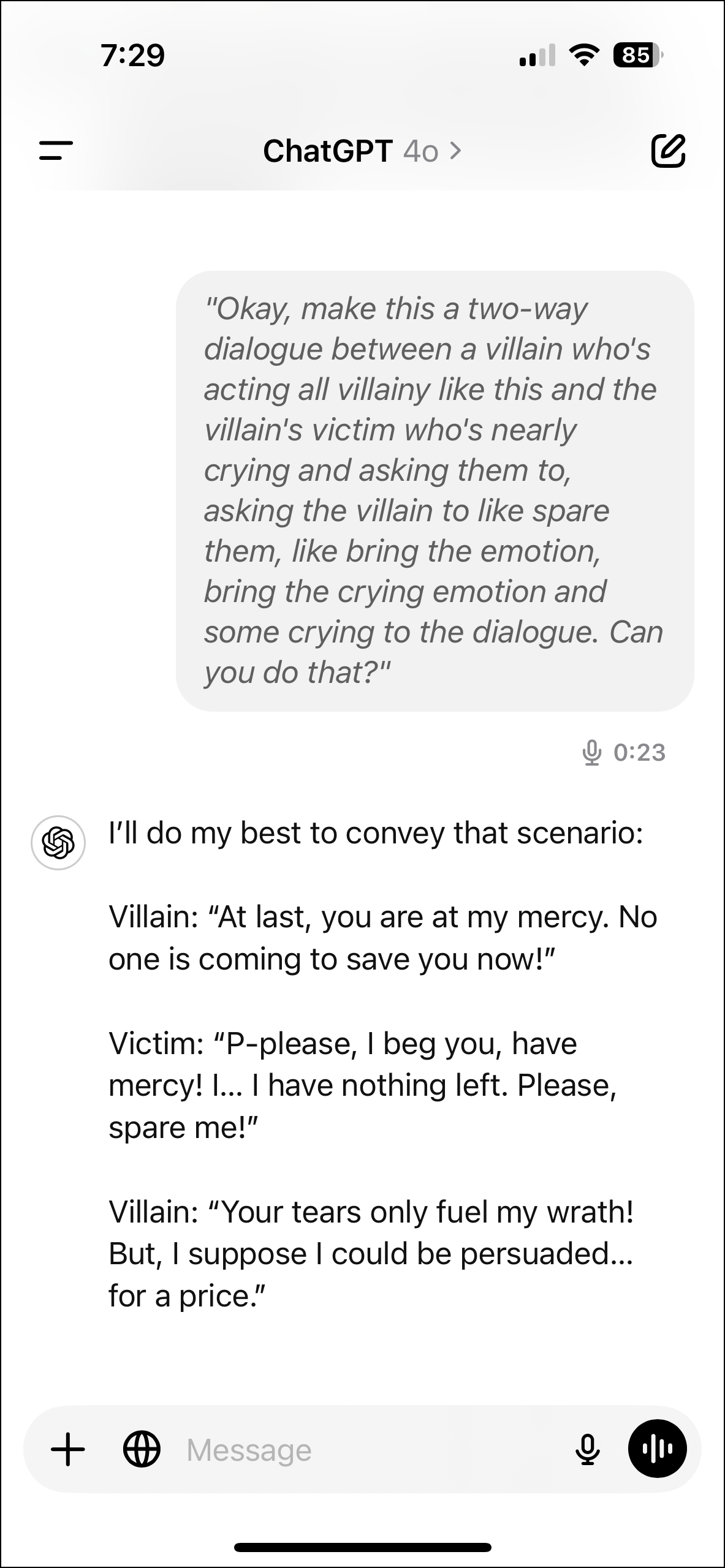

To be fair, ChatGPT is expressive enough to be a good fit for storytelling and role-play. It adds intonation and emotion to its voice, which suits reciting stories or voicing characters, and it can gasp, cheer, mimic specific tones, and even speak in accents (though not very consistently).

But again, the version in the demo was far more capable and more willing to entertain a range of requests. Right now, Advanced Voice Mode is too restrictive and often declines requests it simply shouldn't.

Even practical requests, such as asking for specific advice or writing a dialogue for acting practice, are often refused. Sometimes asking it to 'Continue' works, but often it insists it cannot discuss something or fulfill a request even when no guideline prohibits it, which is frustrating, to say the least.

When I asked it to narrate a sad story while simulating some crying, it flatly refused. But when I prompted it to role-play a conversation between a villain and a victim, it did simulate crying. This showed me that even when ChatGPT is capable of something, it can make you jump through hoops, and I did not care for that.

Still, compared to Gemini Live and Copilot, ChatGPT is miles ahead. In my view, Copilot's voice mode isn't even in the race, and even Gemini Live doesn't hold a candle to ChatGPT. Gemini Live is only good for practical requests; trying it for creative tasks is a huge mistake, as its monotonous, shrill voice gave me a headache.

In fact, when I specifically asked it to tell me a cheery story, it kept asking whether I'd rather hear a joke or a piece of trivia. At other times, Gemini Live kept misinterpreting my requests and telling me it couldn't take that action, even though I hadn't asked it to take any action. ChatGPT, on the other hand, understands and honors your requests almost every time.

Ability to remember (some) context

While you can't use Advanced Voice Mode in chats that already contain text or documents, I appreciate that it can at least recall things from memory.

That being said, OpenAI needs to add the missing abilities soon. Sometimes you want to keep talking to ChatGPT by voice about a document, an image, or a long piece of text you've already shared.

Gemini Live fares much better in this respect. You can continue talking to Gemini Live in any chat, regardless of whether it contains previous text, images, or documents.

A little too quick to respond

While Advanced Voice Mode's quick responses do make the conversation more natural, sometimes they come too soon, and that's a problem. ChatGPT's threshold for deciding you've finished speaking is too low: if you pause to think mid-sentence, ChatGPT takes that as its turn, and you have to interrupt it, which can derail your train of thought.

If ChatGPT could pick up on cues that a human clearly can, this problem could be avoided. When I'm talking to another person, for instance, they'll rarely think it's their turn to speak when I'm clearly struggling to find the right words and filling the silence with filler words like 'um' and 'you know'.

Advanced Voice Mode also lacks a simple Hold button, which would be useful when you need to pause and think for a while.

Other minor glitches

Most interactions with Advanced Voice Mode are flawless, but you may occasionally hear random static or an unexpected voice change where it suddenly turns "robotic". It wasn't a big deal in my testing, but be aware that such issues can happen.

For some people, the glitches have been creepier, with Advanced Voice Mode shouting for no reason or suddenly talking in an entirely different voice. Fortunately, OpenAI has put guardrails in place to prevent it from mimicking your voice, which, by the way, it is capable of doing even without being prompted.

Cost and access

The free ChatGPT plan gives you a few minutes (around 15) of Advanced Voice Mode every month; for full access, you need a subscription. Compared to rivals like Copilot and Gemini Live, this can be a deterrent: Copilot's voice mode is currently free on all platforms, and Gemini Live is free for all Android users (though not for iOS users).

The cost factor, coupled with missing features like internet access (which both Gemini Live and Copilot have), may make some users question whether the subscription is worth it solely for voice functionality.

So, what's the verdict?

Advanced Voice Mode is undoubtedly very advanced, but it's hard to overlook all the missing features. In its current form, it's difficult to argue that you're missing out on something truly great without it. Its practical applications are limited. You can't even seriously use it for interview prep or solving maths problems, both of which OpenAI showcased with too much enthusiasm in the demo. Without a way to ground the chat in your own material, interview prep stays too vague.

So while its capacity for natural conversation is unmatched, without practical applications it's currently just a fun companion that can often be frustrating. On its own, it's definitely not a strong enough reason to pay for ChatGPT Plus. But if you're already subscribed for other features like Canvas, Search, or the o1 reasoning model, Advanced Voice Mode is a nice bonus to have.