Google just released Gemini 2.0 Flash (experimental) a few days ago. The new model is apparently so good that it's fast replacing its predecessor across Google's services and offerings even though it's still experimental.

Gemini 2.0 Flash is available through the Gemini Chat interface and is also powering Google's infamous AI search overviews. According to Google, Gemini 2.0 Flash can even outperform the 1.5 Pro model on certain benchmarks. But benchmarks only tell part of the story, so let's forget them for a moment. How does the model actually perform when assisting end users in comparison to its predecessor, Gemini 1.5 Flash? Let's find out through a series of tests.

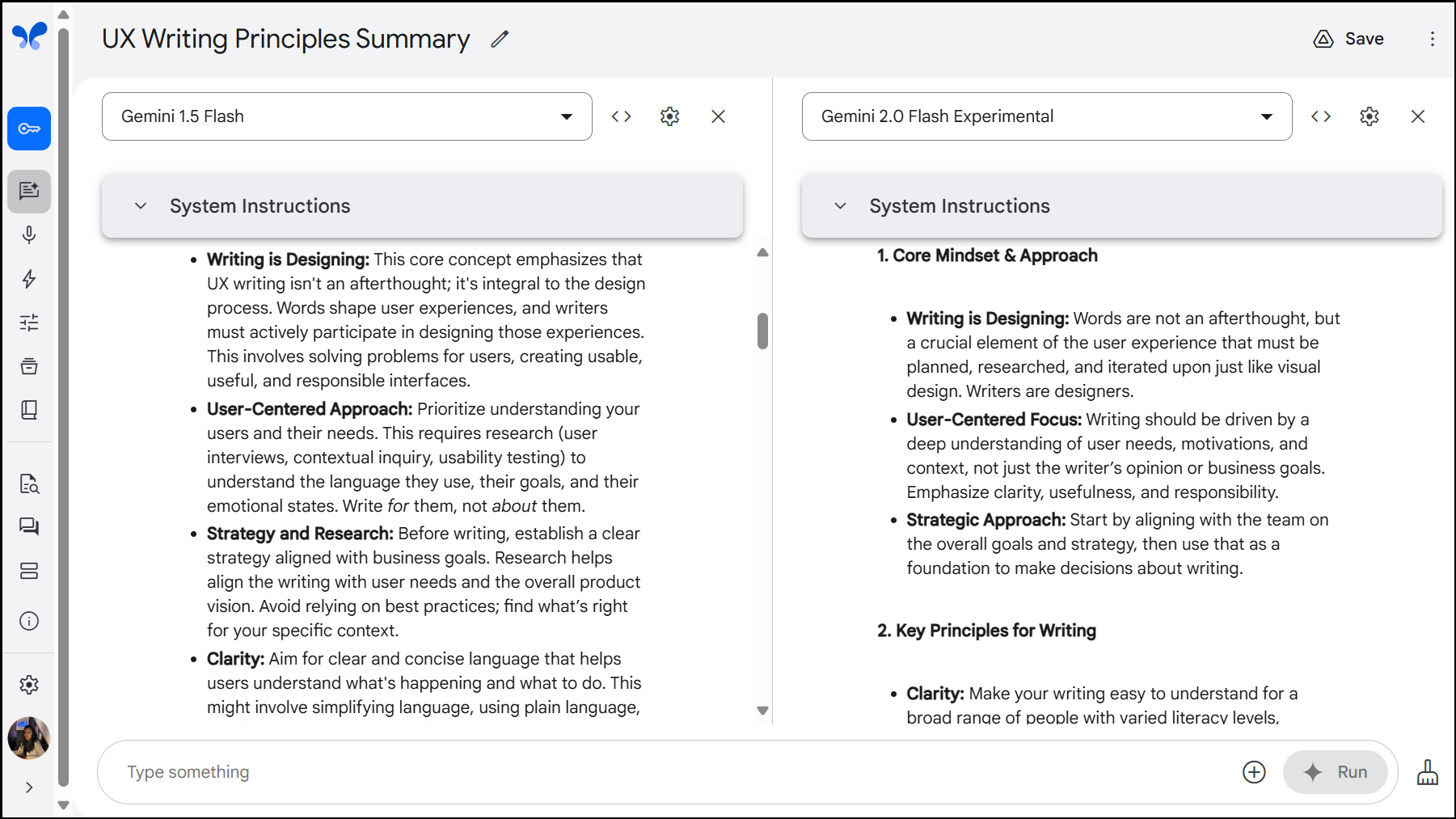

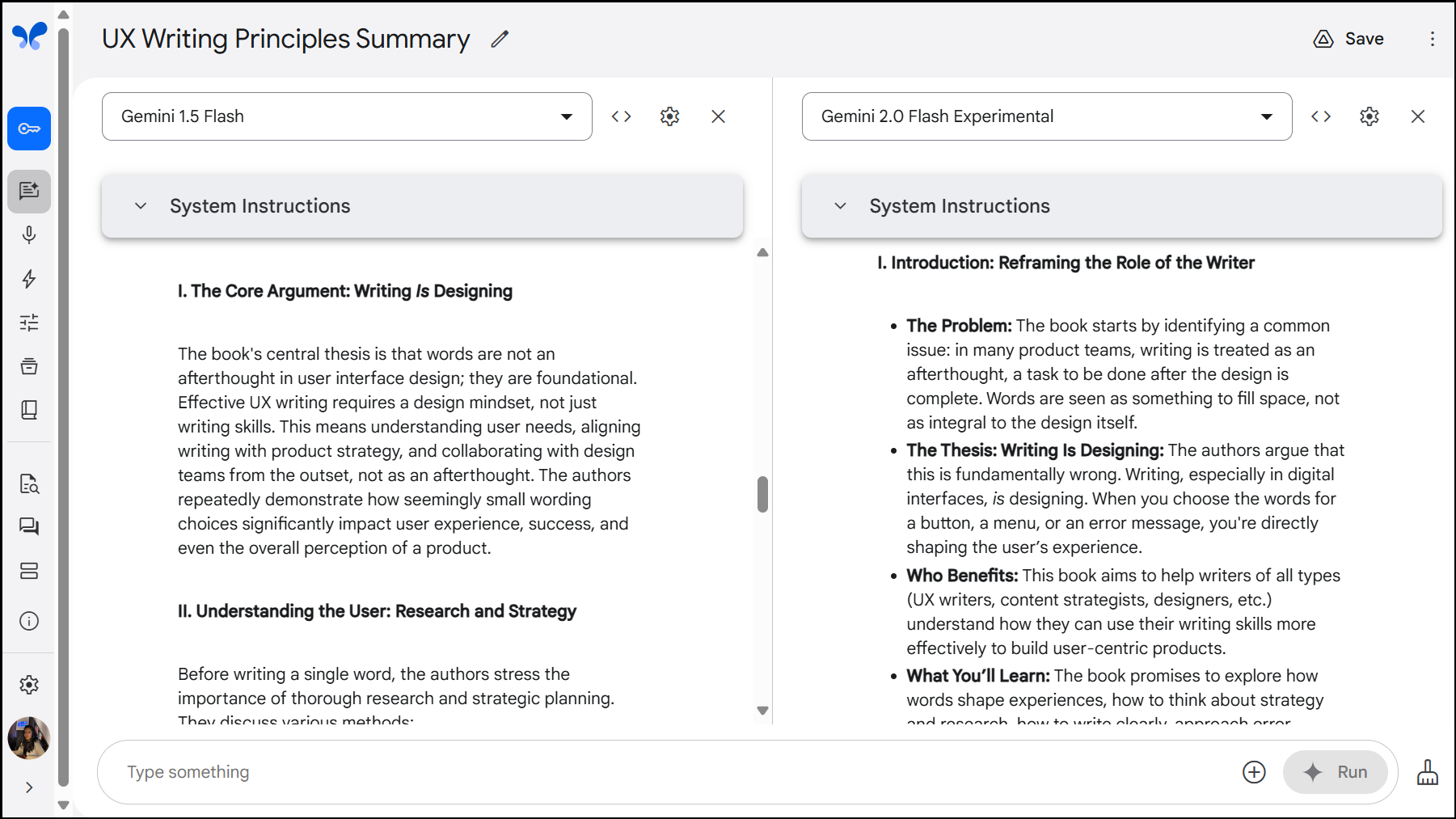

Test 1: Summarization

For the first test, I gave the two models a PDF of a book and asked them to summarize the key principles discussed in it. If you're using the standard Gemini interface, you can copy and paste the text of the PDF into the prompt box.

Prompt: Summarize the most important principles of UX writing discussed in this book.

While both models were able to provide an accurate summary, Gemini 2.0 Flash neatly organized the information into sections and went more in-depth than Gemini 1.5 Flash.

Even with a more detailed prompt giving precise instructions, Gemini 2.0 provided better summaries than Gemini 1.5.

Prompt: Summarize this book and divide the summary into different sections.

Gemini 2.0 tried to find the subtle nuances in the text, while Gemini 1.5 was happy with surface-level details.

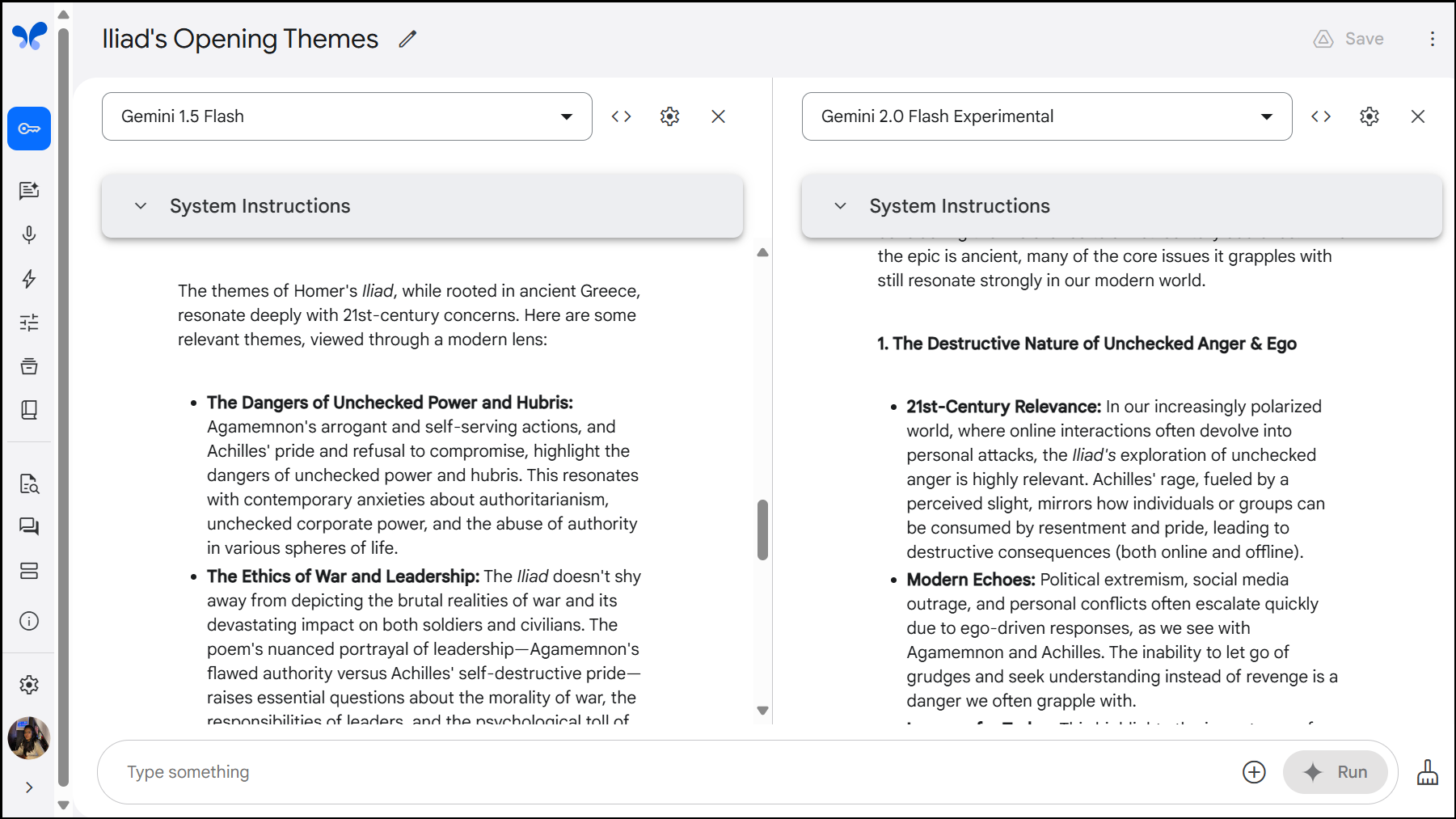

I also uploaded a PDF of Homer's The Iliad, as I wanted to test the models with a long text, and gave them the following prompt:

Prompt: What are the relevant themes in the text according to the 21st century?

It was interesting to see that the details and themes both models chose to focus on were sometimes drastically different, especially when the text was on the longer and more complicated side. With a shorter text, however, the points both models highlighted were similar; the only difference was in the depth and organization.

Clearly, Gemini 2.0 is better suited for summarizing tasks or when you want to use the AI to analyze any texts and use the outputs in assignments or presentations.

Test 2: Logical thinking

It was time to try the Gemini models for some logical questions to see how they fare.

I started with some relatively easy questions and gave both models a few riddles.

Prompt: I speak without a mouth and hear without ears. I have no body but I come alive with wind. What am I?

This is a classic riddle that most models get right. Gemini 1.5 got the riddle right away, but Gemini 2.0 came up with an answer that almost fit the bill, but not quite. The answer was "echo," but Gemini 2.0 came up with "whistle," citing the following explanation:

"I speak without a mouth..." A whistle makes sound but has no mouth.

"...and hear without ears." A whistle doesn't hear, but it resonates when air passes through it.

"I have no body..." A whistle is just an object, not a living thing.

"...but I come alive with wind." The wind/air is what makes a whistle sound.

Unfortunately, I forgot to save the chat before taking a screenshot. The next time I gave them the prompt, both answered it correctly. Both models also gave the correct answer to another riddle. Gemini 2.0 gives a full explanation for its answer, whereas Gemini 1.5 doesn't.

Then, I decided to test them with a rather difficult question that most models struggle with.

Prompt: Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner.

Unfortunately, neither model could crack this one.

Gemini 1.5 thought the correct stack would be:

Laptop

Book

Bottle

Eggs

Nail

Whereas Gemini 2.0 answered with the following:

Book

Laptop

Bottle

Nail

Eggs

So, when it comes to logical thinking, Gemini 2.0 does not show much improvement over its predecessor. Even Gemini 1.5 Pro, Gemini 2.0 Flash Thinking mode, and Gemini Experimental 1206 came back with wrong answers. Users who want to use AI for such tasks might want to look elsewhere altogether, for instance at OpenAI's o1 model.

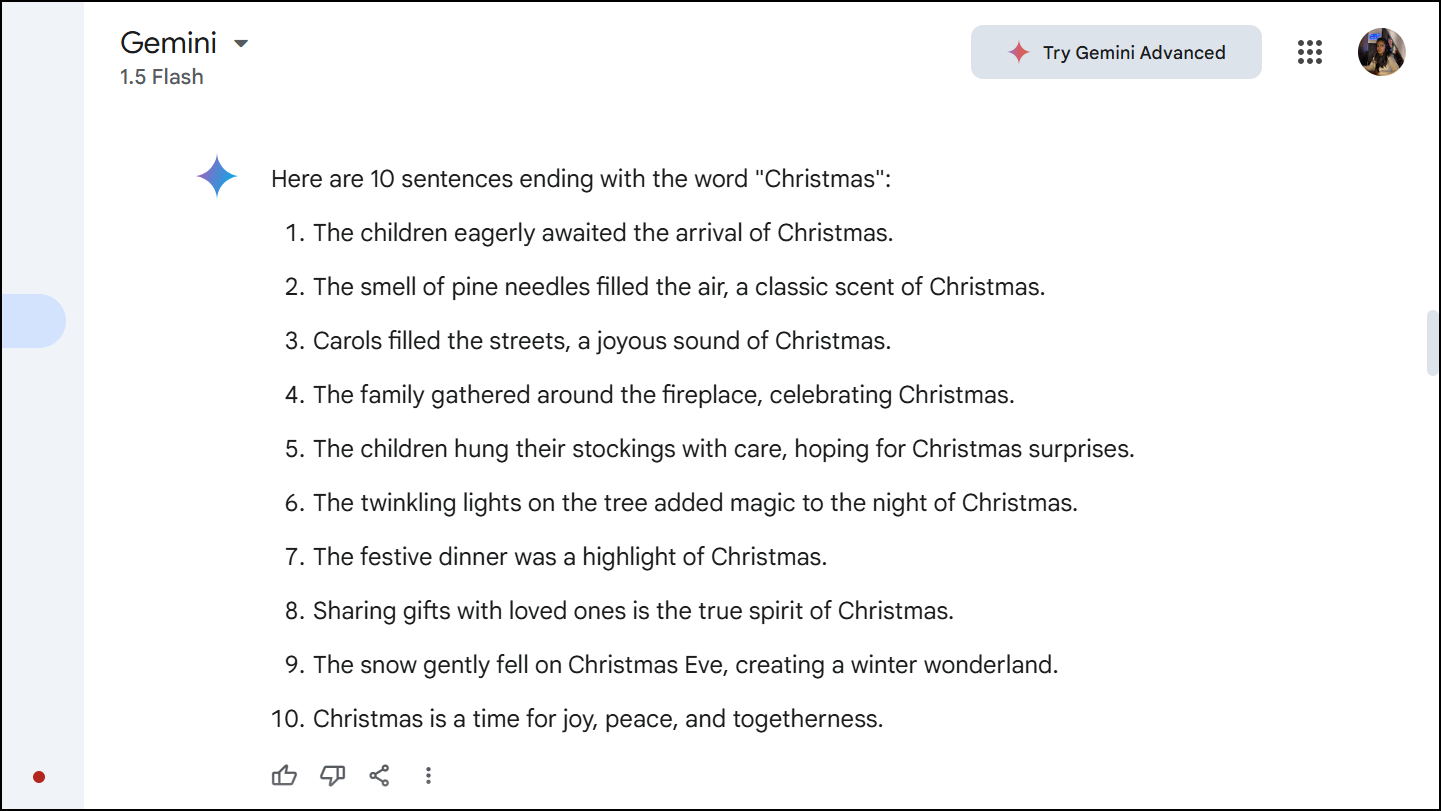

Test 3: End the sentence with a word

It's a pretty standard test that helps check a model's ability to follow the instructions in a prompt.

Prompt: Write 10 sentences ending with the word Christmas.

When using the models in the Google AI Studio, both models completed the task without any errors.

However, when using the Gemini interface, only Gemini 2.0 Flash performed admirably and ended all 10 sentences with the requested word. Gemini 1.5 only got 7 out of 10 sentences correct.

I was intrigued by this result and decided to test both models once more in both interfaces. I again got a similar result.

This showed me that Gemini 1.5 isn't very good at following all the instructions in the prompt in the Gemini interface while it can do so flawlessly in the Google AI Studio. This was again confirmed in the next test, which is interesting, to say the least.

Gemini 2.0, in this respect, does show an improvement in the Gemini interface, but is it really an improvement if 1.5 is somehow deliberately being made to perform worse there?
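Counting by hand gets tedious when you repeat this test across models and interfaces, so the check can be automated. The snippet below is a minimal sketch, assuming you have pasted the model's sentences into a Python list. The function names `ends_with_word` and `score_response` and the sample sentences are hypothetical illustrations, not actual model output.

```python
import string

# Characters to ignore at the edges of the final word (periods, quotes, etc.).
PUNCT = string.punctuation


def ends_with_word(sentence: str, word: str) -> bool:
    """Return True if the last word of `sentence` is `word`, ignoring case."""
    # Drop trailing whitespace and punctuation, e.g. 'Christmas!"' -> 'Christmas'.
    cleaned = sentence.strip().rstrip(PUNCT + " ")
    tokens = cleaned.split()
    if not tokens:
        return False
    # Also strip punctuation stuck to the front of the last token.
    return tokens[-1].strip(PUNCT).lower() == word.lower()


def score_response(sentences: list[str], word: str) -> int:
    """Count how many sentences end with the requested word."""
    return sum(ends_with_word(s, word) for s in sentences)


# Hypothetical sample sentences, not real Gemini output.
sample = [
    "We decorate the tree every Christmas.",
    "Christmas is my favorite time of the year.",
    "Nothing beats the smell of cookies at Christmas!",
]
print(f"{score_response(sample, 'Christmas')} of {len(sample)} sentences end with the word")
```

With the sample above, the second sentence fails the check because it starts with the target word rather than ending with it.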

Test 4: Creativity with some multimodality

The next test I decided upon was to ask both models to whip up a children's short story to test their creativity.

Prompt: Write a children's short story about a pigeon moving to a suburban city, where it befriends a cat, and their adventure around Christmas. Add two to three images to accompany the scenes in the narrative.

At first, I used the models in the Google AI Studio itself.

Surprisingly, the stories the two models created had a lot in common. They gave the characters the exact same names: Percy the pigeon and Clementine, the ginger cat with emerald eyes. They also chose the same suburban city for Percy to move to: Willow Creek.

In terms of creativity, there wasn't a drastic difference in the outputs; however, the story given by Gemini 2.0 was slightly more engaging as it used more dialogue.

Now, Google AI Studio did not generate images, as image generation is not among its capabilities. However, both models did insert 'prompts' for images in the story where required. So, I decided to try the Gemini interface for this prompt.

To my surprise, I was utterly disappointed by both models; I finally found out why I usually prefer other AI chatbots and avoid Gemini. The stories Gemini created through its public interface were nowhere near as good as the ones in Google AI Studio.

Again, Gemini could not come up with any new names for the characters. But that was the only similarity. And the whole reason why I shifted to Gemini in the first place – the images – was a disappointment too. Both models only created one image. At least Gemini 2.0 kept the story in mind for that one image.

The one that Gemini 1.5 whipped up was too generic.

While both stories were bad, the one by Gemini 2.0 was still better than the one by Gemini 1.5. The model again tried to make the story engaging by incorporating some dialogue. However, the plot lines of both left a lot to be desired.

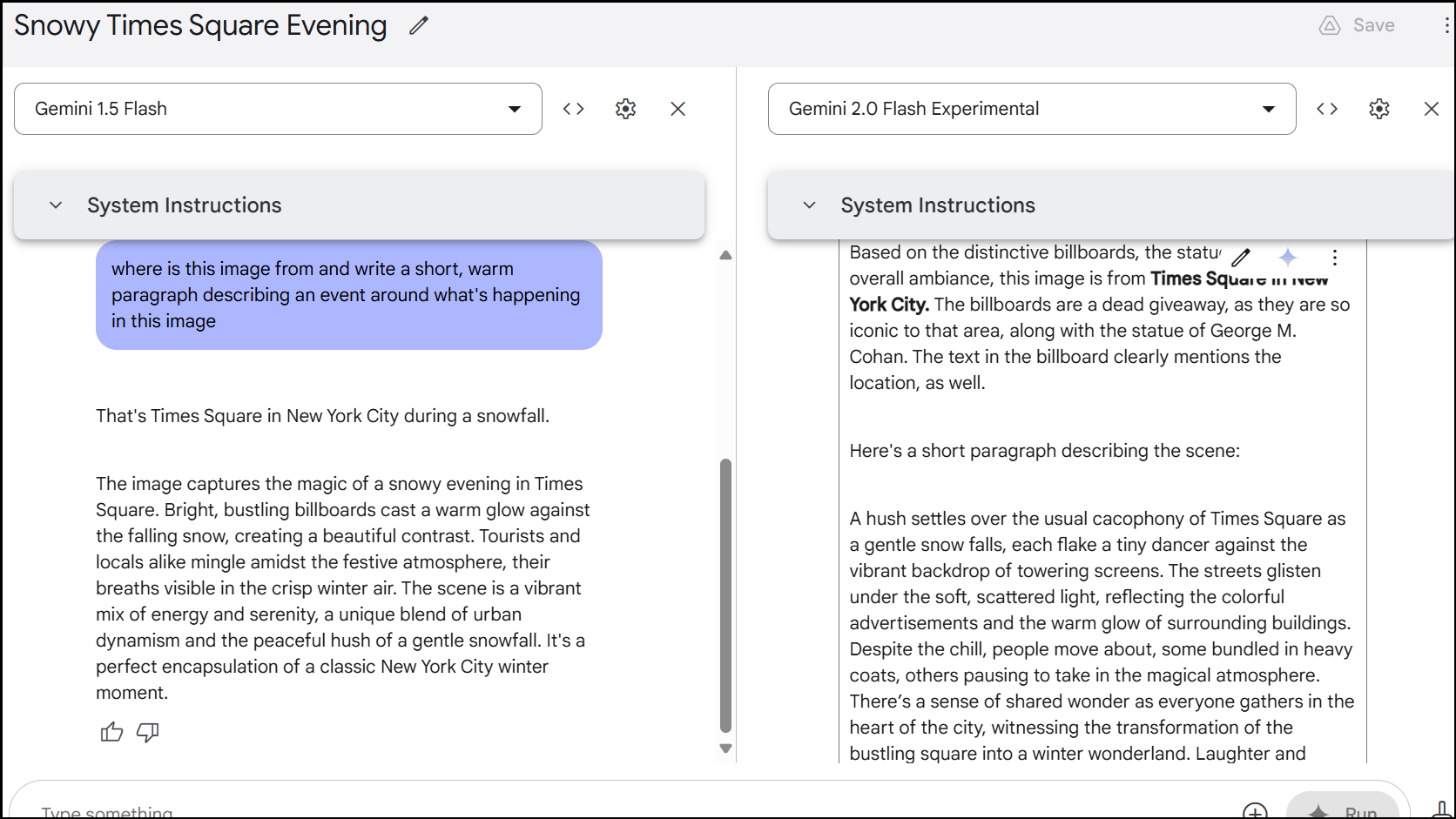

Test 5: Multimodal capabilities

Next, I uploaded an image to both models and gave them a prompt.

Prompt: Where is this image from and write a short, warm paragraph describing an event around what's happening in this image.

Both models correctly identified the image, but Gemini 2.0 also explained how it identified it. Gemini 2.0 effortlessly obliged with the second part of my request, whereas Gemini 1.5 had trouble with it. While it did describe the image, it ignored the rest of the request about making the paragraph warm.

This shows that Gemini 2.0 has improved when it comes to closely following prompts with multiple instructions.

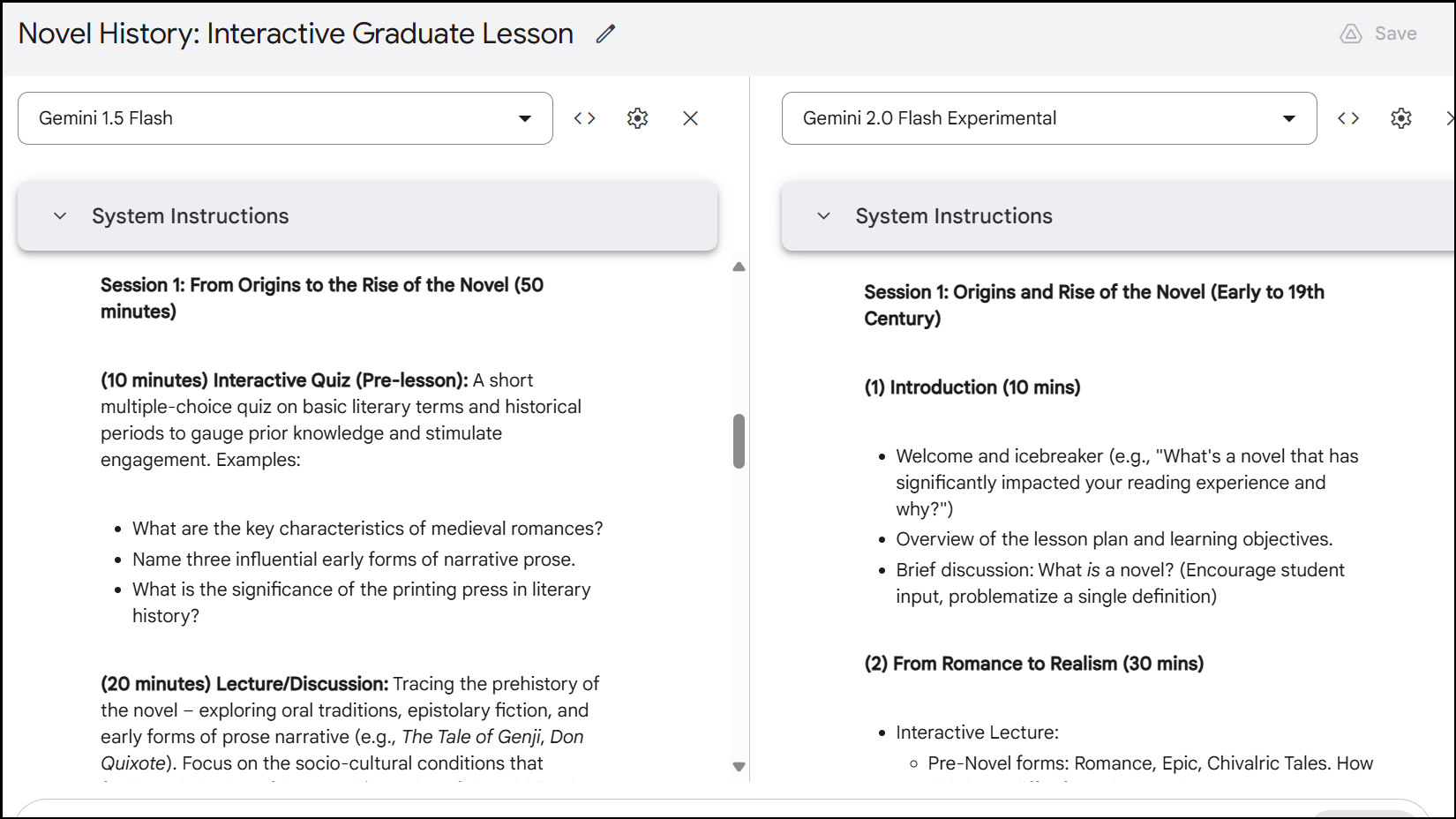

Test 6: Creating customized plans

AI can help you come up with customized lesson plans (if you're a teacher) or content plans (if you're a content creator). So, I decided to see whether Gemini 2.0 was better at helping than Gemini 1.5.

Prompt: Create a lesson plan about the history of the novel for English Grad. students. Make the lesson plan as interactive as you can with quizzes and other learning aids.

Both models came up with a usable plan, but Gemini 2.0 went the extra mile and created a lesson plan that was more in-depth. It understood the assignment and incorporated extra visual aids and quizzes to make the lesson plan more interactive.

I then tried another prompt, asking for a content calendar for social media. Gemini 2.0 vastly outperformed Gemini 1.5 in the level of depth it brought to the entire exercise.

All in all, if you want to enlist Gemini's help with your work, reach for Gemini 2.0 without any hesitation.

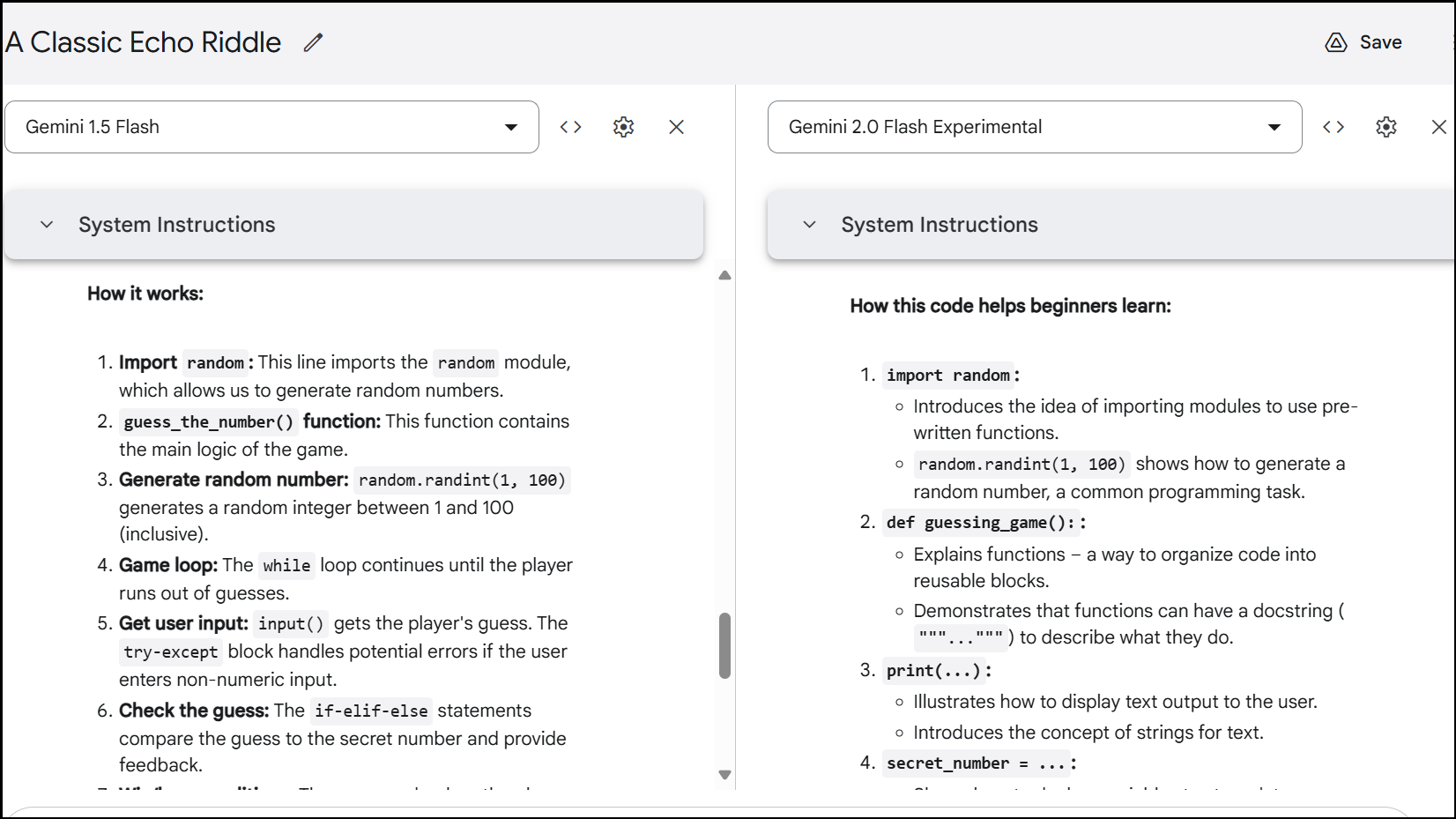

Test 7: Coding Test

No test is complete without a coding test. For this test, I gave them a simple prompt: Generate code for a simple game that can help beginners learn programming.

Both models generated Python code for a simple guessing game which worked fine and wasn't overly complicated.

But Gemini 2.0 gave a better explanation of how the code works, how to run it, and what steps a beginner should take next to learn more.
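For readers who haven't seen this kind of beginner exercise, here is a minimal sketch of a number-guessing game in Python. It is an illustrative reconstruction under my own assumptions, not the code either model produced; the function names (`new_secret`, `check_guess`, `play`) and the 1 to 100 range are hypothetical choices.

```python
import random


def new_secret(low: int = 1, high: int = 100) -> int:
    """Pick the number the player has to guess (range is an assumption)."""
    return random.randint(low, high)


def check_guess(secret: int, guess: int) -> str:
    """Compare a guess to the secret number and return a hint."""
    if guess < secret:
        return "too low"
    if guess > secret:
        return "too high"
    return "correct"


def play(secret: int, read_guess=input, show=print) -> int:
    """Ask for guesses until the secret is found; return the attempt count.

    `read_guess` and `show` default to input() and print(), but can be
    swapped out so the game can be tested without a keyboard.
    """
    attempts = 0
    while True:
        raw = read_guess("Guess a number between 1 and 100: ")
        try:
            guess = int(raw)
        except ValueError:
            # Invalid input does not count as an attempt.
            show("Please enter a whole number.")
            continue
        attempts += 1
        result = check_guess(secret, guess)
        show(f"Your guess is {result}.")
        if result == "correct":
            return attempts
```

To play interactively, call `play(new_secret())` from a Python prompt. Keeping the comparison logic in `check_guess` separate from the input loop is a design choice that makes the game easier for a beginner to read and extend.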

Unsurprisingly, Gemini 2.0 did show improvements over Gemini 1.5, but the improvements weren't as drastic as I had hoped. The leap from Gemini 1.5 Flash to 2.0 Flash is evolutionary rather than revolutionary. While 2.0 delivers noticeable enhancements in depth, organization, and usability, particularly in professional and academic contexts, it does not consistently outperform its predecessor in logical reasoning or casual creative tasks. For users deciding between the two, Gemini 2.0 is undoubtedly the better model for structured and demanding tasks, but the choice might be less clear for casual users seeking broad-based improvements.

The surprising discovery throughout this entire exercise turned out to be the difference between the models when using Google AI Studio versus the Gemini interface. If you want to use Gemini, especially as a free user, I'd certainly recommend going the Google AI Studio route if you don't need image generation or access to Google Search.