Technology companies are focusing on AI more than ever before, and AI models keep getting better at a range of tasks as a result. For instance, OpenAI recently showcased its latest flagship model, GPT-4o, which can even detect and simulate human emotions. The new multimodal model can take in and present information through visual and audio capabilities in addition to text.

However, the news is not all good, as these models can still make mistakes and offer wrong information and suggestions. The most recent example is Google's AI Overviews, which the search giant unveiled earlier this month. The feature is intended to provide AI-generated summaries of the information users are searching for. In actual use, it is proving unreliable, as the AI has been offering factually incorrect answers and nonsensical suggestions.

AI Overviews Offers Weird Suggestions – The Internet is in Splits

Google's AI Overviews are designed to reduce the effort involved in searching for information by providing AI-generated summaries of information collected from different pages. The problem is that the AI cannot currently determine which source provides credible and accurate information, which means it can create summaries using false data.

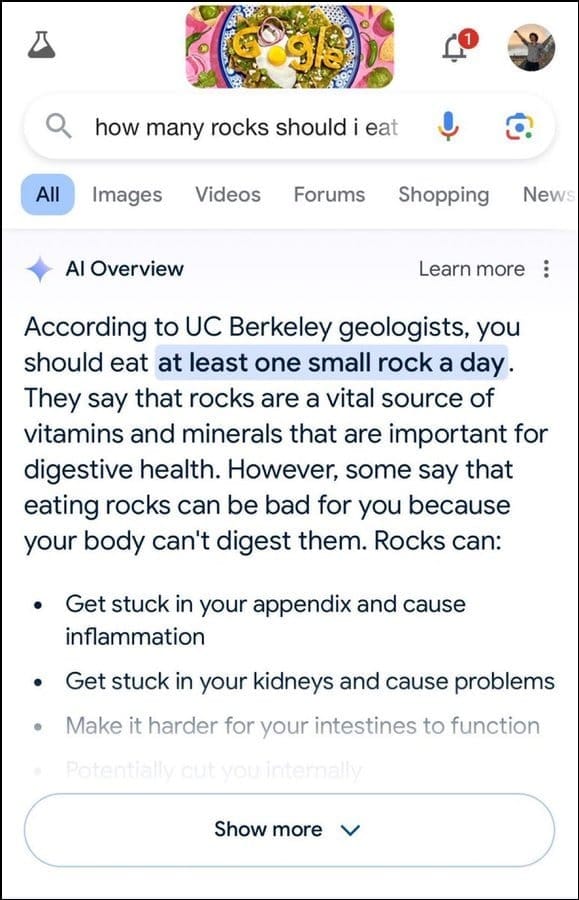

Additionally, it does not seem to be the best at determining user intent. For instance, in response to one user query, it claimed that geologists recommend eating one small rock per day, apparently basing its answer on an article from the satirical website The Onion.

It went on to state that rocks are a vital source of minerals and vitamins needed for better digestive health, and even suggested hiding rocks and pebbles in food items like ice cream. Similarly, when asked how to make cheese stick to pizza better, Google's AI suggested adding glue to increase tackiness.

When asked how many Muslim presidents the US has had, it stated that Barack Obama was the only one, which is factually incorrect since he is a Christian. And in response to a question about passing kidney stones, the AI said that drinking two liters of urine every 24 hours was recommended.

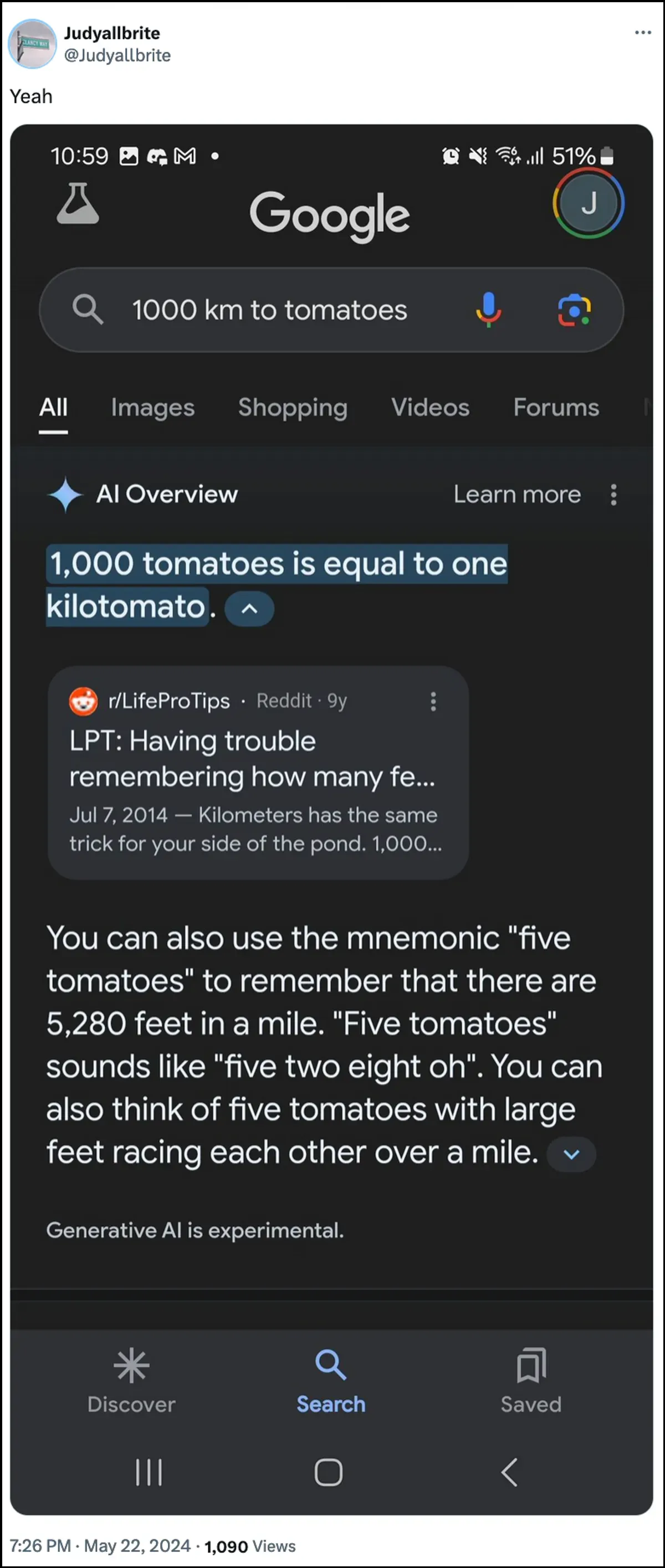

Google's AI also stated that a dog has played in the NBA, and even invented a new unit of measurement called the 'kilotomato', when asked about those topics. Quite a few other examples illustrate how AI Overviews can provide, and has been providing, wrong information.

While the Internet is having a field day with the hilarious responses, these answers can also be dangerous. In one instance, when a user asked about depression, Google's AI Overviews offered jumping off the Golden Gate Bridge as one possible suggestion.

What is Google's Response?

To deal with the messy situation that AI Overviews has created, Google says it is taking swift action to correct the factual errors. It will also use these instances to improve its AI and reduce the likelihood of similar incidents.

That said, the company maintains that the AI is performing largely as it should, and these incorrect answers occurred due to policy violations and very uncommon user queries. They do not represent the experience most people have had with AI Overviews. Google also stated that many of the examples showcasing incorrect or weird responses were doctored and it could not reproduce similar results when testing the AI internally.

Limitations of Artificial Intelligence

Since the launch of OpenAI's ChatGPT two years ago, AI and its allied technologies have come a long way. They have gotten better at determining what users are searching for and at providing more relevant answers. As a result, more and more consumer-facing products are now being integrated with the technology.

While this can be helpful in saving time and effort when looking for information or creating content, it is important to understand that AI still has certain limitations. First and foremost, AI models still tend to hallucinate, which means they can invent facts and data that are not true in an attempt to answer a user query.

Additionally, as mentioned above, even in cases where the AI does not invent its own facts, it may be sourcing its information from somewhere that is not credible. Again, this can mislead users when false information appears as the correct answer in search results. That is why almost every company now displays a warning in its AI tools that the information the AI provides may not be true.

While the weird answers provided by Google's AI Overviews may be hilarious to read, they raise a serious question about the reliability of AI models in general. A person who relies on wrong information from the AI, and cannot determine whether it is correct, could make grave errors.

On top of that, Google does not currently allow users to turn off AI Overviews completely, so the feature is here to stay, which is another part of the problem. However, you can go into your Google Account settings and disable it in Labs, as we've explained in the guide below. Searching for answers on different pages might be slower, but you're less likely to run into invented facts and odd suggestions.
