Running advanced AI models like DeepSeek-V3-0324 locally gives you greater control over your data, removes the dependency on a remote API, and lets you tailor the model to your needs. DeepSeek-V3-0324 is a powerful, 671-billion-parameter language model that requires careful setup and configuration. Below, you'll find a structured, step-by-step guide to getting this model up and running on your own hardware.

System Requirements

Before starting, ensure your hardware meets the minimum requirements. The DeepSeek-V3-0324 model is substantial, and you'll need:

- A high-performance GPU (NVIDIA GPUs recommended, such as RTX 4090 or H100).

- At least 160GB of combined VRAM and RAM for good performance. The model can technically run on systems with less, but generation slows down significantly.

- Storage space: At least 250GB free (the recommended 2.7-bit quantized version is approximately 231GB).

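If you're using Apple hardware (such as a Mac Studio with M3 Ultra), you can run the 4-bit quantized model, but the weights must fit in unified memory: at 4 bits per weight, 671 billion parameters come to roughly 335GB before any runtime overhead, so plan for a high-memory configuration such as the 512GB M3 Ultra.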
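As a rough sanity check on the sizes quoted above, weight storage scales with parameter count times bits per weight. The sketch below is a back-of-the-envelope estimate, not a measurement: the helper names are illustrative, and real model files differ somewhat because individual tensors are quantized at different precisions and the files carry metadata.

```python
import shutil

def estimated_weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

def has_free_space(path: str, required_gb: float) -> bool:
    """Return True if the filesystem holding `path` has at least required_gb free."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb

N_PARAMS = 671e9  # parameter count stated in this guide

print(f"~2.7-bit estimate: {estimated_weights_gb(N_PARAMS, 2.7):.0f} GB")
print(f"4-bit estimate:    {estimated_weights_gb(N_PARAMS, 4):.0f} GB")
print("At least 250 GB free in current directory:", has_free_space(".", 250))
```

The 2.7-bit estimate lands slightly below the 231GB download size, which is expected for an average-bits figure; treat the result as an order-of-magnitude guide when choosing a quantization.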

Step-by-Step Instructions to Run DeepSeek-V3-0324 Locally

Method 1: Using llama.cpp (Recommended)

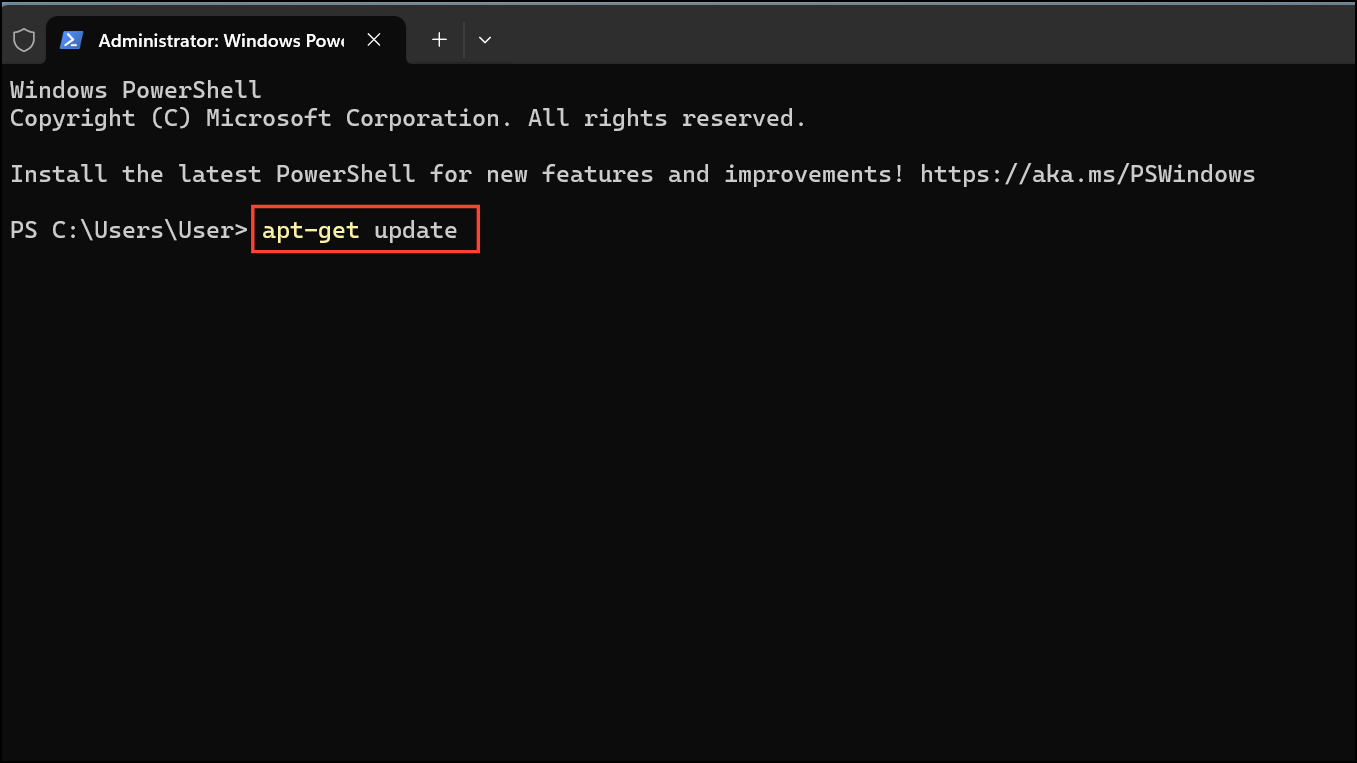

Step 1: First, install the necessary dependencies and build the llama.cpp library. On Debian or Ubuntu, open your terminal and run the following commands (prefix the apt-get lines with sudo if you are not running as root):

apt-get update
apt-get install pciutils build-essential cmake curl libcurl4-openssl-dev -y
git clone https://github.com/ggml-org/llama.cpp
cmake llama.cpp -B llama.cpp/build -DBUILD_SHARED_LIBS=OFF -DGGML_CUDA=ON -DLLAMA_CURL=ON
cmake --build llama.cpp/build --config Release -j --clean-first --target llama-quantize llama-cli llama-gguf-split
cp llama.cpp/build/bin/llama-* llama.cpp

This process compiles the llama-cli, llama-quantize, and llama-gguf-split binaries with CUDA support and copies them into the llama.cpp directory.
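Before moving on, it can help to confirm that the build actually produced the binaries. The following is a minimal sketch, assuming the three target names from the cmake --build command and the llama.cpp directory used in the cp step; the function name is illustrative.

```python
from pathlib import Path

# Targets named in the cmake --build command above.
EXPECTED_BINARIES = ("llama-quantize", "llama-cli", "llama-gguf-split")

def missing_binaries(directory: str) -> list[str]:
    """Return the expected binaries that are not present as files in `directory`."""
    root = Path(directory)
    return [name for name in EXPECTED_BINARIES if not (root / name).is_file()]

missing = missing_binaries("llama.cpp")
if missing:
    print("Missing binaries:", ", ".join(missing))
else:
    print("All expected binaries found.")
```

If anything is reported missing, re-run the build step and check its output for compiler or CUDA errors.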

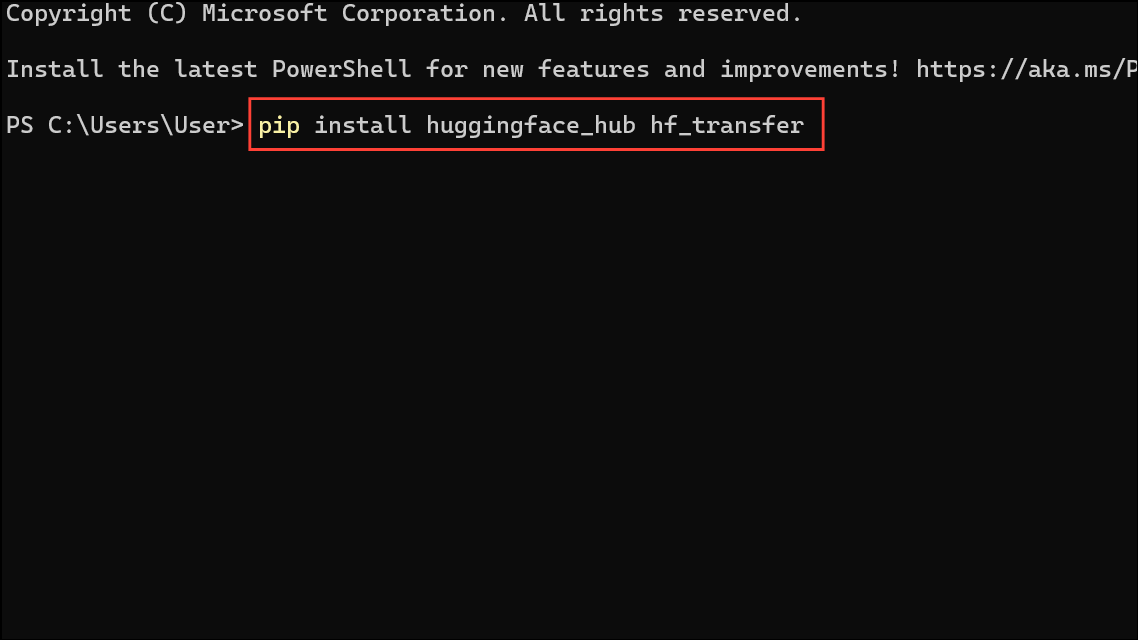

Step 2: Next, download the DeepSeek-V3-0324 model weights from Hugging Face. Install Hugging Face's Python libraries first:

pip install huggingface_hub hf_transfer

Then, run the following Python snippet to download the recommended quantized version (2.7-bit) of the model:

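import os

# Enable the faster hf_transfer backend; set this before importing huggingface_hub.
os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

from huggingface_hub import snapshot_download

snapshot_download(
    repo_id="unsloth/DeepSeek-V3-0324-GGUF",
    local_dir="unsloth/DeepSeek-V3-0324-GGUF",
    allow_patterns=["*UD-Q2_K_XL*"],  # download only the 2.7-bit dynamic quant files
)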

This step may take some time depending on your internet speed and hardware.
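Because the model is split across several files, an interrupted download can leave a shard missing. The sketch below checks for gaps, assuming the "-00001-of-00006.gguf" naming seen in the model path used in this guide and the local directory from the download snippet; the helper name is illustrative.

```python
import re
from pathlib import Path

# Matches the split-file suffix used in this guide, e.g. "-00001-of-00006.gguf".
SHARD_PATTERN = re.compile(r"-(\d{5})-of-(\d{5})\.gguf$")

def missing_shards(filenames: list[str]) -> list[int]:
    """Return the shard numbers that are absent, based on the '-of-NNNNN' total."""
    found: set[int] = set()
    total = 0
    for name in filenames:
        match = SHARD_PATTERN.search(name)
        if match:
            found.add(int(match.group(1)))
            total = max(total, int(match.group(2)))
    return [i for i in range(1, total + 1) if i not in found]

model_dir = Path("unsloth/DeepSeek-V3-0324-GGUF/UD-Q2_K_XL")
names = [p.name for p in model_dir.glob("*.gguf")] if model_dir.is_dir() else []
if names:
    print("Missing shards:", missing_shards(names))
else:
    print("No .gguf files found in", model_dir)
```

An empty list means every shard number up to the stated total is present. This only checks file names, not file integrity, so re-running the download snippet is still the safer way to repair a partial download.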

Step 3: Now, run the model using llama.cpp’s CLI. Use the following command to test your setup with a prompt:

./llama.cpp/llama-cli \
    --model unsloth/DeepSeek-V3-0324-GGUF/UD-Q2_K_XL/DeepSeek-V3-0324-UD-Q2_K_XL-00001-of-00006.gguf \
    --cache-type-k q8_0 \
    --threads 20 \
    --n-gpu-layers 2 \
    -no-cnv \
    --prio 3 \
    --temp 0.3 \
    --min_p 0.01 \
    --ctx-size 4096 \
    --seed 3407 \
    --prompt "<|User|>Write a simple Python script to display 'Hello World'.<|Assistant|>"

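Adjust --threads to match your CPU core count and raise --n-gpu-layers as far as your VRAM allows. With -no-cnv, llama-cli runs the single prompt instead of starting an interactive chat and prints the generated Python script directly in the terminal.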
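If you plan to call the model from a script, the command can be assembled programmatically. This is a sketch under stated assumptions: the flags and values are copied from the command in this guide, the function name and its parameters are illustrative, and the default thread count comes from os.cpu_count(), which reports logical rather than physical cores.

```python
import os
import shlex

def build_llama_cli_command(model_path, prompt, n_gpu_layers=2, threads=None, ctx_size=4096):
    """Assemble the llama-cli argument list used in this guide."""
    if threads is None:
        # Logical core count; you may want to lower this to the physical core count.
        threads = os.cpu_count() or 1
    return [
        "./llama.cpp/llama-cli",
        "--model", model_path,
        "--cache-type-k", "q8_0",
        "--threads", str(threads),
        "--n-gpu-layers", str(n_gpu_layers),
        "-no-cnv",
        "--prio", "3",
        "--temp", "0.3",
        "--min_p", "0.01",
        "--ctx-size", str(ctx_size),
        "--seed", "3407",
        "--prompt", prompt,
    ]

cmd = build_llama_cli_command(
    "unsloth/DeepSeek-V3-0324-GGUF/UD-Q2_K_XL/DeepSeek-V3-0324-UD-Q2_K_XL-00001-of-00006.gguf",
    "<|User|>Write a simple Python script to display 'Hello World'.<|Assistant|>",
)
# Print a shell-quoted version for inspection instead of launching the model here.
print(shlex.join(cmd))
```

To actually launch the model, pass the list to subprocess.run(cmd, check=True). Passing a list rather than a single string avoids shell quoting problems with the prompt.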

Method 2: Running on Apple Silicon (MLX)

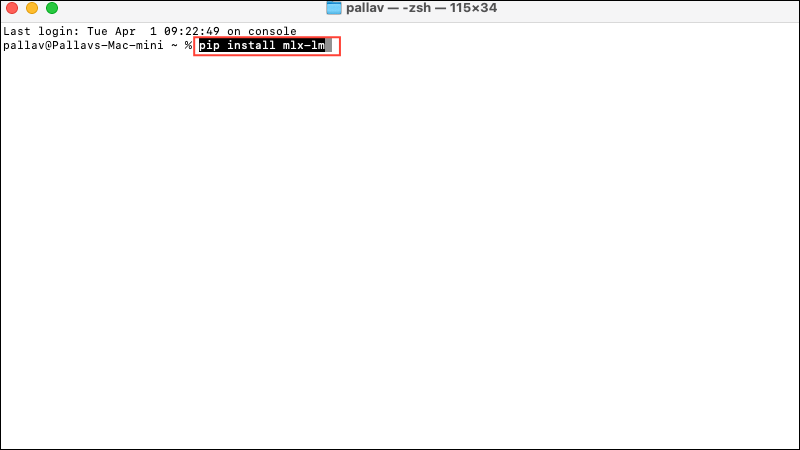

For macOS users with Apple M-series chips, you can efficiently run the quantized 4-bit model using the MLX framework.

Step 1: Install MLX with pip:

pip install mlx-lm

Step 2: Load and run the DeepSeek-V3-0324 model with MLX:

from mlx_lm import load, generate

# Downloads the 4-bit MLX weights from Hugging Face on first use.
model, tokenizer = load("mlx-community/DeepSeek-V3-0324-4bit")

prompt = "Write a Python function that returns the factorial of a number."

# Wrap the prompt in the model's chat template when one is defined.
if tokenizer.chat_template is not None:
    messages = [{"role": "user", "content": prompt}]
    prompt = tokenizer.apply_chat_template(messages, add_generation_prompt=True)

response = generate(model, tokenizer, prompt=prompt, verbose=True)

print(response)

This method provides a balance between resource usage and performance on Apple Silicon.

Troubleshooting Common Issues

- Compilation errors with llama.cpp: Ensure your CUDA toolkit and GPU drivers are up to date. If the build still fails, try compiling without CUDA by changing -DGGML_CUDA=ON to -DGGML_CUDA=OFF in the first cmake command. The model will then run on the CPU only.

- Slow inference speed: If the model runs slowly, consider reducing the context size (--ctx-size) or increasing the number of layers offloaded to the GPU (--n-gpu-layers).

- Memory issues: If your system runs out of memory, reduce --n-gpu-layers or switch to a smaller quantized model.
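When tuning --n-gpu-layers, a starting value can be estimated from the available VRAM. The heuristic below is only a rough sketch: it assumes all layers are the same size, which is not strictly true for this model; the layer count of 61 and the reserve_gb headroom for the KV cache and CUDA buffers are assumptions rather than values from this guide; and the function name is illustrative.

```python
import math

def estimate_gpu_layers(vram_gb: float, model_gb: float, n_layers: int,
                        reserve_gb: float = 4.0) -> int:
    """Rough count of layers that fit in VRAM, assuming equal-sized layers."""
    if n_layers <= 0 or model_gb <= 0:
        raise ValueError("n_layers and model_gb must be positive")
    per_layer_gb = model_gb / n_layers
    # Keep some VRAM free for the KV cache and runtime buffers (assumed headroom).
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))

# Example: a 24 GB GPU with the ~231 GB quantized model, assuming 61 layers.
print("Suggested starting point:", estimate_gpu_layers(24, 231, 61))
```

Treat the result as an upper bound to try first. If llama-cli reports an out-of-memory error, lower the value step by step until the model loads.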

Now you're ready to run the DeepSeek-V3-0324 model locally. This setup gives you the flexibility to experiment and integrate advanced language capabilities directly into your workflows. Check the model repositories on Hugging Face from time to time for updated weights.